Looking at OpenAI's Go-to-Market and Product Positioning

Okay, it’s no surprise that ML is huge, huge, huge, and companies that offer ML services are attracting similarly huge attention and valuations. A few of them seem to overlap a bit, at least in the eyes of investors and customers. The ones worth examining are OpenAI, Hugging Face, Cohere, and AI21 Labs. Through Algolia, I’ve worked on products that used OpenAI, and I’ve evaluated Hugging Face (many times), Cohere, and AI21. It’s been a good opportunity to see the different approaches each is taking with its go-to-market.

In this post, we’re going to look at OpenAI’s product offerings and positioning. I’ve been lucky enough to work with OpenAI, through Algolia, since February of 2020. And, while we’re winding down what we’ve built with OpenAI’s GPT-3, the past couple of years have me long on OpenAI as a whole.

(If you’d like to see more in this series, you can see my previous post on Hugging Face and their go-to-market.)

OpenAI

OpenAI is the first company building large-scale language models that has really attracted attention outside of a circle of ML practitioners. Since the announcement of the GPT-3 API, we’ve seen even non-developers building on top of it like never before. Some of this interest may have come from the sheer size of the model, but most of it probably came from the interface. Instead of writing seemingly opaque code, you could get inferences much more easily than most people had ever seen before.

Q: What is human life expectancy in the United States?

A: Human life expectancy in the United States is 78 years.

Q: What is the meaning of life?

This snippet would generally come back with an answer in the expected format.

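This way of working, where the prompt itself supplies an example or two instead of any model training, is usually called few-shot learning (one-shot, when there is a single example as here). It isn’t the first of its kind, but it is certainly the first that has made an impact beyond ML practitioners.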
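To make the prompt format concrete, here is a minimal sketch of how a question-and-answer prompt like the one above might be assembled as a plain string. The helper name `build_qa_prompt` is hypothetical, and ending the prompt with a bare `A:` is an assumption about how you might nudge the model to answer in the same format. The sketch stops short of calling any API; it only builds the text that would be sent.

```python
def build_qa_prompt(examples, question):
    """Assemble a Q/A prompt: worked examples first, then the open question."""
    blocks = [f"Q: {q}\n\nA: {a}" for q, a in examples]
    # Assumption: end with a bare "A:" so the completion fills in the answer.
    blocks.append(f"Q: {question}\n\nA:")
    return "\n\n".join(blocks)


# The single worked example from the snippet above.
examples = [
    (
        "What is human life expectancy in the United States?",
        "Human life expectancy in the United States is 78 years.",
    ),
]
prompt = build_qa_prompt(examples, "What is the meaning of life?")
print(prompt)
```

Adding more worked examples to the list is the main lever here, and that trial and error is a big part of what prompt engineering looks like in practice.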

In reality, this interface is probably more complex than a traditional programming interface. Because GPT-3 is general purpose (it can be used for classification, general text generation, sentiment analysis, and more), and the interface is via natural language, a new skill of “prompt engineering” is necessary. Prompt engineering means figuring out the right natural language prompt to use in order to get the API to respond the way you want. This is not easy, and is much more difficult than using a client library to classify text into one of three classes. Of course, the flip side of that difficulty is that no model training is necessary.

It’s also worth noting that, especially for text generation, GPT-3 is magical… 90% of the time. The other 10% of the time, the generated text is either nonsensical, or there’s something subtly off.

But still, that doesn’t matter too much, as OpenAI has captured people’s imaginations, and there are people building products and features on top of the OpenAI API.

So we’ve seen that OpenAI has attracted the interest of people who aren’t ML engineers in a way that no other language model or ML company has before—is this OpenAI’s ICP? How are they handling their go-to-market? Let’s take a look.

OpenAI’s Products

Unlike what we saw with Hugging Face, OpenAI isn’t going broad. They only have three products: GPT-3, Codex, and DALL·E 2. “Three” products, but they are all conceptually very similar. You could summarize each broadly with “ML-driven {blank} generation through language prompts,” where blank is “natural language” for GPT-3, “code” for Codex, and “images” for DALL·E.

This conceptual link is nice for multiple reasons. Generally, when a company offers products that share certain core similarities but serve different use cases, there is an opportunity to increase ARR from existing customers. This expansion is valuable because the customer’s LTV goes up while the CAC doesn’t. (In reality, except for a 100% self-serve business, which OpenAI is not, there’s still an expansion CAC, but it’s much cheaper than acquiring new revenue.)

Except I don’t believe that these horizontal offerings will drive much expansion revenue for OpenAI, for the simple reason that both Codex and DALL·E are niche products. How many companies will need to integrate text generation, code generation, and image generation into their products? Not so many. This means that each of these products will target different customers, although we’ll see that they have similar positioning.

Let’s first examine who might be the ICP for each product.

Codex

For Codex, you could say that the target customer is “every company that hires developers,” but I don’t think that’s true. These companies won’t use Codex directly. That’s too inefficient. Instead, they will use a service that uses Codex, whether that’s GitHub, IntelliJ, Microsoft, or what-have-you. These tool makers can integrate Codex into their IDEs or text editors, and pass the cost on to the end users. The number of companies that monetize these tools, either directly or indirectly, is really small.

Remember, though, that a total addressable market (TAM) consists not just of how many companies would use a service, but of how much they will, collectively, pay. If TAM looked only at the number of companies, there would be no airplane manufacturers (or, for that matter, airplane part manufacturers). If these big companies have enough users generating code through their developer tools, that can lead to a good amount of revenue for OpenAI, because OpenAI isn’t selling an individual license to each of these companies, but is charging based on usage.

So we see that the ideal customer for Codex is at a company that builds developer tools with a large end-user population. More specifically, the customer will almost certainly be on the product team, and so can be expected to be heavily technical, as well.

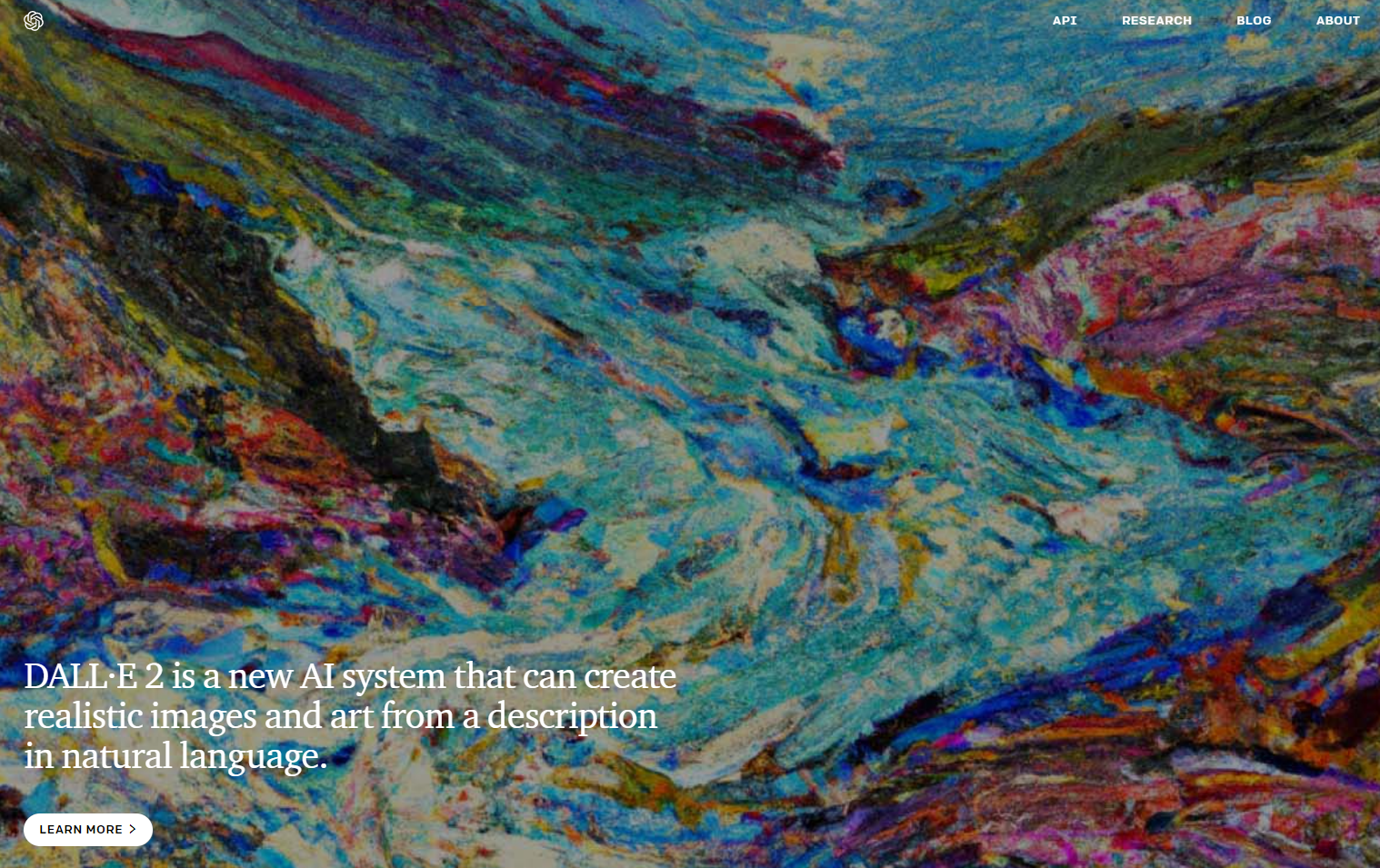

DALL·E 2

DALL·E 2, meanwhile, is more an impressive display of technology than it is a product. (And what an impressive display it is.) This may be my B2B SaaS background bias showing, but it’s difficult for me to think of many use cases where image generation is necessary. Sure, there will be some, but again: it’s niche. Even more so when we recall what we discussed above: 90% magical, 10% unusable. So you won’t, for example, integrate DALL·E directly into a video game to “breathe” characters into existence.

If there is a market for DALL·E, it’s probably in more “creative” domains. For example, perhaps a children’s book author could use the technology to illustrate a story. Or it could be used to create header images for blog posts. I’ve seen, for example, outputs from the model of a person holding a sign saying a specified text.

All of this is to say that I’m not sure who the ideal customer is for DALL·E. Perhaps a designer short on time, or a writer without design skills? Unlike Codex, I don’t think the ideal customer is a technical profile. OpenAI hints at the same:

Users have told us that they are planning to use DALL·E images for commercial projects, like illustrations for children’s books, art for newsletters, concept art and characters for games, moodboards for design consulting, and storyboards for movies.

Another possible path for DALL·E is to take a similar approach to Codex, and bundle it within creative tools. You know what would be perfect? Adding DALL·E into PowerPoint and Word as a 21st century replacement for clip art. OpenAI and Microsoft are already partners through Microsoft’s investment in the company, so I would be disappointed if this conversation hasn’t already happened.

So, while Codex is a niche product around code, and DALL·E is a niche product around more visually “creative” endeavors, OpenAI has one more product that is much broader.

GPT-3

The third product we’ll discuss is the first product OpenAI offered: GPT-3. It’s the product that deserves the most analysis, because it’s the one that I believe has the largest TAM.

I think GPT-3 has the widest reach because it works on text, not on images or code. Few people work on code day-to-day and few work with graphics, but everyone works with text.

That wouldn’t matter, though, if GPT-3 weren’t flexible. We already saw above an example of how GPT-3 can be used for natural language generation (NLG), and indeed this is where the initial interest came from, as a sort of “simple” auto-completion of text. This is immediately accessible to people. They see the same with their mobile keyboards, and are increasingly seeing the same inside Gmail or Google Docs.

The striking thing about GPT-3, though, was just how powerful its NLG could be. While Google Docs autocompletion offers a few words of prose, large language models like GPT-3 could do so much more.

I once needed fake data around audiobooks—titles, years of publication, author names, and synopses. I’m no good at prompt engineering, but I was able to get 100 books back in a few minutes: much less time than coming up with it on my own. And much less mind-numbing.

My toy example, though, downplays the possible applications.

Still not sick of code after our look at Codex? Well, GPT-3 can explain what code snippets do. Simon Willison got GPT-3 to explain a regular expression and other code for him in plain English.

There are business applications, too, of course. Viable uses GPT-3 to summarize text. There are other models out there that summarize text and summarize it well, but Viable’s experience is a hybrid q-and-a/summarization.

Of course, anything that can be done well will eventually be done by ad-tech, and that’s true for GPT-3, as well. Adflow uses the technology to write ad copy. I’ve written ad copy, and it’s dreadfully boring work. But it’s also high leverage: well performing ads directly bring in revenue and can be seen at a high volume, so even a small net increase in performance leads to a large net increase in revenue.

The really interesting thing is, surprisingly, not the NLG at all, but the ability of the model to generalize. Often when we talk about ML generalization, we talk about domains. This is true for GPT-3, which can handle sports as well as it can handle code. But here, instead, we’re talking about generalization of tasks.

Matt Payne at width.ai wrote about using GPT-3 for product categorization. By feeding the model details about a product, like the title, description, and details, GPT-3 would return matching categories. Again, there are models that do this, but for GPT-3 to do it without training is impressive.
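To give a feel for what categorization without training could look like, here is a minimal sketch. It is not the approach from that article: the prompt wording, the helper names `build_category_prompt` and `parse_category`, and the sample product are all invented for illustration, and no API is called. The second helper is there because a free-text completion still has to be mapped back to a category you actually support.

```python
def build_category_prompt(title, description, categories):
    """Build a prompt asking the model to pick one category for a product."""
    lines = ["Assign the product to one of the following categories:"]
    lines += [f"- {category}" for category in categories]
    lines += ["", f"Title: {title}", f"Description: {description}", "Category:"]
    return "\n".join(lines)


def parse_category(completion, categories):
    """Map raw completion text to a known category, or None if nothing matches."""
    cleaned = completion.strip().lower()
    for category in categories:
        if category.lower() == cleaned:
            return category
    return None


# Hypothetical catalog data, made up for this example.
categories = ["Audiobooks", "Headphones", "E-readers"]
prompt = build_category_prompt(
    "Wireless over-ear headset",
    "Noise-cancelling headset with 30 hours of battery life.",
    categories,
)
print(prompt)
print(parse_category(" headphones\n", categories))
```

Returning `None` for an unrecognized answer is a deliberate choice: given that the output is occasionally off, it seems safer to flag those cases than to accept whatever text comes back.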

There’s one more part of GPT-3 that is more recent and worth calling out: embeddings. Embeddings are, essentially, lists of numbers that represent a piece of text and that programs can use to carry out tasks such as comparing or searching. Instead of the broad, open-ended capabilities of the core API, the embeddings endpoints only support three families of models: text similarity, text search, and code search. The two search families work in much the same way: they embed either a short string (a query) or a longer text (a document or code), so that you can determine whether the text is relevant to the query. The similarity embeddings, meanwhile, determine whether two texts are similar.
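As a rough illustration of how embeddings get used once you have them, the sketch below ranks texts against a query with cosine similarity, a common choice for this kind of comparison. The three-number vectors and their labels are made up for the example (real embeddings are far longer), and nothing here calls the OpenAI API.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors standing in for embeddings; the values are invented.
query = [0.9, 0.1, 0.0]
documents = {
    "audiobook catalog": [0.8, 0.2, 0.1],
    "regex tutorial": [0.1, 0.1, 0.9],
}

# Rank documents by similarity to the query, most similar first.
ranked = sorted(
    documents,
    key=lambda name: cosine_similarity(query, documents[name]),
    reverse=True,
)
print(ranked)
```

The point is that everything after the embedding call is ordinary arithmetic, which is why this endpoint suits engineers who want to build their own search or similarity logic on top.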

The support for direct embeddings bifurcates the possible ICP for the GPT-3 API. The ICP for the embeddings endpoint will be machine learning engineers who want a powerful LLM and are okay with the cost, latency, and lack of fine-tuning available relative to other models.

The ICP for the more “traditional” GPT-3 API is much more difficult to identify. With a model that generalizes so well across tasks and domains, there is no clearly identifiable ICP beyond non-developers. Generally, when a company refuses to take a clear stand on an ICP, it’s because they don’t feel confident in what they’re putting out there and are afraid to commit. I don’t think that’s what OpenAI is doing, and to see why, we should take a look at their positioning.

GPT-3 Positioning

OpenAI co-founders Greg Brockman and Ilya Sutskever wrote a post in March of 2019 about The OpenAI Mission:

Our mission is to ensure that artificial general intelligence (AGI) — which we define as automated systems that outperform humans at most economically valuable work — benefits all of humanity. Today we announced a new legal structure for OpenAI, called OpenAI LP, to better pursue this mission — in particular to raise more capital as we attempt to build safe AGI and distribute its benefits.

Less than a year later, OpenAI reached out to us at Algolia about their upcoming GPT-3 API. Did their mission change in less than a year to one that is instead focused on product? I don’t think so. Or, at the very least, I think they benefit from the impression that it hasn’t.

OpenAI’s home page is sparse.

Below the fold is the footer. That’s all there is.

Just as we asked for Hugging Face: what associations do we make with the home page? For me, I immediately thought of a fine arts museum.

And it’s not like there’s much else on the page to go off of. Let’s come back to the implications of the home page vibe, but not without first looking at the navigation.

We saw with Hugging Face that the problem was a navigation that wanted to make sure you saw everything that they could do, at the cost of not being clear what they stood for.

OpenAI goes in entirely the opposite direction. There are four links in the nav: “API,” “Research,” “Blog,” and “About.” Click on the links for the blog or research and you’re deep in technical details. Click on “About” and you see this:

OpenAI is an AI research and deployment company. Our mission is to ensure that artificial general intelligence benefits all of humanity.

That sums it up, doesn’t it? OpenAI is leaning heavily into its ivory tower image. OpenAI’s not the person who “went to school outside of Boston”; OpenAI is unafraid to wear that Ivy sweater. Indeed, I somewhat suspect that the staff at OpenAI would object to me even critiquing their go-to-market, because they are so clearly beyond that. (I say this with some exaggeration, as the OpenAI employees on the commercial side with whom I’ve interacted have all been top-notch and business-focused.)

Perhaps we should instead be looking at the API page to evaluate OpenAI’s GTM and positioning. I think this would be a fair critique, though we should not discount the halo effect that the API gets from being a part of this “institution.”

Let’s take that challenge, though, and focus more directly on the API homepage.

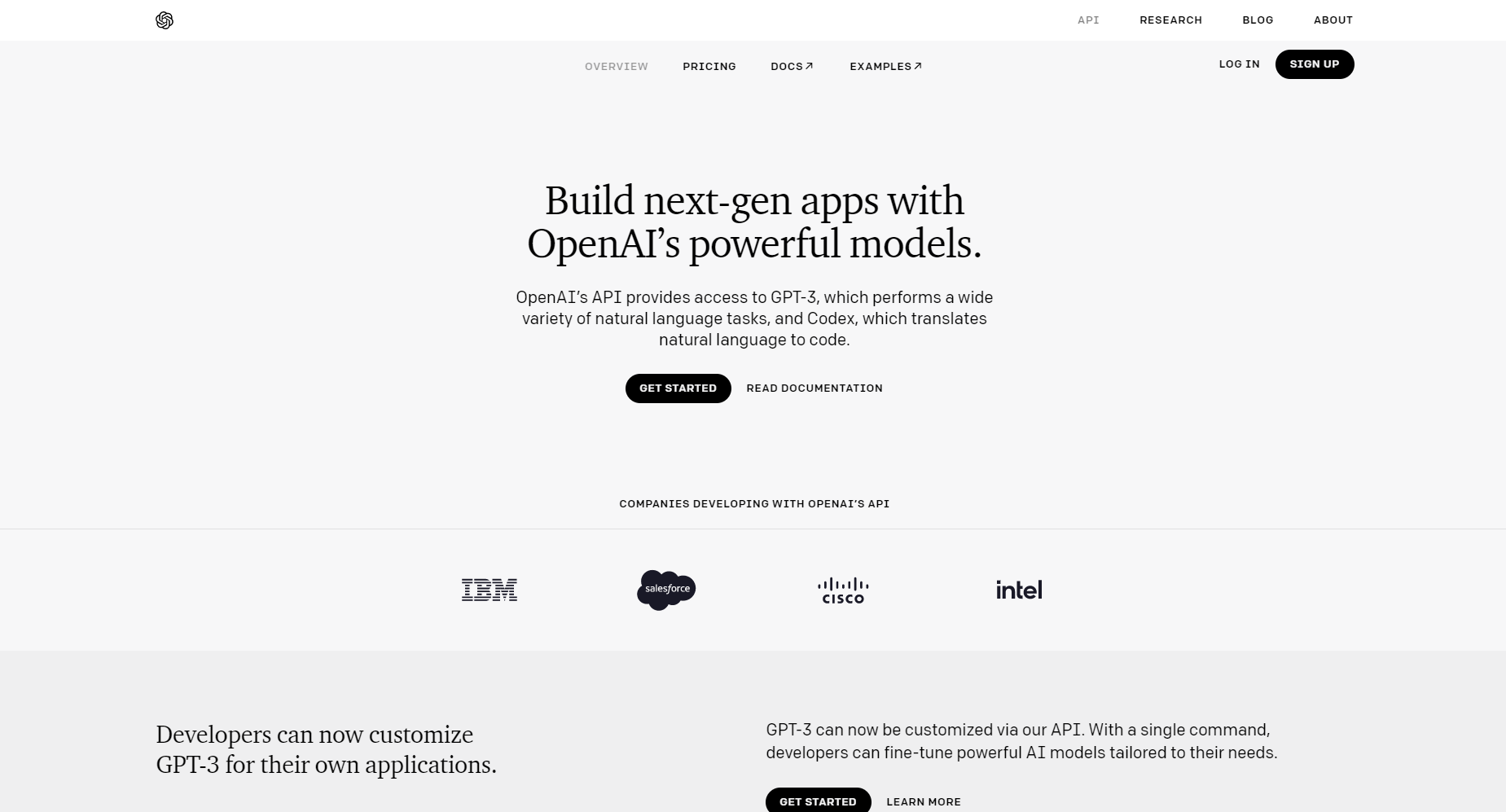

This page looks like a SaaS business page. Customer logos, strong header, call to action smack in the center…

The header gives a hint of the same problem that Hugging Face had: not taking a clear stand on what the product can do. We are told to “build next-gen apps” and that GPT-3 “performs a wide variety of natural language tasks.” This is all true, but doesn’t allow me to start picturing the API in my product. (It does clearly say what Codex does, but again, that’s a niche offering.)

Thankfully, the home page moves away from this shyness below the fold.

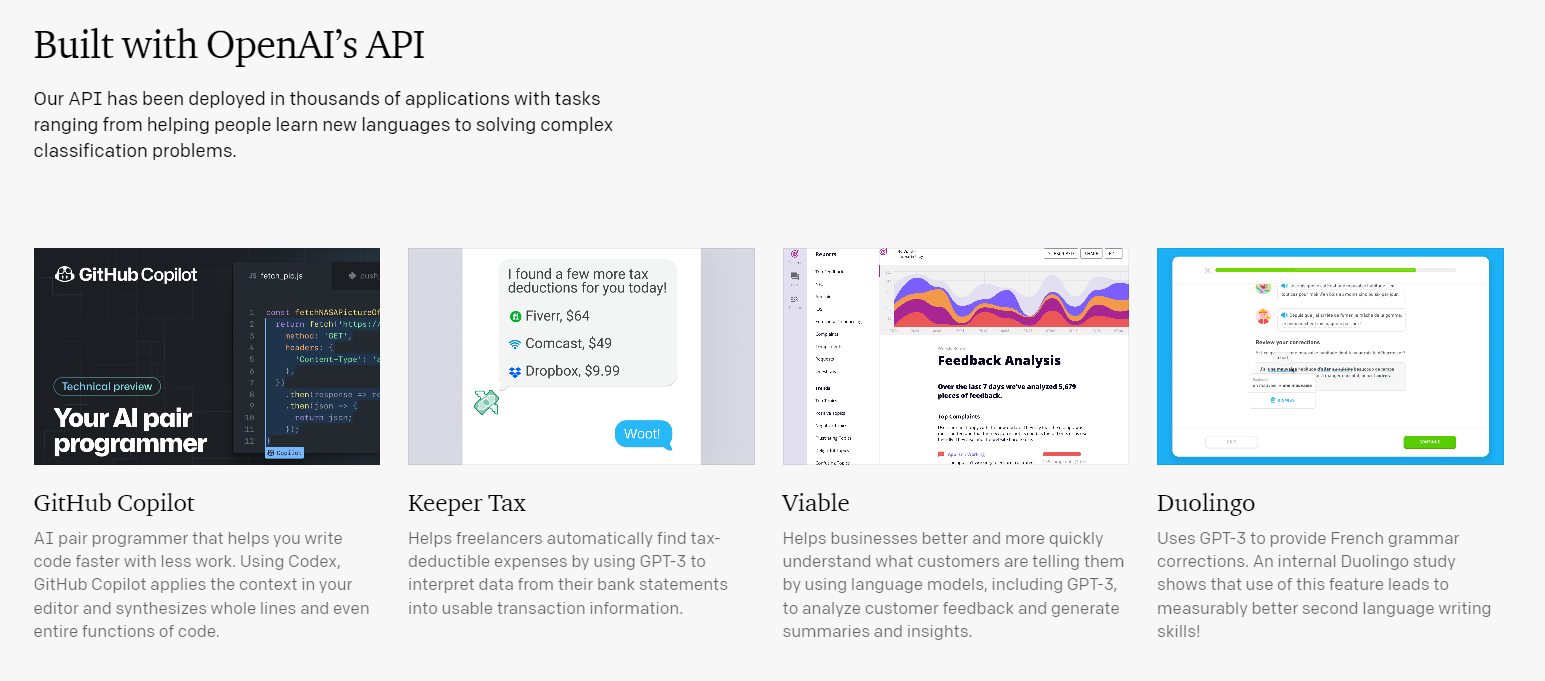

Here we can see examples of copywriting, summarization, classification, and others. There’s even a link that takes you to a page showing 49 examples of what the API can do. And then below are four examples of what customers have built with the API.

Are 49 examples too much? Generally I’d say yes, but I think it works here. I think this is another example of OpenAI playing up its “path to AGI” and saying, “GPT-3 is so powerful that it can do all of these things” and not “GPT-3 does all of these things because we didn’t focus and do any of them well.”

The rest of the page is pretty standard. A code snippet, and a callout to being fast, scalable, and flexible. This area is the weakest of the page, by far, as it doesn’t really say anything about the OpenAI API that any other company wouldn’t also say about its offering.

There is also a section about OpenAI’s ethical stand. I doubt there’s anyone who would not have paid for the API if this weren’t present, but I get why OpenAI is giving it space here rather than leaving it to the about page or the docs.

The API homepage doesn’t link off to much, but one page that it does link to is for pricing, which is a good next place to look.

OpenAI API Pricing

OpenAI’s pricing is known for one thing above all: it’s expensive. It’s first tiered per model. (GPT-3 comes in four different flavors, with Ada being the least intelligent but fastest and cheapest, and Davinci the slowest, most expensive, and most intelligent.) Then there’s pricing for the base models, fine-tuned models, and for embeddings.

The pricing is based on the number of tokens used. A token is more or less a word, although there’s not a perfect 1-to-1 alignment. Prices on the base models range from $0.0008 per 1,000 tokens on Ada to $0.06 per 1,000 tokens on Davinci.

To give an idea what this all comes out to as a cost, there’s an example on the API homepage on parsing unstructured text. That example would cost about a cent to run once.
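The arithmetic behind a per-request estimate is simple enough to sketch. The two prices below are the base-model figures quoted above; the token count is an invented example rather than a measurement, and `request_cost` is a hypothetical helper, not part of any OpenAI library.

```python
# Base-model prices quoted above, in USD per 1,000 tokens.
PRICE_PER_1K_TOKENS = {"ada": 0.0008, "davinci": 0.06}


def request_cost(model, tokens):
    """Estimate the cost in USD of one request using `tokens` tokens in total."""
    return PRICE_PER_1K_TOKENS[model] * tokens / 1000


# Hypothetical request: 170 tokens of prompt plus completion.
tokens = 170
for model in PRICE_PER_1K_TOKENS:
    print(f"{model}: ${request_cost(model, tokens):.5f}")
```

At Davinci’s rate, a request of that size comes to roughly a cent, while the same request on Ada costs a small fraction of that. The per-request number looks tiny until you multiply it by query volume.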

The price goes up if you’re using fine-tuned models or embeddings. I think the idea with either of them is that you’ll use fewer tokens overall, especially with the fine-tuned models, but the overall cost is certain to be higher.

For us at Algolia, this was one of the most stressful things about using the OpenAI API. We started using the API well before pricing was announced, and when I finally saw what OpenAI put forward, I don’t think I slept well for a week. Our per-query cost would have been nearly $0.25! We did a lot of work to get that down to a point where we could charge our customers $0.01 on average, but it wasn’t easy.

This pricing further reduces the serviceable addressable market (SAM) for the API. Very few products can absorb that cost and be successful, and even fewer can do so before they’ve reached product-market fit. OpenAI is helping select companies in this latter scenario with funding, but still…

Part of me is, again, wondering if the pricing is a way to seem aloof and give an air of luxury, like a designer handbag that costs an average family’s monthly income. I’m wondering, and then I stop, because that would be too clever by half. I think the pricing is the way it is because OpenAI’s costs are high. Even if we take OpenAI’s messaging at face value that their ultimate goal is to develop AGI, they benefit from having as many people as possible using and building on top of their technology, as each new use case can push the technology further.

So what to do?

OpenAI is doing a lot of things well, and they benefit from the image they’re creating of themselves as highly educated ivory tower geniuses. Still, I think they would benefit from coming down to the common people some more and adding some product marketing.

What I have in mind is educating people better on how to use GPT-3 and build products with it. Things like webinars and case studies are boring, but they work. OpenAI is hiring for a Developer Advocate, who can do some of this, so that may be one way to go. Another might actually be to offload the stodgy business stuff to Microsoft, which will want to get people to use GPT-3 on Azure and which has this muscle already.

I like OpenAI, and I’m long on them. I had fun thinking about their GTM and positioning, because they do a lot of things that are not best practices, but that I think really work for them. Will they continue taking the high road to increased revenue? We’ll see.