Looking at Hugging Face's Go-to-Market and Product Positioning

Okay, it’s not a surprise that ML is huge huge huge, and companies that offer ML services are bringing in similarly huge attention and valuations. There are a few that seem to overlap a bit, at least in the eyes of investors and customers. The ones worth examining are OpenAI, Hugging Face, Cohere, and AI21 Labs. Through Algolia, I’ve been on teams that have been customers of OpenAI, and that have evaluated Hugging Face (many times), Cohere, and AI21. It’s been a good opportunity to see the different approaches each is taking with its go-to-market.

In this post, we’re going to look at Hugging Face’s product offerings and positioning. We’ll see a company that appears to still be figuring out who it is, though it clearly knows who it’s for.

Hugging Face

Hugging Face has been attracting attention, first thanks to their open source transformers library, and then due to their business and fundraising, such as their $40 million raise in March of 2021, following $15 million in late 2019.

Hugging Face’s go-to-market is… scattershot. Let’s take a look at their homepage and see if we can figure out what they’re selling and whom they’re targeting.

Let’s start with the emoji and header. This layout is very reminiscent of other open source landing pages: compare what you see above to the landing pages for spaCy, Next.js, React, Vue, Keras, or Babel. (Interestingly, PyTorch and TensorFlow have homepages that are much more “enterprise” in style.) The heading says that Hugging Face is “The AI community building the future” and the menu items let us jump immediately into Models, Datasets, something called Spaces, Docs, and more.

This is clearly a developer-oriented company. The problem, though, is that it’s not at all clear from the messaging that it is a company. If what you decide to put above the fold on your homepage shows how you think about your organization, then Hugging Face appears to think of itself foremost as an open source collection of datasets and models that enables ML developers to share with other developers. Add on to this the “Spaces” feature (product?) that Hugging Face rolled out recently, and Hugging Face looks to be a community above all else.

This clashes with the $55 million raised in roughly a year and a half, though. There’s got to be some business here, and we can see how they’re looking to bring in money under the Solutions menu option.

This is where we come back to that descriptor again: scattershot. Hugging Face offers six (6!) different products: paid expert support, a hosted inference API, AutoNLP, Infinity, dedicated hardware, and a platform. Perhaps in looking more at the offerings we’ll see that there’s significant overlap here, simply repackaged for different target audiences.

Paid Expert Support

First up is the paid support, or “Expert Acceleration Program.” This is, essentially, consulting. The unique aspect of this paid support program is that Hugging Face is leveraging non-employees as consultants, namely people who have made significant contributions in ML. With these consultants, companies can work through challenges around fine-tuning, latency, go-to-production, bias, and other topics.

The argument seems to be: “machine learning is difficult and hard to understand, we have experts who understand it and can guide you in your project.” I wonder how much of the reasoning behind offering paid consulting is due to prospective customer feedback. I could see that, in conversations with these companies, Hugging Face has heard that ML seems interesting, the prospect would like to explore it, but they simply don’t have the team internally and can’t justify building a team right now. And, yet, that reasoning doesn’t hold up when you read the offering messaging and notice that the program doesn’t offer implementation, but only guidance. These companies would still need an ML team to manage the implementation.

What’s the TAM for this kind of service? Let’s break down who might be interested. First, a company will need to believe that ML is fundamental to its product. It will need to already have an ML team on staff for implementation and management, and also believe that the team is not capable of bringing its project to production without assistance. Alternatively, the team could have brought the project to production, but need assistance with tuning, reducing latency, or other topics. This market has got to be incredibly small.

The vast majority of companies (we can reasonably say 99% of all companies) are going to be ML-curious at best, and the ones who are fully in on ML will be more likely to want their in-house team to have all of the skills necessary and not look elsewhere. So you’re looking at a small sliver of companies who want to implement or optimize an ML project and, within that, another small sliver who want temporary, outside assistance. Interestingly, Algolia (where I work and where my team has evaluated Hugging Face offerings) could potentially fall within that sliver of a sliver. But we’re unique.

(Another possible target audience could be development agencies who have an ML offering but need assistance for a specialized project. But, again, it’s a small audience.)

If we assume that there are customers who would be willing to pay for paid support, then Hugging Face is running into another issue: professional services is not recurring revenue. For a technology company that has raised the kind of money that Hugging Face has, recurring revenue is key, otherwise you’ve got an annual slog at reupping those services contracts. For the kind of paid support that Hugging Face is offering, whether those contracts renew easily is hard to say. You could say that customers will generally renew because ML is progressing quickly, and every year will bring new challenges. You could also say that customers are paying for premium support for one-off problems. Likely, it’s a mixture of both.

One case where a professional support offering does make sense is when it is tied closely to an offering that does provide true recurring revenue. This is why we see variation in the percentage of revenue that comes from professional services at different SaaS companies. A company like Appian could have 50% of its revenue coming from services, because those services were tied closely to its platform. Then it’s easier to make a shift in strategy, as Appian has done over the past couple of years, and move toward a larger percentage of revenue coming from the product itself.

This approach can be thought of as “professional services as customer acquisition cost.” This is difficult, though not impossible, for Hugging Face to do, as they offer their open source library in addition to paid offerings. Indeed, the landing page for the paid support specifically is directed toward companies not using the Hugging Face hosted inference API or containerized offering (both of which I look at more below). If Hugging Face can use paid support to move customers over to one of these other offerings, then it starts to look more strategic.

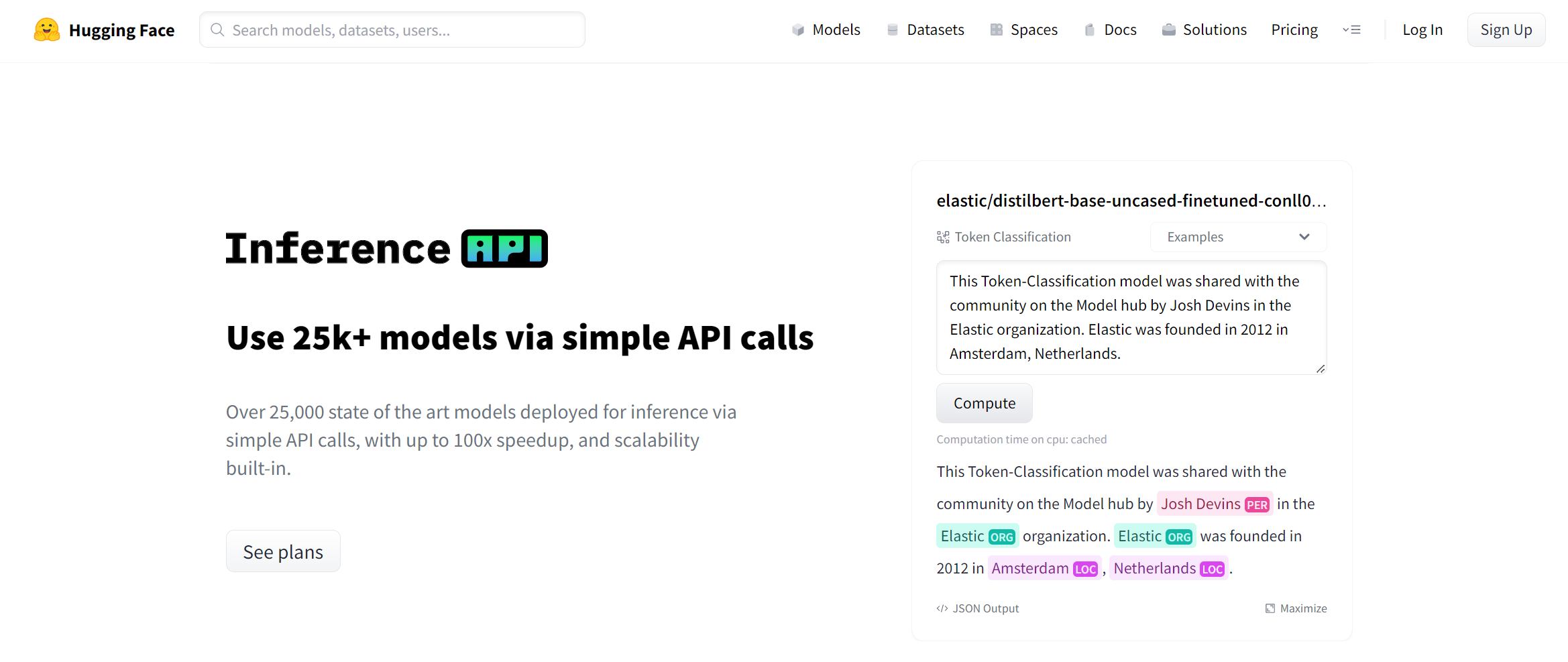

Hosted Inference API

One of those offerings to which customers can move over is the hosted inference API, which is an API hosted by Hugging Face where customers can host models and call them at inference time. Hugging Face claims more than 25,000 models are available, with optimizations to latency, scalability, and SLAs in place. The value proposition is: don’t worry about infrastructure, we’ll take care of it.

This is the product that I think has the most promise for Hugging Face, and the one that should be their core focus.

The marketing copy for the API gets the value proposition across well. The first subheader is “Plug & Play Machine Learning” and further down the page we’ve got “Let us do the machine learning.” The one critique I have is that this isn’t even higher up on the page. The primary header of “Use 25k+ models via simple API calls” doesn’t take a strong enough point of view.

Just like we did with the premium support product, let’s examine the market and see why the API is their most promising offering, and the one they are most under-promoting.

The market for a hosted, ML-driven API is enormous. I had a conversation a while back with a former colleague who was asking about Hugging Face specifically. I don’t remember exactly what he needed to do—some NLP task—but what’s important is that he doesn’t work at a technology company, he works at a technology-enabled company. That is to say that his company doesn’t sell technology and doesn’t do R&D. They have a dev team of maybe a dozen people, and aren’t even going to hire an ML team.

The Hugging Face API can power tasks for companies like my former colleague’s. These companies will have needs for certain ML tasks, and are looking for ways to run them easily. The size of this market is nearly every company with a dev team, minus those large enough or edge-of-tech enough to have a team that can train, host, and manage models in-house. Unlike the professional services offering, the TAM for the hosted inference API starts off huge.

Hugging Face can capture these companies by leaning heavily into the “Let us do the machine learning” messaging, and by building the product for the developer and the product manager who just want to accomplish a task. The tasks here are ones of product and product marketing.

On the product side, the API needs to be friendly to the developer and the PM. The developer is pretty well served as it is: the API is simple to use and integrate. There could be some improvements on developer experience (e.g. client libraries), but they’ve got a decent start. The PM persona could be better served by better reporting and insights. Right now these features are bare-bones, focusing primarily on cost management. Both PMs and devs would be aided by better reporting on trends, detailed usage, and more detailed statistics.
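
To make “simple to use and integrate” concrete, here’s a minimal sketch of what a call might look like from Python, using only the standard library. The endpoint URL shape, the `{"inputs": ...}` payload, and the bearer-token header reflect my understanding of the hosted inference API and should be treated as assumptions to check against the current docs. The function names, the model id, and the token in the usage note are hypothetical placeholders.

```python
import json
import urllib.request

# Assumed base URL for the hosted inference API; verify against current docs.
API_BASE = "https://api-inference.huggingface.co/models"


def build_inference_request(model_id: str, text: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for a single hosted model."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/{model_id}",
        data=payload,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def run_inference(request: urllib.request.Request):
    """Send the request and decode the JSON response (needs network and a valid token)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

A caller would do something like `run_inference(build_inference_request("some-org/some-model", "I love this product", "hf_xxx"))`. That’s roughly the whole integration, which is the point: no GPUs to provision and no model weights to manage.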

Product Marketing and Positioning

The product marketing and positioning side is where Hugging Face is really falling short.

I must first point out that they are doing very well in communicating the value proposition to developers. Hugging Face is competing, in many ways, with Hugging Face itself. The hosted inference API is competing with the Hugging Face open source library. By leaning heavily into communicating the values of letting someone else own the infrastructure and the improved latency and throughput, Hugging Face the API is providing a strong rebuttal against using Hugging Face the library.

I say this, of course, a bit hypocritically. We explored the inference API at Algolia when building a feature that we called semantic highlighting. In the end, we decided not to go with the inference API even though its strengths were appealing. We decided instead to use the library and host it ourselves because it worked well enough, and we wouldn’t have to go through the legal and security review process to introduce a new data processor. So in this case we saw the unspoken side of “Implement and iterate in no time,” which is “no time… unless you have to go through a lengthy review process.”

The issue with the messaging working so well with developers is that Hugging Face’s mindshare is already very high among developers, and the need is instead to make product builders think, “we can use ML for this.” The people who most need to be convinced about this are not the devs, but the non-technical people. Hugging Face should be leaning into this heavily. They try it some on the landing page for the API, but they do it halfway.

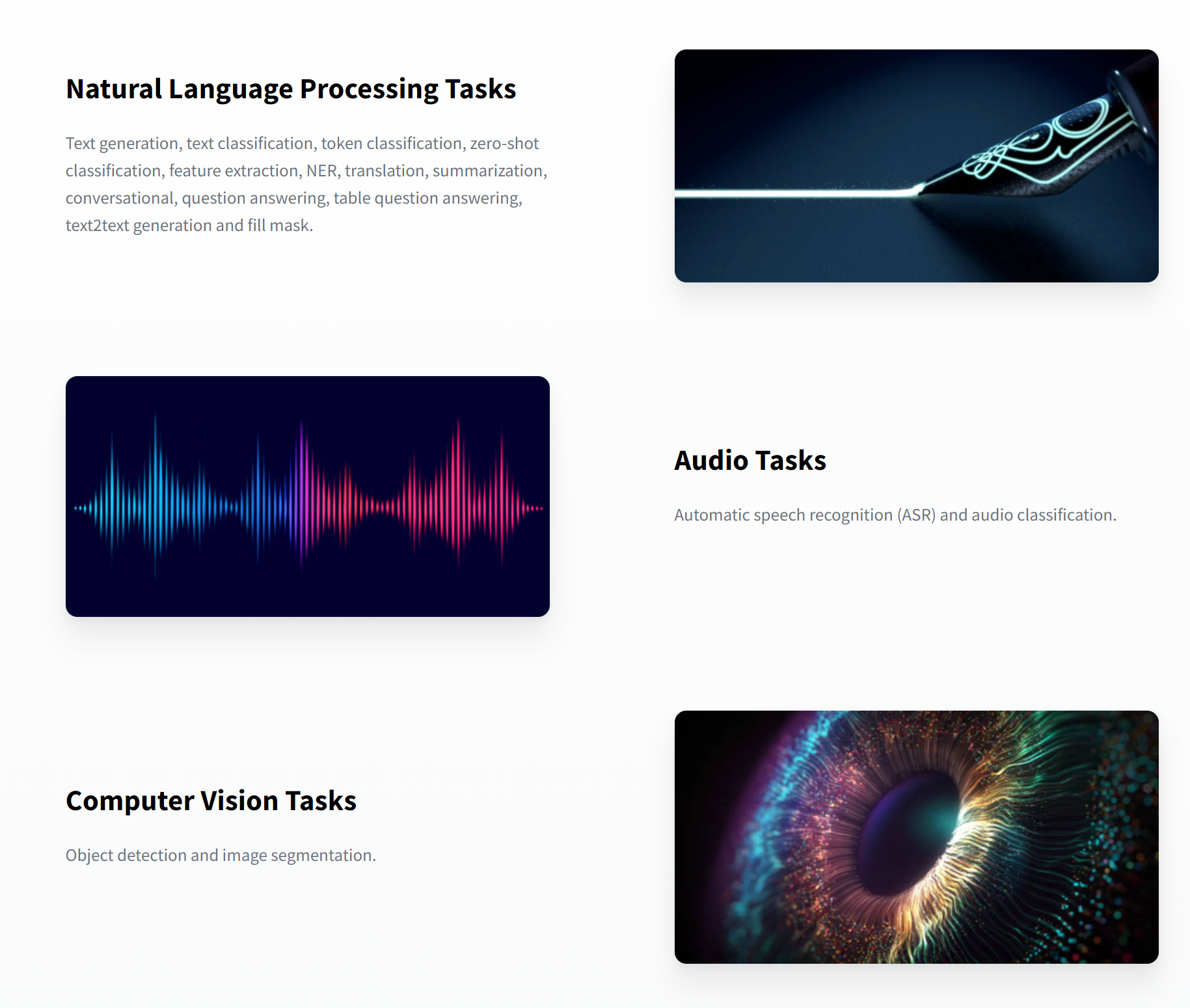

This section needs to be entirely replaced. These are all developer terms. The non-technical members of a company need to know exactly what the Hugging Face hosted inference API can do to achieve their goals. Don’t talk about token classification, talk about identifying locations in text. Don’t talk about table question answering, talk about finding insights in financial reports.

Take a look at the Hugging Face blog or newsletter right now, and you’ll see that the content is almost exclusively for developers. Hugging Face needs to, somehow, introduce more content for the PMs, the Marketing Managers, and others who have an outsized influence not just on the buying decisions, but on the decisions to even look for a technology to buy. Hugging Face needs to drive hard exactly what their technology can enable.

By putting together content geared towards use cases, Hugging Face gets multiple benefits. The first benefit is of expanding the market. Hugging Face is among the leaders for developers when it comes to ML tooling, and it wouldn’t be difficult to become the leader in terms of talking about all that ML can do for businesses. If we think of what OpenAI has managed to do, the difference is clear. OpenAI is no less technical than Hugging Face, and yet with just a single model offering they were able to get non-technical people talking about it and imagining new use cases. I have heard tons of non-technical people talking about GPT-3, and very few talking about Hugging Face. OpenAI is getting people thinking about what ML can do in the next five years, and as such has become synonymous with “powerful ML offering.”

Another benefit of leaning hard into use case messaging is that it will be easier to expand existing contracts. Once Hugging Face illustrates what object detection can do for a business’s bottom line, a customer who has so far used Hugging Face only for translation can see opportunities to use an existing provider for more parts of their business. Hugging Face can provide multiple building blocks for companies to use in building their products. You see this a lot in e-commerce, with the rise of the term composable commerce.

The challenge here will be that Hugging Face will—somewhat—expand the number of competitors and it doesn’t line up well against any one individual competitor. It will compete against Amazon, Google, Microsoft, and IBM in domains like image recognition, NLP, and text summarization. These platforms all are likely to already be in a company’s stack, and provide much better tools for non-developers to fine-tune the services. There are yet more players, conversely, who are focused specifically on one use case, such as speech recognition.

Hugging Face can combat these competitors by focusing on these value propositions: targeted models, speed/latency, and speed to implementation. Additionally, Hugging Face’s competitors are big, and move slowly. In ML, this is a negative, because models are sometimes surpassed within a year. Hugging Face is adding new models continually, so it can offer its customers continued access to the best-performing models.

Finally, this use case expansion should not just be about messaging on landing pages. Hugging Face has an opportunity here to use education to bring in new buyers. Hugging Face is, incidentally, increasing its educational reach, but again the focus is on developers. They have an opportunity to address non-developers about what ML can do, and investing in content around that topic could work. “What ML can do for legal teams,” “Natural Language Processing for eCommerce,” and other articles sound cheesy to the developer’s ear, but they work.

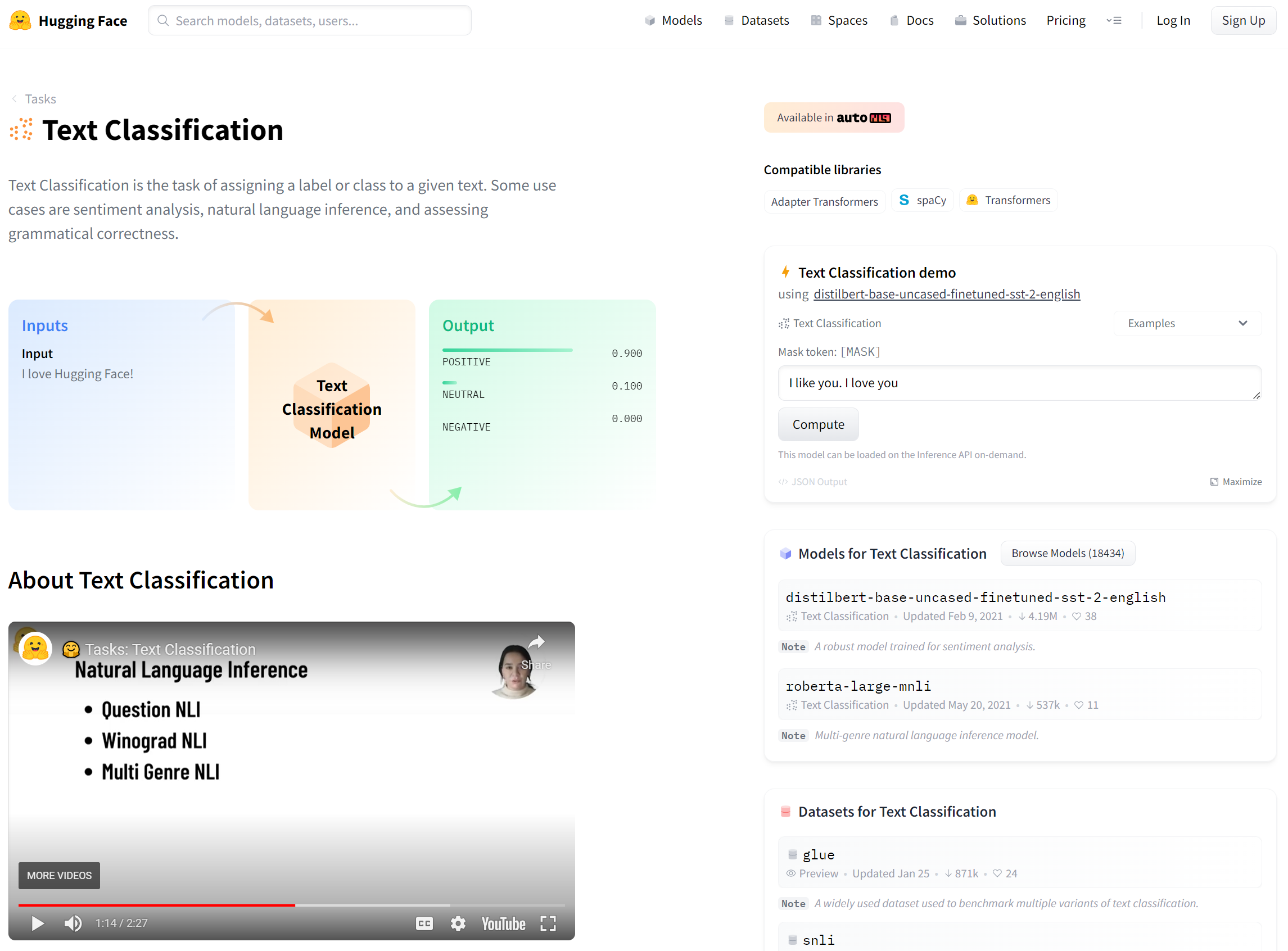

There’s a base here to work off of. Hugging Face has a tasks section which addresses what different models can do, but once again the target is developer-heavy. Great for an open source library, less so for a SaaS offering.

What has a business used text classification to do? What have been the business outcomes? Bonus points if there’s data, but even that’s not necessary right now. They should downplay terms like “entailment,” or “multi-genre natural language inference model.” Save that for the developer docs, and use this section to make the business case for handing over money.

AutoTrain (formerly AutoNLP), Infinity, and Hardware

These next three offerings are very “you clearly need it, or you clearly don’t” products. AutoNLP (since renamed AutoTrain) automatically trains and hosts ML models. Infinity is an optimized container offering. Hardware is what it sounds like.

AutoTrain

AutoTrain is the most interesting of the three. Most ML engineers with whom I’ve spoken don’t see much of a benefit to the service, but I think there is something here, even if the target market is still fairly small.

What limits the market for AutoTrain is that many of the difficulties of ML are still present, so the audience is, again, generally highly skilled engineers.

Gathering data is among the most difficult parts of ML. Maybe not the most technically difficult, but practically difficult. There’s nearly always a “cold start problem” (starting a project with little data with which to train the model) when creating new models. Another data-related difficulty is in selecting the features. Neither of these problems appears to be solved by AutoTrain.

One major benefit of AutoTrain is that it “will find the best models for [the] data automatically.” This is, clearly, a very nice benefit. There are nearly 40,000 models on Hugging Face’s model hub. Even narrowing down to English question answering brings back 132 results. 453 for English text classification. Having a service that will select which of these models will perform best on the task is nice. Then again, most will only select a model once, and so this is not an ongoing benefit.

Again, I’m wondering what AutoTrain enables me to do that I can’t do elsewhere. If we compare AutoTrain to a competitor, Google’s AutoML, the difference in positioning is clear. AutoML talks about how I can “Reveal the structure and meaning of text through machine learning” or “Derive insights from object detection and image classification.” Compare the customer stories, too. Google shows us that California Design Den is able to better see how individual products perform, which led to a “50% reduction in inventory carryovers” (use case and benefit). I don’t know what an inventory carryover is, but I know what a 50% reduction means and I like it. Hugging Face, meanwhile, tells me that AutoTrain can “[Democratize] NLP” for “non-researchers.” That last part is nice, but I’m still no closer to understanding how it can fit into my business if I didn’t already.

I also might wonder, if I’m a potential Hugging Face customer, how AutoTrain and Paid Expert Support co-exist. Recall: Paid Expert Support’s value proposition was that ML is hard, and you need to hire someone to help. AutoTrain’s value proposition is that ML is no longer hard. Do I turn to Paid Expert Support only for those lingering challenges of data collection and feature selection? Or is the continued existence of Paid Expert Support a sign that AutoTrain isn’t really “solving” ML for non-ML engineers?

Infinity and Hardware

I won’t spend much time on these two, because they are, again, something you either clearly need or clearly don’t. They don’t expand the market for ML, and that’s okay. The one thing I’ll say here is that I’m somewhat surprised that Infinity is not more prominent in Hugging Face’s messaging. Infinity is containerized ML that purports to deliver 1ms latency. This is impressive!

If I had to guess why Infinity’s not more prominent, it’s because the market for the offering is fairly small. There are few companies that really need real-time inference, and those that do need it also need high throughput. You don’t see it on the page, but we learned in our conversations with Hugging Face (and you can also see in the Otto customer video on the Infinity page) that Infinity does not support batching of requests or multi-GPU. Customers who don’t need that kind of throughput will probably be okay with longer inference times, too. I would expect that, if Hugging Face can improve throughput, this will be a more interesting product.

Who is Hugging Face’s ICP?

Having looked at Hugging Face’s offerings, positioning, and go-to-market, I think it’s clear that they are targeting ML developers. And it’s working: Hugging Face has amazing name recognition within that crowd. However, there’s also a bit of a scattershot approach to how they position themselves and their paid offerings. Is Hugging Face a community, a hosted solution, or infrastructure? Right now, they’re all of the above.

Additionally, with six paid offerings, we don’t have the same product repackaged, but different offerings, which means different sales motions, different marketing efforts, different buyers, etc. This may very well work in the long term, but at the very least there needs to be a better story of when people should become Hugging Face customers and how they grow from small-time customers to larger ones. That story isn’t there just yet.

The product that Hugging Face offers now that shows the most promise is the Hosted Inference API. However, to reach the broadest possible market, Hugging Face needs to expand beyond ML engineers to others in tech organizations. If Hugging Face can show Product Managers or CMOs what they can do with Hugging Face’s technology, and how it can help their businesses, Hugging Face has an opportunity to land and expand, by slowly adding use cases within organizations and growing their engagements over time.

I’m really interested to see how this shakes out over the next few years. Investors clearly have faith in the Hugging Face team, but we’ve seen open source driven businesses falter before. Will Hugging Face do the same? 🤷‍♂️