AWS re:Invent 2018 Day Two Recap

Here are notes from day two of AWS re:Invent 2018. If you missed day one, you can read those notes here. Want to continue the conversation? Reach out at dustin@dcoates.com or on Twitter.

How to Train Your Alexa Skill Language Model Using Machine Learning

The first session of day two was from Fawn Draucker and Nate LaFave from the Alexa team on improving spoken language understanding inside of skills. What I can say about this session is that it was 300 level, but it should have probably been 400 level. This is a compliment.

Spoken language understanding (SLU) is, essentially, automatic speech recognition (ASR) plus natural language understanding (NLU). SLU also includes wake word monitoring, slot detection, and dialog management.

ASR is further broken down into acoustic modeling and language modeling. Acoustic modeling is taking the sound waves and transforming them into word representations. Language modeling can be generally thought of as phrase detection. It takes a probabilistic approach and says, “knowing what I know about the acoustics and how people speak, what is the most likely next word?” Draucker gave the example of, “can you hold my…” and then either pin or pen. Some people pronounce these two words differently, but others (including my fellow Texans) do not. They both sound like pin. Language modeling would try to figure out which one is most likely based on the signals and the speech corpus it’s already examined.
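To make the pin/pen example concrete, here is a minimal sketch of the idea behind language modeling: count which word tends to follow which, then pick the likelier candidate. The corpus, the function names, and the bigram approach itself are my own illustration, not what Alexa actually runs.

```python
from collections import Counter

# Tiny made-up corpus standing in for the speech data a real model learns from.
CORPUS = [
    "can you hold my pen",
    "hold my pen for a second",
    "hand me my pen please",
    "can you hold my pin",
]


def bigram_counts(sentences):
    """Count how often each word directly follows another word."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        counts.update(zip(words, words[1:]))
    return counts


def pick_word(previous_word, candidates, counts):
    """Choose the candidate seen most often after previous_word."""
    return max(candidates, key=lambda word: counts[(previous_word, word)])


counts = bigram_counts(CORPUS)
# The acoustics can't separate "pin" from "pen", so the counts decide.
best = pick_word("my", ["pin", "pen"], counts)
print(best)
```

With this toy corpus, "pen" follows "my" more often than "pin" does, so "pen" wins. A real language model weighs far more context than one preceding word.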

To make the ASR successful, developers need to:

- Be *a*daptable (adapt interaction schema to what users are saying)

- Be *s*imple (especially with invocation names, don’t use made-up words)

- Be *r*elevant (nothing irrelevant to the skill in the interaction schema)

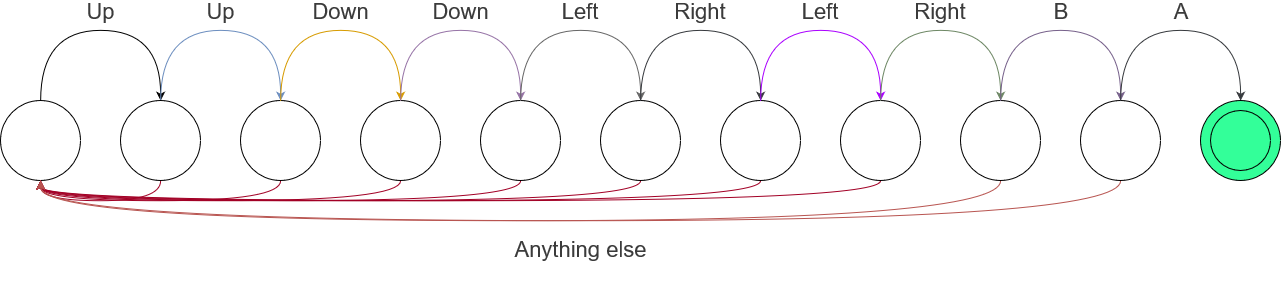

NLU comes in two flavors: rule-based, built on finite state transducers (FST), or statistical. Draucker and LaFave didn’t go into what FST are, but they’re important to understanding what Alexa is doing when it gets an utterance and needs to map it to an intent. You may have seen finite state automata (FSA) before, but even if you don’t recognize the term, you’re familiar with the concept. An FSA takes an input and determines whether that input matches the rules within the FSA. An FSA could ingest up-up-down-down-left-right-left-right-b-a one command at a time and, at the end, determine whether the player earned the power-ups or not.
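The cheat code example can be sketched as a small acceptor. This is my own illustration of an FSA, with the state tracked as "how many commands have matched so far"; the names are made up for the example.

```python
KONAMI = ["up", "up", "down", "down", "left", "right", "left", "right", "b", "a"]


def accepts(commands):
    """Return True only if the commands spell out the code exactly."""
    state = 0  # number of commands of the code matched so far
    for command in commands:
        if state < len(KONAMI) and command == KONAMI[state]:
            state += 1  # follow the one allowed transition
        else:
            return False  # no transition for this input: reject
    return state == len(KONAMI)  # accept only when we end in the final state


print(accepts(KONAMI))
print(accepts(["up", "down", "b", "a"]))
```

The first call ends in the accepting state and the second is rejected at the second command. Note that the machine only answers yes or no; it doesn't produce anything else.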

An FST, meanwhile, not only matches, but also produces output. (In CS-speak, it has “two tapes.”) A very good explanation of FST is here, incidentally from another Amazon engineer, Nade Sritanyaratana. The idea is that as the machine reads input word-by-word, it spits out an annotation. This can range from something as simple as translation to something as complex as NLP. FST are composable: you can build one FST by combining others. Quoting Sritanyaratana:

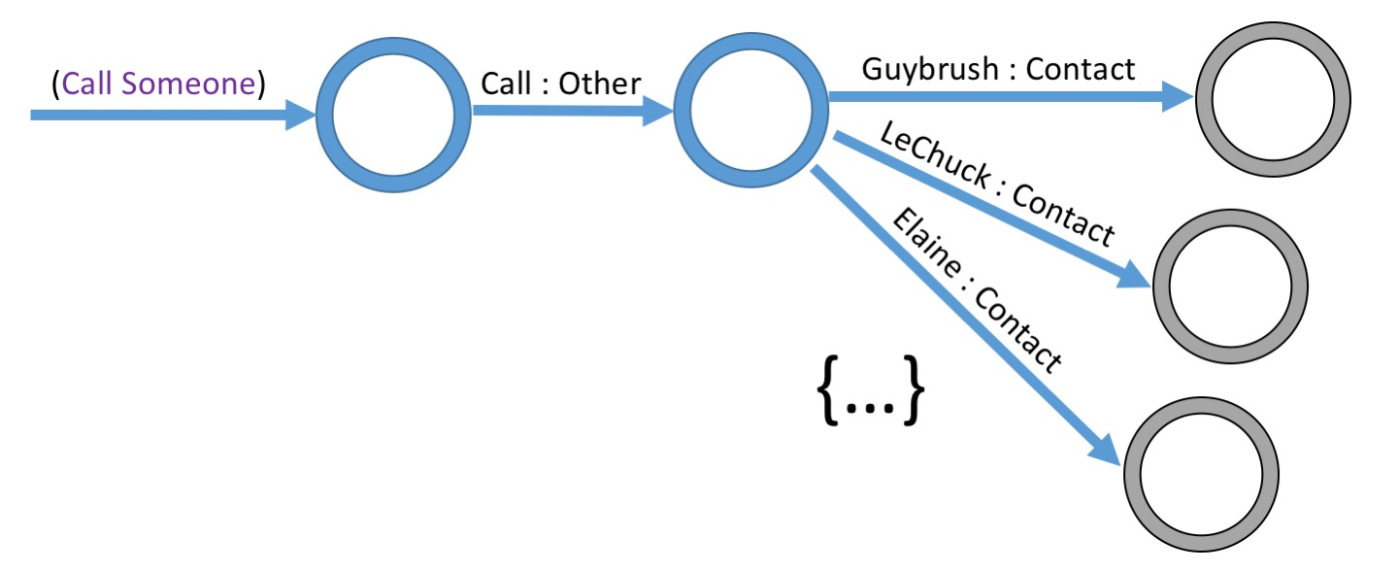

We start this FST by branching out to different commands, like “Call Someone”, “Get the Weather”, or “Nickname Myself”. We use the empty label epsilon (ε) to branch out, which is kind of like expressing, “Any of these commands are possible, from the start of the interaction, before the user says anything”. Let’s look at what an FST to “Call Someone” might look like:

Multiple contacts, like LeChuck and Elaine, would branch out to different end states.
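Here is a rough sketch of that “Call Someone” machine. It reads one word at a time and emits an annotation on each transition, which is the part an FSA lacks. The state names and annotation format are invented for the example, and I’ve left out the epsilon branching to keep it short.

```python
# (current state, input word) -> (next state, output annotation)
TRANSITIONS = {
    ("start", "call"): ("need_contact", "intent=CallSomeone"),
    ("need_contact", "lechuck"): ("done_lechuck", "contact=LeChuck"),
    ("need_contact", "elaine"): ("done_elaine", "contact=Elaine"),
}
# Each contact finishes in its own end state.
END_STATES = {"done_lechuck", "done_elaine"}


def transduce(utterance):
    """Return the annotations for an utterance, or None if it doesn't match."""
    state, output = "start", []
    for word in utterance.lower().split():
        key = (state, word)
        if key not in TRANSITIONS:
            return None  # no rule covers this word in this state
        state, annotation = TRANSITIONS[key]
        output.append(annotation)
    return output if state in END_STATES else None


print(transduce("Call LeChuck"))
print(transduce("call"))
```

“Call LeChuck” comes back annotated with an intent and a contact, while a bare “call” stops short of an end state and yields no match.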

Alexa will build rules (or FST) from the sample utterances and slot values you provide in the interaction schema. It will then compare what the user says (or, precisely, what the ASR understood) to match an intent following those steps. That’s the main difference between an FSA and an FST. The FSA requires an exact rule match, while there’s some expanse in the FST. The thing about an FSA is that it’s not always going to finish at an acceptable “end state.” There needs to be a threshold of the match.

That’s where the statistical model comes in. The statistical model will take a fuzzier view of matching utterance to intent. Because it’s fuzzier, it’s less accurate, and it’s more difficult to guess which intent Alexa will match. Even more, there are multiple models: one based on your skill, one on all skills, and one on Alexa broadly.

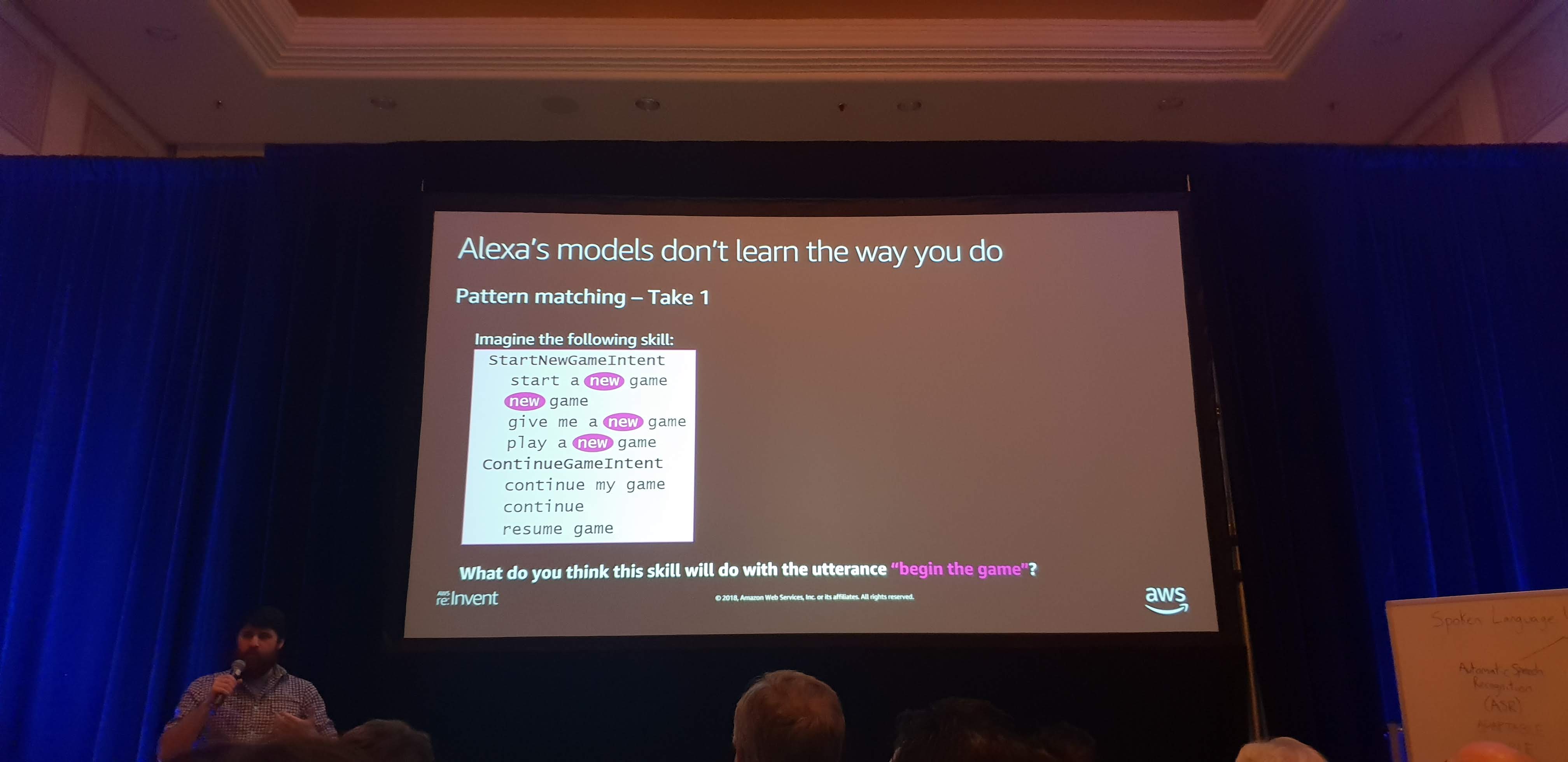

Take a situation where a user says “begin the game” and there are two intents, one for starting and one for continuing the game. You think that Alexa will perhaps match on the StartNewGameIntent because “begin” is a synonym of “start.” Yet that’s not necessarily what happens. The statistical model is looking for patterns, and when it sees that “new” is in every utterance for an intent, it assumes that a user’s request needs to say “new” as well. (In practice, this might be different. A basic example like this is going to bias the model against matching any utterance that doesn’t have “new,” but in reality there might be enough other data that makes “begin” and “start” comparable.) You need to have good coverage of what people are going to say: lots of realistic utterances, without adding utterances on the off-off-off chance that someone might one day say them, because that can create ambiguity.

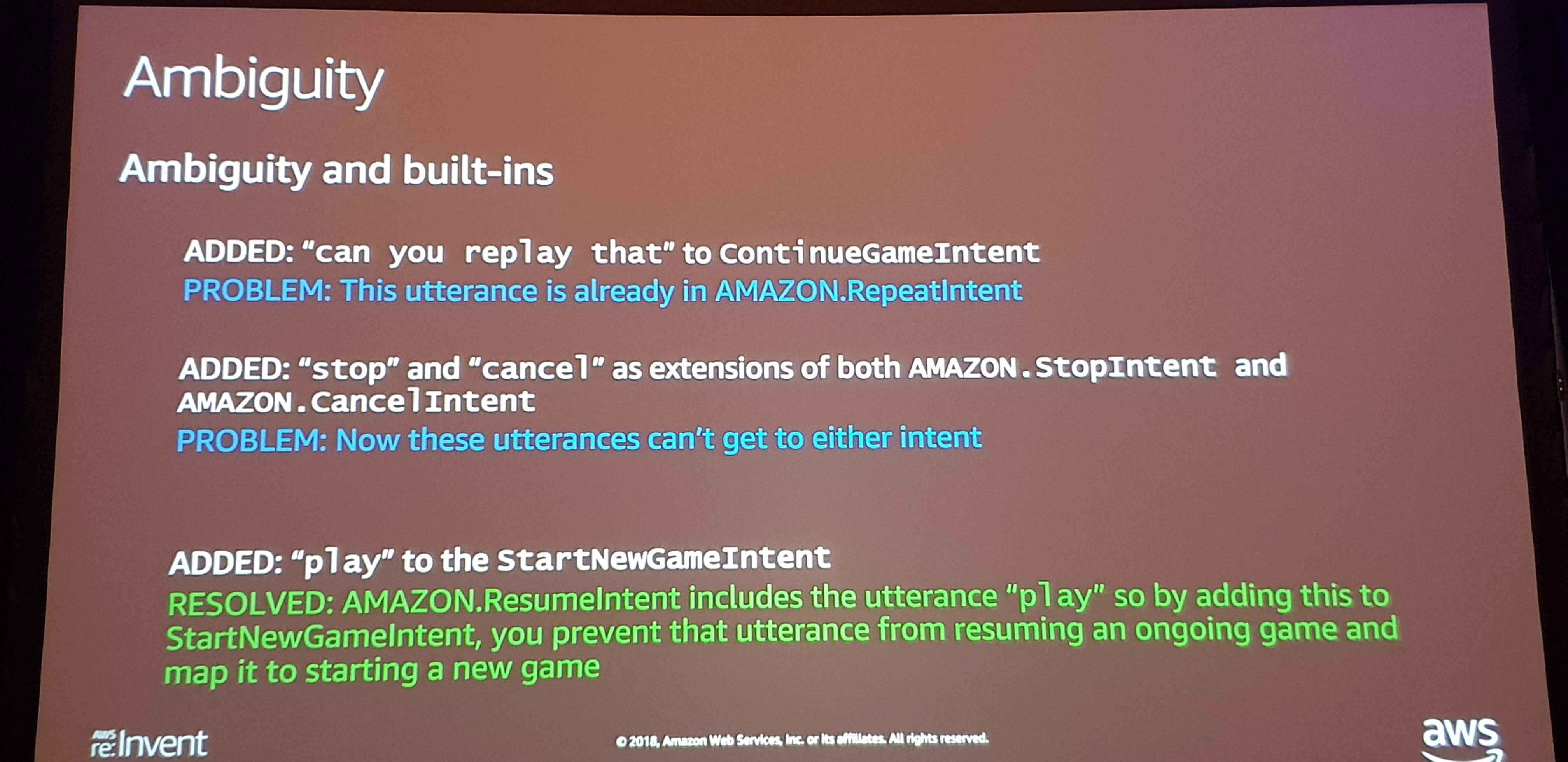

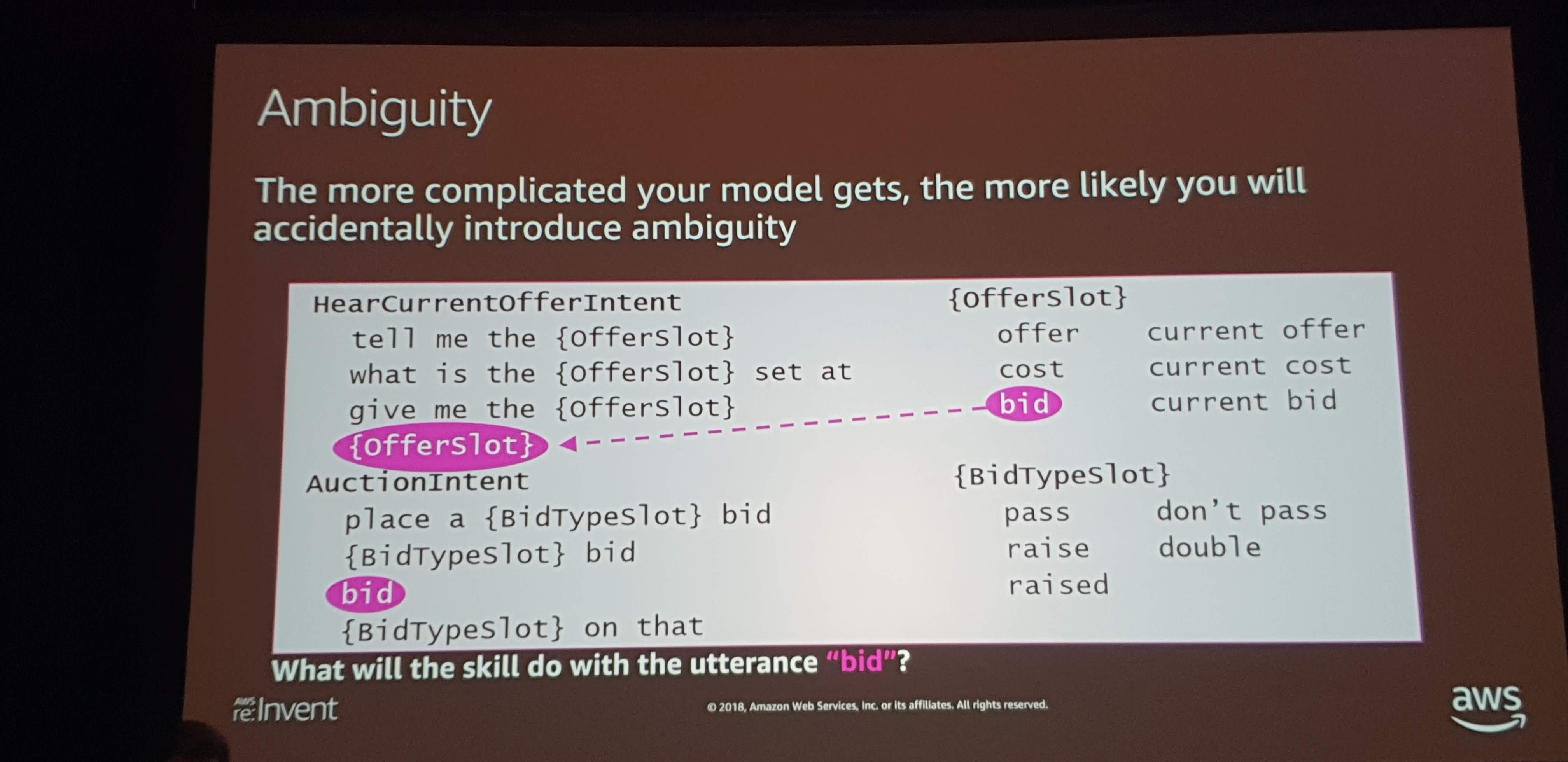

The problem with ambiguity is that it doesn’t translate well to FST. When there are multiple FST matches, Alexa gets rid of them and goes to the statistical model. This can happen when multiple intents share the same utterances, but also can happen when there’s a phrase plus slot grouping that matches a full utterance.

Developers sometimes introduce ambiguity through conflicts with built-in intents. Again, the conflicts lead to a fallback to the statistical model, and the statistical model doesn’t cover built-in intents. The built-in intents’ utterances of course change over time, so the best thing to do is to watch the intent history, which provides the confidence score. A 1.0 confidence shows that there was an FST match rather than a statistical model match, and the history also shows which intent ultimately resolved the request.
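The fallback behavior can be sketched like this. Everything here is a toy of my own: exact lookup stands in for the FST rules, word overlap stands in for the statistical model, and the sample utterances are invented. It only shows the shape of the decision, not how Alexa scores anything.

```python
SAMPLES = {
    "StartNewGameIntent": ["start a new game", "play the game"],
    "ContinueGameIntent": ["continue the game", "play the game"],
}


def rule_matches(utterance, samples):
    """Stand-in for the FST: intents with an exactly matching sample."""
    return [intent for intent, phrases in samples.items() if utterance in phrases]


def statistical_guess(utterance, samples):
    """Toy fuzzy matcher: best word overlap with any sample utterance."""
    words = set(utterance.split())
    best_intent, best_score = None, 0.0
    for intent, phrases in samples.items():
        for phrase in phrases:
            other = set(phrase.split())
            score = len(words & other) / len(words | other)
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent


def resolve(utterance, samples):
    """Return (intent, source): a unique rule match wins, anything else falls back."""
    matches = rule_matches(utterance, samples)
    if len(matches) == 1:
        return matches[0], "fst"
    return statistical_guess(utterance, samples), "statistical"


print(resolve("start a new game", SAMPLES))
print(resolve("play the game", SAMPLES))
```

“start a new game” belongs to one intent only, so the rule match stands. “play the game” is listed under both intents, so the rule matches are discarded and the fuzzy matcher decides, which is exactly the situation that is hard to predict.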

Finally, a point that doesn’t fit elsewhere, but I found it interesting. If you need a slot where values will only ever be a subset of a built-in slot’s values, you’ll usually have more accuracy with the ASR if you create a custom slot. This is because the biasing is spread across the different example values, and the more values the less biasing is applied. I would have thought the built-in slots were so finely tuned that they were better, but there you go.

Make Money with Alexa Skills

The next session was on making money with Alexa, presented by Jeff Blankenburg. Interest in making money was, unsurprisingly, very high, and the reserve line was long well before the doors opened.

Developers can make money in two ways through skills. Users can purchase physical goods, through Amazon Pay, or they can purchase digital goods. This second revenue stream is what Blankenburg presented.

There are three types of digital goods, or in-skill purchases (ISPs):

- One-time purchase (or entitlement): This is like a book. Once bought, always owned.

- Consumables: You can’t have your cake and eat it, too. Think: extra life in a game.

- Subscriptions

Before you start thinking of making an I Am Rich skill, know up front that there are fairly low price limits. Subscriptions and one-time purchases can cost between $0.99 and $99.99. Consumables range from $0.99 to $9.99. The dollar signs there are on purpose, because ISPs are only for US skills at the moment. Additionally, that lower bound isn’t entirely accurate. Amazon can discount the price for the users, and has done this for Prime members. The developer is still getting the full cut (70% of list price).
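As a quick sanity check on those numbers, here is a small sketch that validates a list price against the limits above and works out the 70% cut. The product type keys and the rounding to the nearest cent are my assumptions; the session didn’t say how fractions of a cent are handled.

```python
from decimal import Decimal

# Price limits in USD as described in the session.
PRICE_LIMITS = {
    "subscription": (Decimal("0.99"), Decimal("99.99")),
    "one_time": (Decimal("0.99"), Decimal("99.99")),
    "consumable": (Decimal("0.99"), Decimal("9.99")),
}
DEVELOPER_SHARE = Decimal("0.70")


def developer_cut(product_type, list_price):
    """Return the developer's share of the list price, rounded to cents."""
    low, high = PRICE_LIMITS[product_type]
    price = Decimal(list_price)
    if not low <= price <= high:
        raise ValueError(f"{product_type} price must be between {low} and {high}")
    # The cut is based on list price, even if Amazon discounts what the user pays.
    return (price * DEVELOPER_SHARE).quantize(Decimal("0.01"))


print(developer_cut("consumable", "0.99"))
print(developer_cut("subscription", "9.99"))
```

Passing prices as strings keeps the arithmetic exact, which matters once you start summing many small payments.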

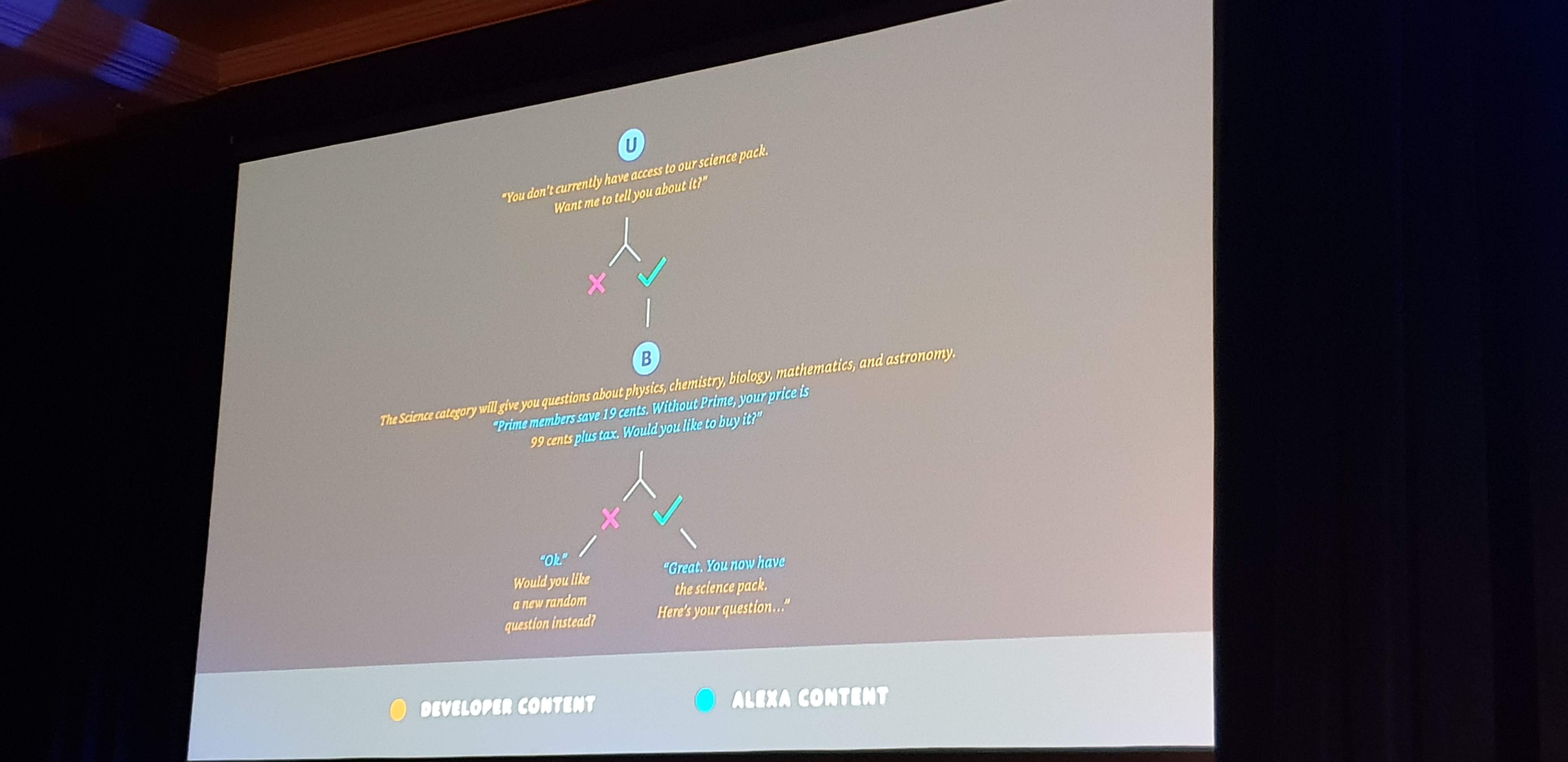

The discount mechanism that Amazon applies is why the implementation process isn’t as simple as telling the user from the skill what the price is and handing it off to Amazon. Instead, developers are sending the price and some descriptive prompts, then Alexa handles the rest.

Blankenburg didn’t touch on it, but I imagine that another reason for Alexa to take such a large role in the purchase flow is to prevent dark patterns from tricking users into spending more than they planned.

The purchase flow is a true flow and the skill needs to have logic that handles all of the possible permutations and outcomes. For example, when users enter a purchase flow, the skill can ultimately receive a status that shows whether the purchase was accepted, declined by the user, or if there was an error. Blankenburg noted that the most common reason for an error was that the device didn’t support ISPs. This happens most often with third-party Alexa devices, as well as the iOS app.
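A minimal sketch of handling those outcomes might look like the following. It works on a plain dictionary rather than any SDK object, and the `payload` / `purchaseResult` field names and the status strings are written from my memory of the Alexa documentation, so treat them as assumptions and check them against the current reference. The prompts are made up.

```python
def handle_purchase_response(request):
    """Pick a spoken reply based on how the purchase flow ended."""
    # Assumed shape: {"payload": {"purchaseResult": "ACCEPTED" | "DECLINED" | "ERROR"}}
    result = request.get("payload", {}).get("purchaseResult")
    if result == "ACCEPTED":
        return "Thanks! Your extra life is ready."
    if result == "DECLINED":
        return "No problem. Let's get back to the game."
    # Errors (for example, a device that doesn't support purchases) and any
    # status this sketch doesn't know about get the same safe fallback.
    return "Sorry, that purchase couldn't be completed. Let's get back to the game."


print(handle_purchase_response({"payload": {"purchaseResult": "DECLINED"}}))
```

The important design point is the last branch: whatever comes back, the skill should land somewhere sensible instead of leaving the user in silence.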

Developers need to also think about how users will get to the purchase. Most often, users will purchase after the skill prompts them. As with any other context, you want to prompt users when purchasing is in the forefront of their thoughts and when there’s a clear connection between what they’re doing or just did and the purchase. You offer players an extra life when they’re getting low, not when they’re just starting out.

Finally, persist information about purchases. You don’t want to hit the monetization API all the time, plus you need to know whether that consumable has indeed been consumed or not. Blankenburg also mentioned reconciling the persisted information if users make a refund or cancellation request (which is handled automatically for subscriptions, and through Amazon customer service for the others). Essentially, you take away the purchase when a user no longer has access to it.
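The bookkeeping described above can be sketched with a small ledger. This one lives in memory to keep the example short; in a real skill it would be backed by whatever persistent store you use. The class and method names are hypothetical.

```python
class PurchaseLedger:
    """In-memory stand-in for a per-user persistent purchase record."""

    def __init__(self):
        self.owned = set()  # one-time purchases and active subscriptions
        self.units = {}     # consumable product id -> units remaining

    def grant(self, product_id, units=0):
        """Record a purchase; pass units for consumables."""
        if units:
            self.units[product_id] = self.units.get(product_id, 0) + units
        else:
            self.owned.add(product_id)

    def consume(self, product_id):
        """Use one unit of a consumable; return False if none are left."""
        if self.units.get(product_id, 0) <= 0:
            return False
        self.units[product_id] -= 1
        return True

    def revoke(self, product_id):
        """Remove access after a refund or cancellation."""
        self.owned.discard(product_id)
        self.units.pop(product_id, None)


ledger = PurchaseLedger()
ledger.grant("extra_lives", units=2)
ledger.grant("hint_pack")
print(ledger.consume("extra_lives"), ledger.units["extra_lives"])
```

Calling `revoke` during reconciliation keeps the local record in line with what the user is actually entitled to.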

Monetization was another big topic last year, so I expect you’ll continue to see more interest in this topic and more examples of how it works. Before then, you can check out an example skill on GitHub: https://github.com/alexa/skill-sample-nodejs-fact-in-skill-purchases