AWS re:Invent 2018 Day One Recap

This week I’m at AWS re:Invent 2018 and will be trying to attend as many voice and conversational sessions as I can. If you’ve never been, the scale of it is hard to imagine. There’s no “voice track.” There are a ton of different sessions, often overlapping. If you weren’t able to make it, or you were but missed some sessions due to scheduling, I’ll share my recaps here. At the end of the week, I’ll also share my overall view of the week and how it compares to 2017.

A quick, quick note of self-promotion if you don’t mind. I’m almost finished with my book on building voice applications. It covers some of the topics in this blog post in depth, like using SSML and dialog management. I’d love to hear what you think about it. It’s in early release from Manning Publications right now, and if you buy it, you’ll get a say in the final release and get it when it comes out. Thank you, and if you want to chat more about this post or anything voice-related, reach out at dustin@dcoates.com or @dcoates on Twitter.

How to Get the Most Out of TTS for Your Alexa Skill

First up were Remus Mois and Nikhil Sharma, who both work on text-to-speech at Amazon, on the team that builds the Alexa and Polly voices. Amazon currently has 27 Polly voices available for use inside Alexa skills, out of 55 Polly voices overall, and the team’s goal is to have at least one male and one female voice in each locale where Alexa is available. Mois and Sharma spoke about a few ways to work with speech to make skills a better overall experience. This matters because many of the tactics they showed can aid understanding, while others improve how the skill is perceived. The tactics can even affect how people respond physically.

They started off, quite appropriately, with pauses. <break>, <s>, and <p> provide different levels of pauses: <s> gives sentence-level pausing and <p> gives paragraph-level pausing. Meanwhile, the <break> tag lets you set an explicit duration for the pause through its time attribute. Mois mentioned that studies have shown that listeners sync their breathing with the speaker. Leave out the pauses, and listeners take fewer breaths (or none) and feel uncomfortable.
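To make the three pause tags concrete, here is a minimal JavaScript sketch that assembles an SSML string from sentences and paragraphs. The helper name buildSpeech and the sample text are hypothetical, and the 10-second cap on <break> reflects my understanding of Alexa’s SSML reference, so check it against the current docs.

```javascript
// Hypothetical helper: each inner array is one paragraph of sentences.
// <s> adds a sentence-level pause, <p> a paragraph-level pause, and an
// optional <break> adds an explicit pause of pauseMs between paragraphs.
function buildSpeech(paragraphs, pauseMs = 0) {
  // Assumption: Alexa caps <break> at 10 seconds, so clamp the value.
  const ms = Math.min(pauseMs, 10000);
  const separator = ms > 0 ? `<break time="${ms}ms"/>` : "";
  const body = paragraphs
    .map((sentences) => `<p>${sentences.map((s) => `<s>${s}</s>`).join("")}</p>`)
    .join(separator);
  return `<speak>${body}</speak>`;
}

// Assumes the text is already XML-safe (no unescaped &, <, or >).
const ssml = buildSpeech(
  [["Welcome back.", "Here is the first question."], ["Which city hosts re:Invent?"]],
  500
);
console.log(ssml);
```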

Mois and Sharma moved next to <say-as> and <phoneme>, which change how Alexa pronounces words. For example, <say-as> can spell out a word, “w-o-r-d.” Or, with <phoneme>, you can specify exactly how to pronounce a word using X-SAMPA or IPA. Other tools in this realm are <emphasis>, <prosody>, and whispering via <amazon:effect name="whispered">. All of these are SSML (the amazon:effect tag is an Amazon-introduced extension), and developers can test them in the Alexa developer console. On top of that, if you really want to change how Alexa says a response, you can forgo the Alexa voice altogether. As of this year, Alexa’s SSML also supports the <voice> tag, which lets a Polly voice speak on Alexa’s behalf.
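Here is a minimal JavaScript sketch of the tags from this paragraph, built as plain strings. The helper names are hypothetical, the pecan transcription is only illustrative, and Kendra is a Polly voice I’m assuming is available to Alexa skills in your locale.

```javascript
// Escape characters that would otherwise break the SSML (XML) markup.
function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Hypothetical helpers; each returns an SSML fragment.
const spellOut = (word) =>
  `<say-as interpret-as="spell-out">${escapeXml(word)}</say-as>`;

// alphabet can be "ipa" or "x-sampa".
const phoneme = (word, ph, alphabet = "ipa") =>
  `<phoneme alphabet="${alphabet}" ph="${escapeXml(ph)}">${escapeXml(word)}</phoneme>`;

// fragment is assumed to be SSML already, so it is not escaped.
const whisper = (fragment) =>
  `<amazon:effect name="whispered">${fragment}</amazon:effect>`;

// Let a Polly voice (assumed here: "Kendra") speak instead of Alexa.
const voice = (name, fragment) =>
  `<voice name="${escapeXml(name)}">${fragment}</voice>`;

const response =
  "<speak>" +
  spellOut("word") +
  ". " +
  voice("Kendra", `I like ${phoneme("pecan", "pɪˈkɑːn")} pie.`) +
  " " +
  whisper("This part is a secret.") +
  "</speak>";
console.log(response);
```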

Finally, they covered speechcons and the sound library. Speechcons are special words and phrases that Alexa pronounces more expressively, so they sound more human-like. In my experience, they’re interesting, but you need to be careful with them. Because they sound more like a human, they can be jarring next to the normal Alexa TTS. If you do use them, add a pause between the normal Alexa speech and the speechcon, and place them at either the beginning or the end of the response rather than sandwiched between normal Alexa speech.
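The placement advice above can be sketched as a small JavaScript helper. Speechcons are marked up with <say-as interpret-as="interjection">; the helper name, the 300 ms default pause, and the “abracadabra” example are my own assumptions, so check the speechcon list for your locale.

```javascript
// Hypothetical helper: place a speechcon at the start or end of a response,
// separated from the regular TTS by a short pause, never in the middle.
function withSpeechcon(text, speechcon, position = "start", pauseMs = 300) {
  if (position !== "start" && position !== "end") {
    throw new Error(`position must be "start" or "end", got "${position}"`);
  }
  const con = `<say-as interpret-as="interjection">${speechcon}</say-as>`;
  const pause = `<break time="${pauseMs}ms"/>`;
  const body = position === "start" ? con + pause + text : text + pause + con;
  return `<speak>${body}</speak>`;
}

console.log(withSpeechcon("That answer is correct.", "abracadabra"));
console.log(withSpeechcon("Thanks for playing.", "abracadabra", "end", 500));
```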

SSML is an incredibly underutilized tool for beginning voice developers. It’s a bit like building a web page and stopping at the HTML and JavaScript without ever touching the CSS. It works, but you’re missing a lot.

Alexa Everywhere: A Year in Review

Next up was a look back at 2018 by Dave Isbitski. Dave was employee #1 on the developer marketing team for Alexa, so he has a good picture of how today’s Alexa compares to the Alexa of four years ago. Plus, he always drops stats in his talks, which is useful if you’re interested in how the Alexa business and ecosystem are growing.

Because sessions were repeated, the presentations from the embedded videos might not match exactly what I present here. This is the summary of the session that I attended.

About those numbers:

- Over 50,000 skills
- Tens of millions of devices
- Hundreds of thousands of third-party Alexa developers
- 4 out of 5 Echo customers have used Alexa skills
- Third-party skill usage has grown 150% year over year

If you’re curious how these numbers compare to last year’s, check out my day two recap from 2017. The most interesting to me is that skill counts have doubled while skill usage has grown two and a half times. Compare this, too, to the numbers Isbitski provided earlier this year at VOICE Summit. At that point, third-party usage had grown by only 50% YoY.
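The “two and a half times” follows directly from the 150% figure: growth of N percent year over year multiplies last year’s number by 1 + N/100. A trivial JavaScript check (the function name is mine):

```javascript
// Convert year-over-year growth in percent into a multiplier on last year's value.
function growthMultiplier(percentGrowth) {
  return 1 + percentGrowth / 100;
}

console.log(growthMultiplier(150)); // 2.5: third-party skill usage this year
console.log(growthMultiplier(50)); // 1.5: the growth cited at VOICE Summit
console.log(growthMultiplier(100)); // 2: skill counts doubling
```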

The title of the talk suggested the first big focus: Alexa’s expansion beyond the living room. Device builders have pushed this since 2017, but in 2018 Amazon got into it, too: microwaves, clocks, and automobile adapters. You’ve seen them before. Amazon isn’t trying to keep all of this business for itself, though, and has continued to build out developer tools for extending Alexa’s reach, such as AVS and Alexa for Vehicles. (A note: I am intrigued and happy that the name is Alexa for Vehicles, not Alexa for Auto. Tractors, boats, airplanes?) This expansion goes beyond devices to other platforms. Windows users can now download a Windows 10 app, complete with a ctrl-windows-a shortcut. Finally, there’s a very welcome redesign of the Alexa mobile app, now with Alexa built in, so Alexa can go with anyone regardless of their mobile platform. (I sometimes wonder where we would be if Amazon had waited a year to release a phone, and built one around Alexa rather than five cameras and a 3D effect.)

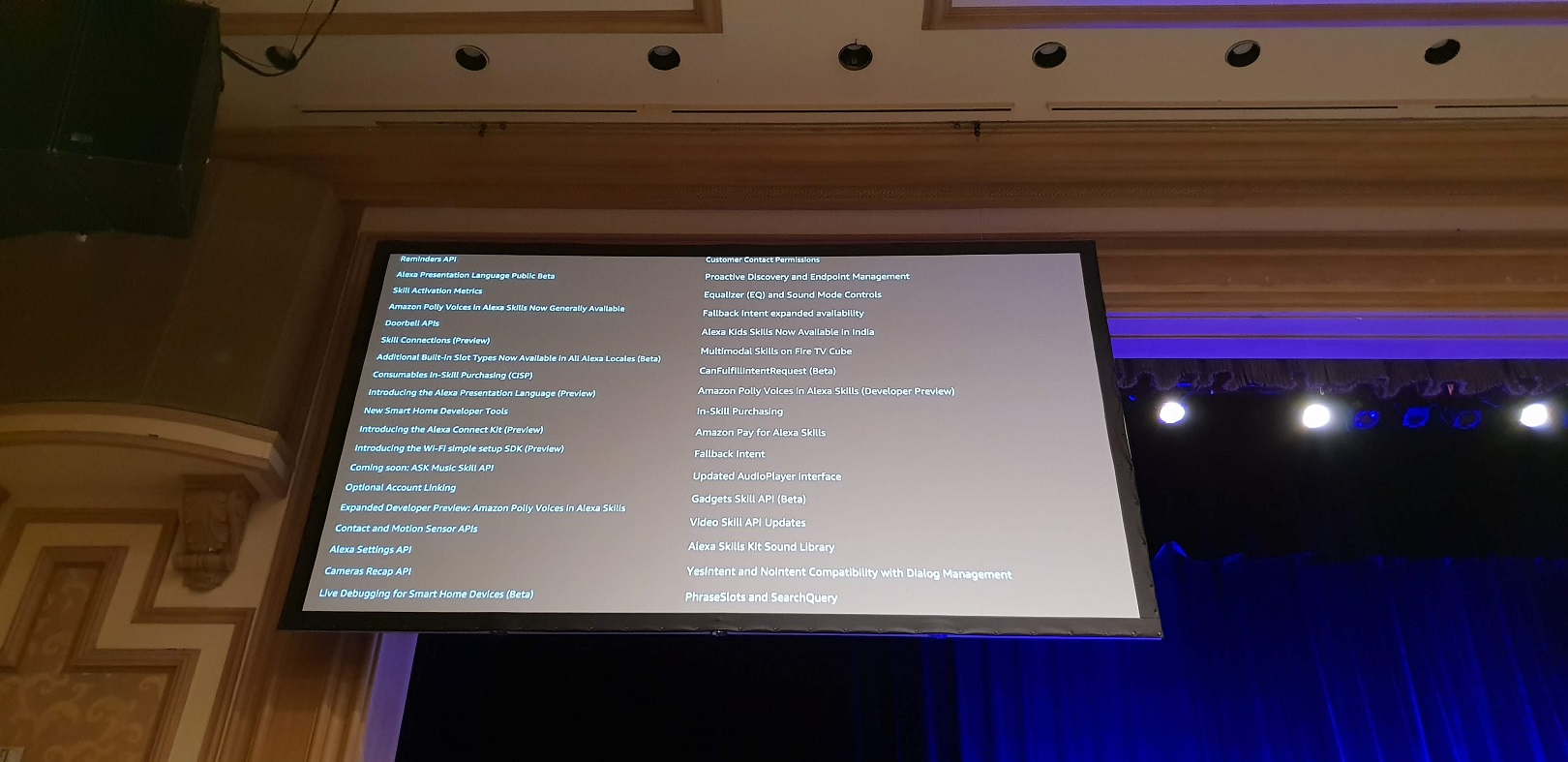

The second big theme of Isbitski’s talk was developer tools. This blog has covered a few, but many more arrived in 2018: everything from the Alexa Presentation Language to CanFulfillIntentRequest, from in-skill purchasing to selling physical goods and services via Amazon Pay, skill validation via the CLI and the developer console, and hosted skills and blueprints. It’s a lot, and it feels like Alexa has taken a large jump forward in 2018. The really nice thing is that, alongside the huge announcements, Amazon released a number of small improvements that make the development process easier.

Two that Isbitski talked about that I didn’t know about were ask dialog in the CLI and APL previews. ask dialog is a tool that simulates a back-and-forth with Alexa directly from the command line. APL previews push a skill response to an actual device.

Altogether, these efforts bring Alexa everywhere: everywhere a user will be, and everywhere a developer wants to develop.

Solve Common Voice UI Challenges with Advanced Dialog Management Techniques

The final session of the day was from Josey Sandoval and Carlos Ordoñez, who presented how to use dialog management to build advanced user interactions. What I really liked about this presentation is… Well, the best way to describe it is that some ideas are so clever you’d never have thought of them yourself, yet afterward you say, “Of course that’s how you should do it.” There were many clever ideas in this talk.

Most novice developers try to collect multiple pieces of information by using multiple intents. For example, they expect a user to say, “I want to buy a gift,” Alexa to ask, “What kind of gift?”, and the user to answer, “A Christmas gift.” Those two user utterances go to different intents. This is a brittle mess.

The better approach is Alexa’s dialog management. Dialog management is a state machine that ensures slots are filled, and confirmed if necessary. Developers set it up in the developer console or in the interaction schema, telling the Alexa service which slots are required and which need confirmation. (Intents can also require confirmation.) Developers also provide the prompts Alexa will use for those actions.
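For reference, here is roughly what the dialog portion of an interaction model looks like, written as a JavaScript object so it can be inspected in code. The slot names beyond name, the OccasionType custom type, and the prompt wording are hypothetical, and the field names follow my understanding of the ASK interaction model schema, so verify them against the current documentation.

```javascript
// Fragment of an interaction model (normally JSON). Hypothetical GiftIdea intent
// with a required "name" slot and a required "occasion" slot that must be confirmed.
const interactionModel = {
  dialog: {
    intents: [
      {
        name: "GiftIdea",
        confirmationRequired: false,
        prompts: {},
        slots: [
          {
            name: "name",
            type: "AMAZON.US_FIRST_NAME",
            elicitationRequired: true,
            confirmationRequired: false,
            prompts: { elicitation: "Elicit.Slot.GiftIdea.name" },
          },
          {
            name: "occasion",
            type: "OccasionType",
            elicitationRequired: true,
            confirmationRequired: true,
            prompts: {
              elicitation: "Elicit.Slot.GiftIdea.occasion",
              confirmation: "Confirm.Slot.GiftIdea.occasion",
            },
          },
        ],
      },
    ],
  },
  // Prompt text Alexa uses when eliciting or confirming.
  prompts: [
    { id: "Elicit.Slot.GiftIdea.name", variations: [{ type: "PlainText", value: "Who is the gift for?" }] },
    { id: "Elicit.Slot.GiftIdea.occasion", variations: [{ type: "PlainText", value: "What's the occasion?" }] },
    { id: "Confirm.Slot.GiftIdea.occasion", variations: [{ type: "PlainText", value: "A {occasion} gift, right?" }] },
  ],
};

// List the slots Alexa will keep asking for until they are filled.
function requiredSlots(model, intentName) {
  const intent = model.dialog.intents.find((i) => i.name === intentName);
  return intent ? intent.slots.filter((s) => s.elicitationRequired).map((s) => s.name) : [];
}

console.log(requiredSlots(interactionModel, "GiftIdea"));
```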

After the initial look at dialog management, Sandoval and Ordoñez examined their first advanced situation. Their “gift idea” skill had multiple intents that needed the same slots, such as a gift intent and a card intent. Both required a name, but if a user jumped directly from the gift intent to the card intent, the name didn’t carry over, because dialog management only works within a single intent. Their solution was to use the same slot name for every shared slot: name should always be name, no matter the intent. Then write a helper that fills the slot values from session attributes before elicitation. (You can decide at runtime whether Alexa asks for a slot value or not.)

You can see this in action on the GitHub repo for the project.

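```javascript
// Return the slots saved in session attributes for an intent, or an empty
// object if the user hasn't reached that intent yet in this session.
function getSlotsFromIntent(sessionAttributes, intentName) {
  let slots = {};
  if (sessionAttributes[intentName]) {
    slots = sessionAttributes[intentName].slots || {};
  }
  return slots;
}

...

// Fill any empty slot in the current intent with the same-named slot the
// user already provided to the GiftIdea intent.
const otherIntentSlots = getSlotsFromIntent(sessionAttributes, "GiftIdea");

for (const key in currentIntent.slots) {
  if (otherIntentSlots[key] && !currentIntent.slots[key].value) {
    currentIntent.slots[key] = otherIntentSlots[key];
  }
}

// Save all slots to session so other intents can reuse them later
sessionAttributes[currentIntent.name] = currentIntent;
attributesManager.setSessionAttributes(sessionAttributes);
```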
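The runtime decision of whether Alexa asks for a slot usually comes down to returning a Dialog.Delegate directive with an updatedIntent: as I understand it, slots that already hold a value (for example, one copied from session attributes) aren’t elicited again, while empty required slots still are. Here is a minimal, SDK-free JavaScript sketch of that idea; fillSharedSlots, delegate, and CardIntent are hypothetical names, and the intent shape mirrors the request JSON Alexa sends.

```javascript
// Return a copy of currentIntent whose empty slots are filled from
// same-named slots in otherSlots. Values the user just gave are kept.
function fillSharedSlots(currentIntent, otherSlots) {
  const slots = {};
  for (const [key, slot] of Object.entries(currentIntent.slots || {})) {
    const saved = otherSlots[key];
    slots[key] = !slot.value && saved && saved.value ? { ...slot, value: saved.value } : slot;
  }
  return { ...currentIntent, slots };
}

// Hand the (possibly updated) intent back to Alexa's dialog management.
function delegate(updatedIntent) {
  return { type: "Dialog.Delegate", updatedIntent };
}

// Hypothetical CardIntent turn: the user already named the recipient in GiftIdea.
const cardIntent = {
  name: "CardIntent",
  confirmationStatus: "NONE",
  slots: {
    name: { name: "name", confirmationStatus: "NONE" },
    message: { name: "message", confirmationStatus: "NONE" },
  },
};
const giftSlots = { name: { name: "name", value: "Sam", confirmationStatus: "NONE" } };

const directive = delegate(fillSharedSlots(cardIntent, giftSlots));
console.log(JSON.stringify(directive, null, 2));
```

This version builds a new intent instead of mutating the one from the request, which keeps the helper easy to test. If you use the ASK SDK for Node.js v2, responseBuilder.addDelegateDirective(updatedIntent) should add the same kind of directive.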

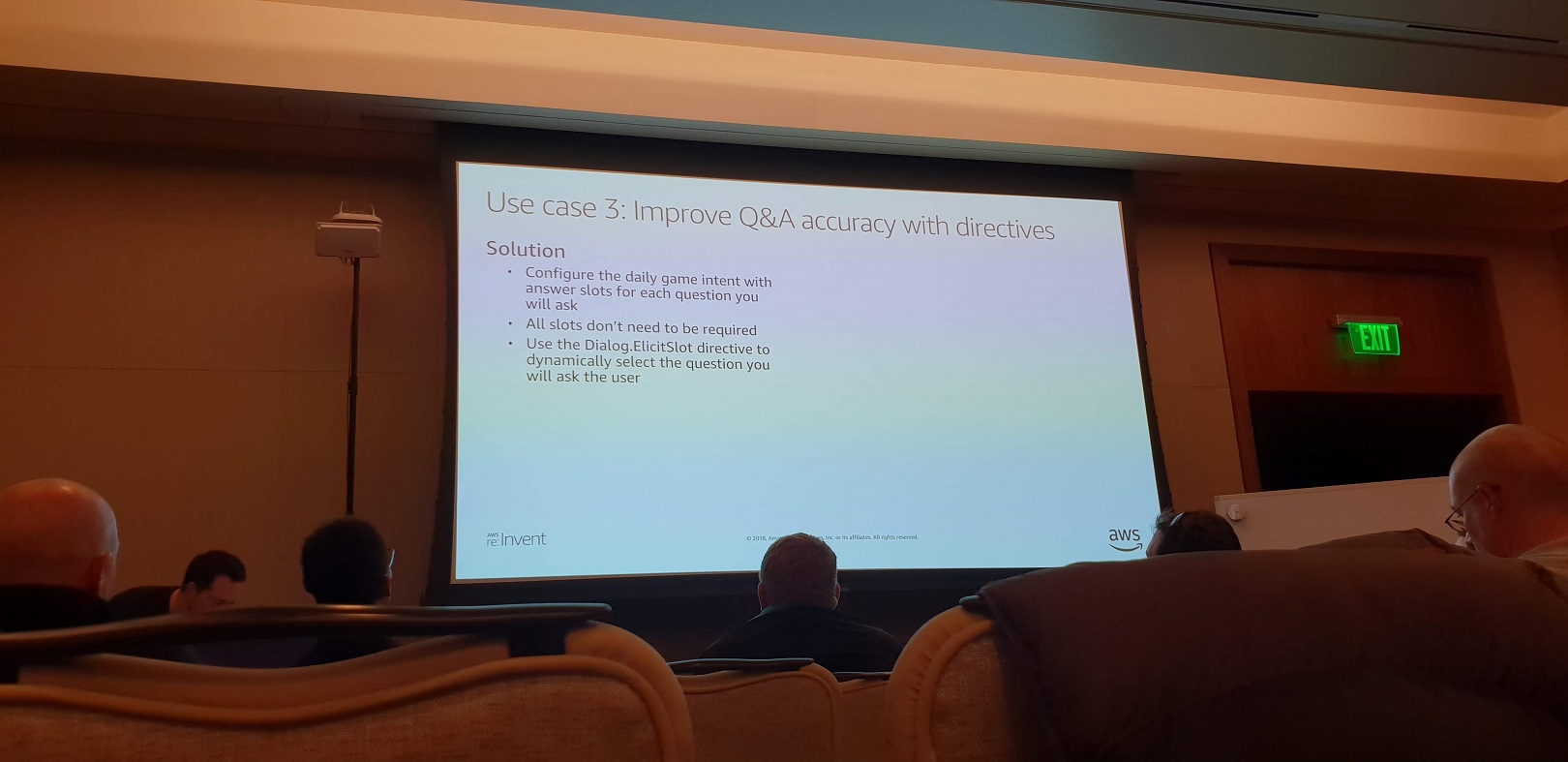

The second advanced case was that of slots where you don’t know the slot values when creating the interaction schema. An update to the interaction schema requires a re-review from the Amazon team, which is unacceptable for a skill that changes every day. The example here was that of a trivia game where the questions change every day.

First, Sandoval said that if you can list out 60–80% of the slot values in the interaction schema, you should absolutely do it. The AMAZON.SearchQuery slot type is better than the old AMAZON.LITERAL type, but not nearly as good as the biasing that comes from a built-in or custom slot type.
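Listing the values you do know means adding a custom slot type to the language model. Here is a minimal JavaScript sketch that builds one from known answers; customSlotType, CityAnswer, and the synonyms are hypothetical, and the values/name/synonyms shape reflects my understanding of the interaction model format.

```javascript
// Build a custom slot type entry for the language model's "types" array.
function customSlotType(name, answers) {
  return {
    name,
    values: answers.map(({ value, synonyms = [] }) => ({
      name: { value, synonyms },
    })),
  };
}

// Hypothetical answers for the quiz; list as many as you know ahead of time.
const cityAnswer = customSlotType("CityAnswer", [
  { value: "Las Vegas", synonyms: ["Vegas"] },
  { value: "Seattle" },
]);
console.log(JSON.stringify(cityAnswer, null, 2));
```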

If you can’t, you’ll use slot elicitation to bias the NLU toward filling the slot instead of moving to another intent. In the quiz game, the answer to one trivia question was “Las Vegas,” but the skill interpreted that as a weather request. When you elicit a slot, by contrast, you’re telling the Alexa service that unless there’s an extremely strong signal that the user wants to move to another intent, whatever the user says fills the slot. You set this up by adding a slot with the AMAZON.SearchQuery type and making it required. Then you elicit it from your code for as long as you deem necessary. With this approach, the quiz can last one question, five questions, or any other number you choose.
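Here is a minimal sketch of eliciting the slot for as long as you deem necessary, written as SDK-free JavaScript that returns a raw Alexa response. It assumes a hypothetical QuizIntent with a required AMAZON.SearchQuery slot named answer, a quiz array, and { index, score } kept in session attributes; the naive case-insensitive string match is also just for illustration.

```javascript
// One turn of a hypothetical quiz. If the user answered, score it and advance;
// then either elicit the "answer" slot again for the next question or end.
function quizTurn(intent, state, quiz) {
  let { index, score } = state;
  const answer = intent.slots && intent.slots.answer && intent.slots.answer.value;

  if (answer !== undefined && index < quiz.length) {
    // Naive scoring: case-insensitive exact match.
    if (answer.toLowerCase() === quiz[index].answer.toLowerCase()) score += 1;
    index += 1;
  }
  const sessionAttributes = { index, score };

  if (index >= quiz.length) {
    return {
      version: "1.0",
      sessionAttributes,
      response: {
        outputSpeech: { type: "PlainText", text: `That's the end of the quiz. You scored ${score} out of ${quiz.length}.` },
        shouldEndSession: true,
      },
    };
  }

  // Clear the old answer so the elicited slot starts empty.
  const updatedIntent = {
    ...intent,
    slots: { ...intent.slots, answer: { name: "answer", confirmationStatus: "NONE" } },
  };
  return {
    version: "1.0",
    sessionAttributes,
    response: {
      outputSpeech: { type: "PlainText", text: quiz[index].question },
      directives: [{ type: "Dialog.ElicitSlot", slotToElicit: "answer", updatedIntent }],
      shouldEndSession: false,
    },
  };
}

// Hypothetical quiz data; the number of questions is entirely up to the skill.
const quiz = [
  { question: "Which city hosts re:Invent?", answer: "Las Vegas" },
  { question: "Which company makes Alexa?", answer: "Amazon" },
];
const quizIntent = { name: "QuizIntent", confirmationStatus: "NONE", slots: { answer: { name: "answer" } } };

const first = quizTurn(quizIntent, { index: 0, score: 0 }, quiz);
console.log(first.response.outputSpeech.text);
```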

I’ve heard in many discussions with people at Amazon and with Alexa developers that dialog management is where it really clicks for people and they see the power of skills. Sandoval echoed this: “[Dialog model] is one of the most important and most underutilized tools for [NLU] accuracy.” It’s not just a boost to the user interaction, but to accuracy, too.

Check back here tomorrow for my notes on day two of re:Invent 2018.