Why ChatGPT won't replace search engines anytime soon.

Since OpenAI announced ChatGPT and people started to try it out, there have been plenty of breathless proclamations of how it will upend everything. One of those upendings is search. Go on Twitter or LinkedIn (or Bloomberg!), and you can read how ChatGPT and other LLMs are going to replace Google and other search engines.

But, really?

No, Google has little to worry about, at least in the short- to mid-term. Search engines have been around for decades, and will be around for decades more. The lack of real danger to them has to do with search relevance and user experience.

(Why do I feel this way? For the last seven years I’ve worked in search at Algolia, and for the past four I’ve focused exclusively on natural language, voice, and conversational search. I’ve learned what works and what doesn’t, and while I’m long LLMs, I’m not long a replacement of the existing search paradigm.)

Query Formulation

The first reason has to do, ironically, with query formulation. I say ironically here because much of the work around ML in search is to make query formulation less of a hurdle. In the past, the most basic search engines matched query text to the exact same text inside results. That means that if you searched for “JavaScript snippets,” then the words “JavaScript snippets” had to appear verbatim in the document you wanted to find. The problem is that this forced the searcher to predict which text would be in the documents.

Here’s an example: let’s say you’re cleaning your gas stovetop and you realize that it’s warm, even though you haven’t used it in a while. With an unintelligent search engine, you need to ask yourself before you search: “Should I use the word warm or hot? Does it make a difference in the results I’ll get back?”

Intelligent, ML-driven search works to take this burden away by expanding what counts as a match and including more conceptually similar matches, like warm and hot. Searchers spend less mental energy on determining the right search term, and they are much more likely to find the information they wanted originally.
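To make the difference concrete, here is a minimal sketch of exact matching versus expanded matching. Everything in it is an assumption for illustration: the `SYNONYMS` table, the `DOCUMENTS` list, and both function names are hypothetical, and a real ML-driven engine would learn that warm and hot are related (for example, from embeddings) rather than read it from a hand-written dictionary.

```python
# Hypothetical synonym table. A real engine would learn these
# relationships instead of hard-coding them.
SYNONYMS = {
    "warm": {"hot"},
    "hot": {"warm"},
}

# Hypothetical mini index of three documents.
DOCUMENTS = [
    "gas stovetop feels hot when not in use",
    "how to clean a gas stovetop",
    "warm weather recipes",
]


def exact_match(query, documents):
    """Return documents that contain every query term verbatim."""
    terms = query.lower().split()
    return [
        doc for doc in documents
        if all(term in doc.lower().split() for term in terms)
    ]


def expanded_match(query, documents, synonyms=SYNONYMS):
    """Return documents where each query term, or a synonym of it, appears."""
    results = []
    for doc in documents:
        words = set(doc.lower().split())
        # A term counts as matched if the term itself or any synonym is present.
        if all(words & ({term} | synonyms.get(term, set()))
               for term in query.lower().split()):
            results.append(doc)
    return results


print(exact_match("stovetop warm", DOCUMENTS))
print(expanded_match("stovetop warm", DOCUMENTS))
```

With exact matching, the searcher who typed warm misses the one document that talks about a hot stovetop. With expansion, that document comes back without the searcher having to guess the wording.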

ChatGPT responses are, however, heavily dependent on the prompt (i.e., query) formulation. OpenAI lists this as a limitation:

ChatGPT is sensitive to tweaks to the input phrasing or attempting the same prompt multiple times. For example, given one phrasing of a question, the model can claim to not know the answer, but given a slight rephrase, can answer correctly.

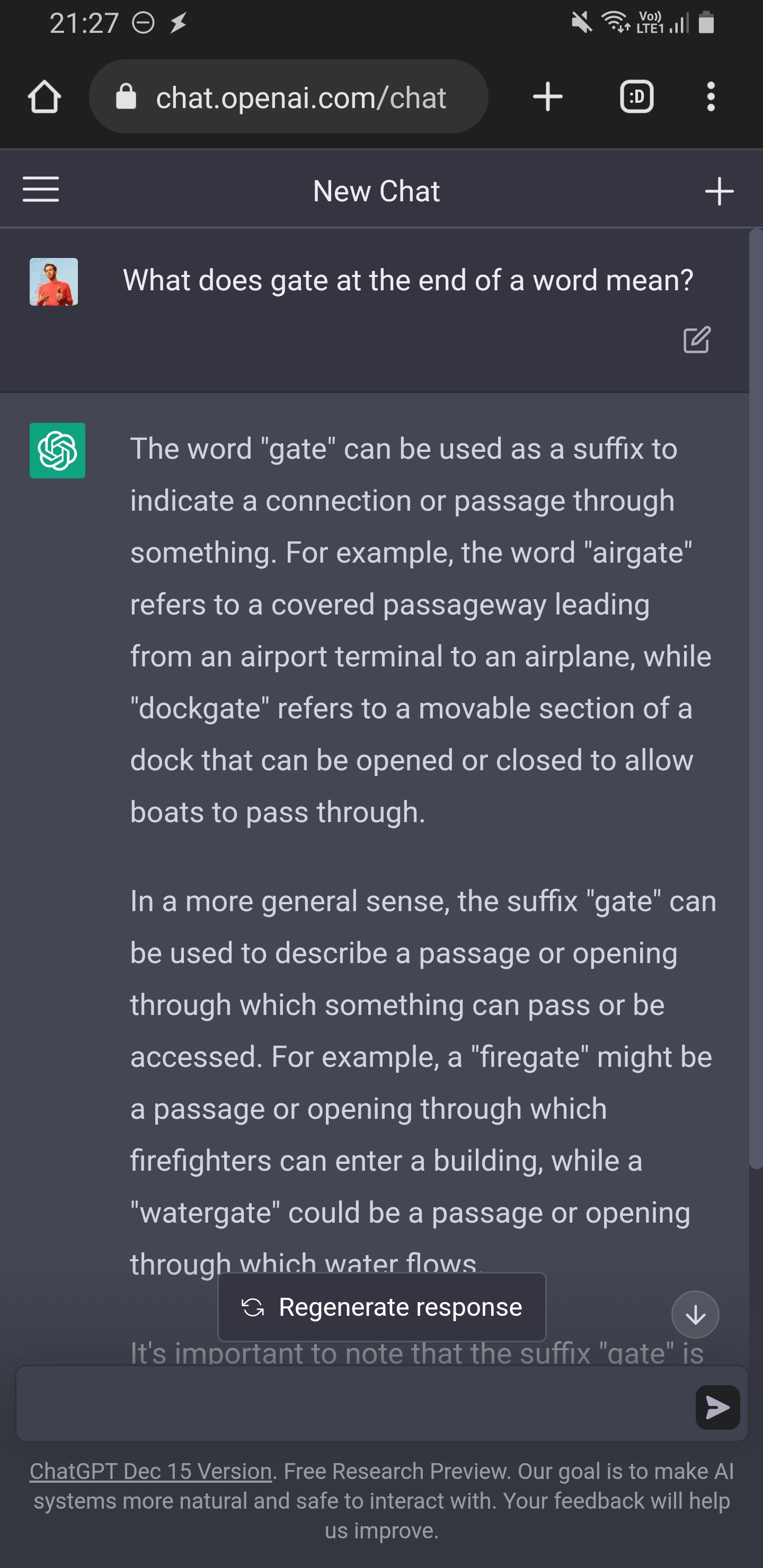

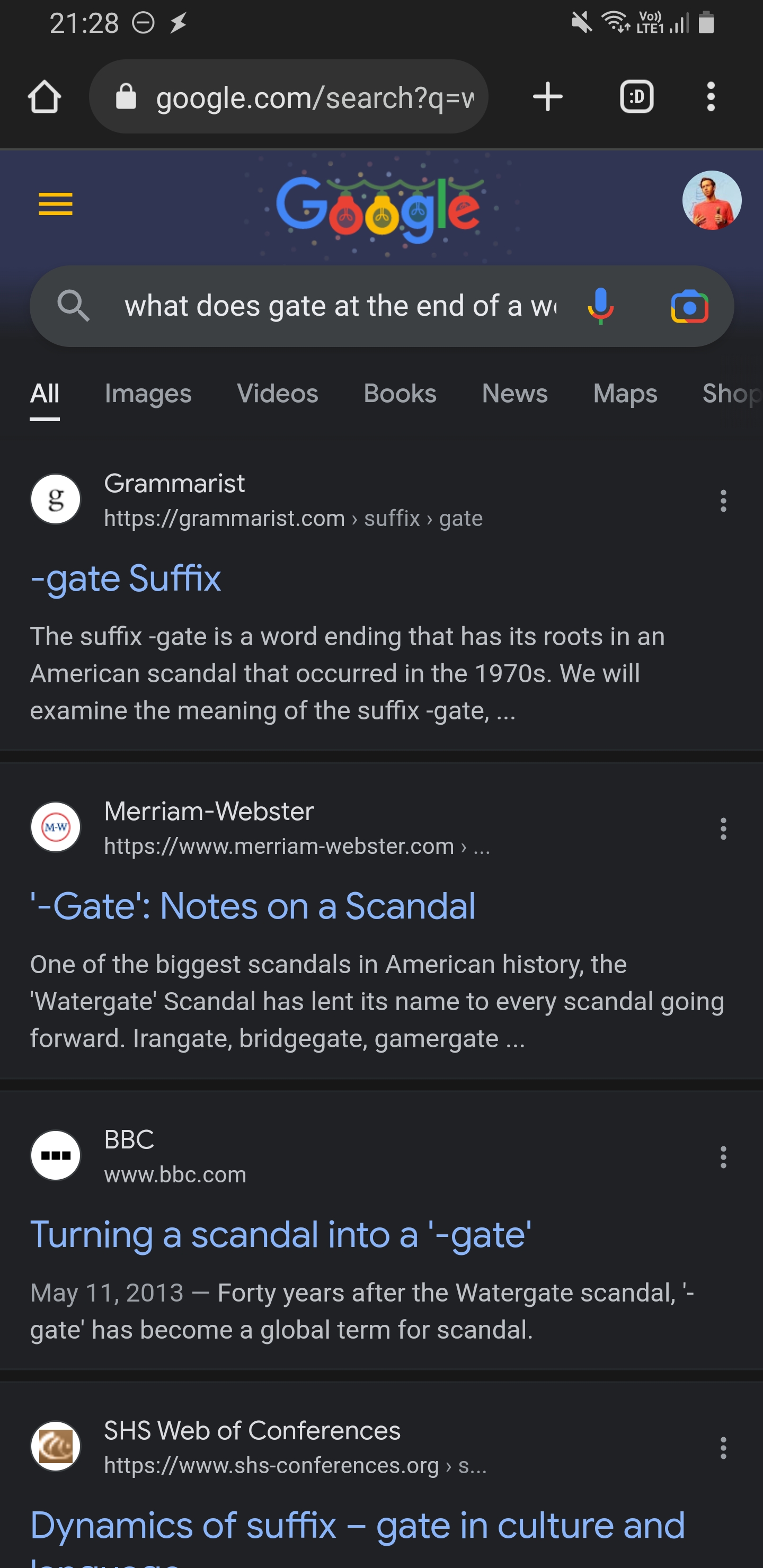

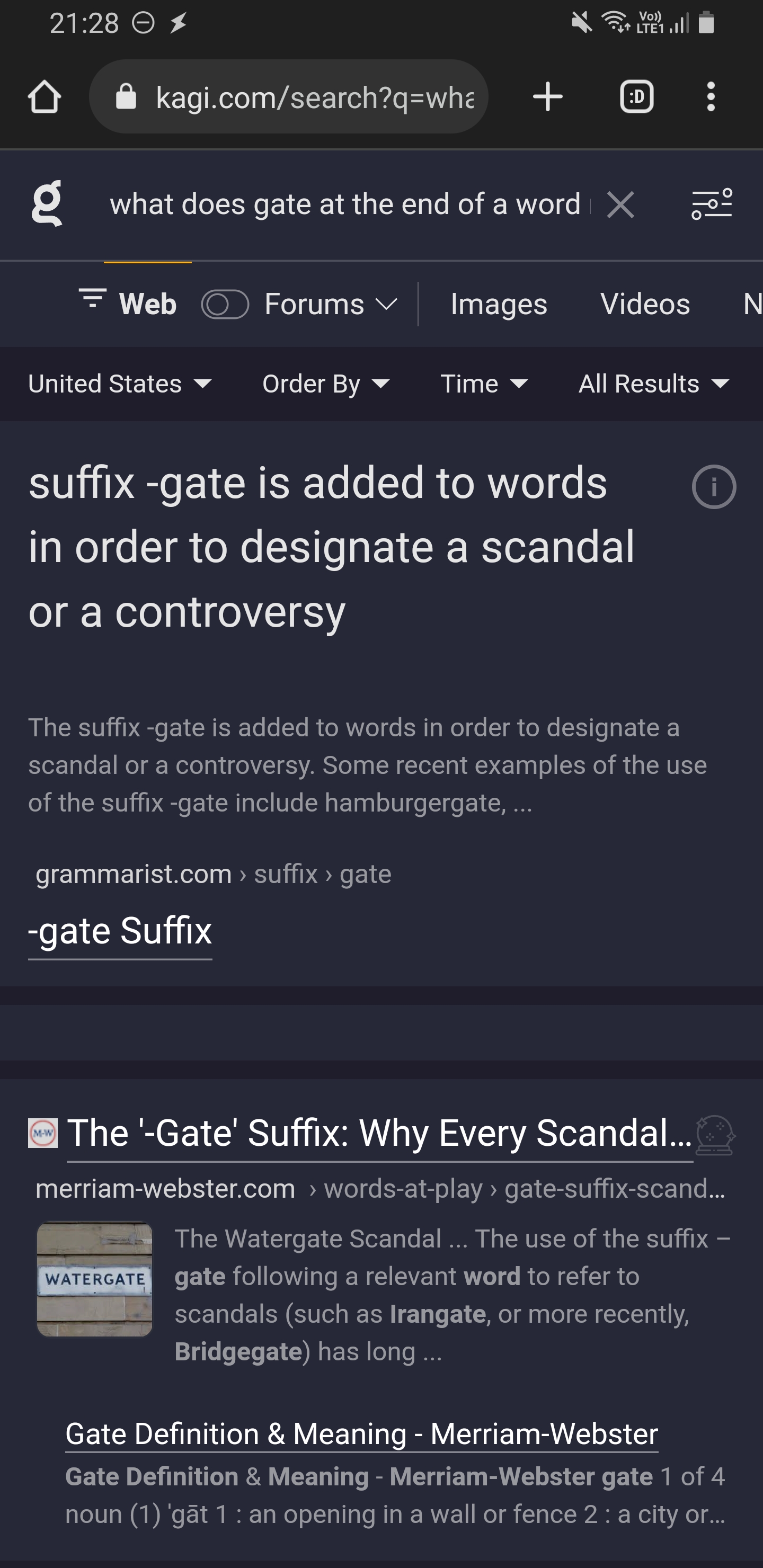

Sometimes this manifests when searching things the searcher already knows a lot about, but it’s much more of an issue when the searcher is hazy about details. If someone searches for what the suffix -gate means, there’s a very, very good chance that the correct result is about political scandals. Google and Kagi reflect this; ChatGPT does not:

(The end of ChatGPT’s response is saying that the use of the suffix -gate is rare. Tell that to Washington!)

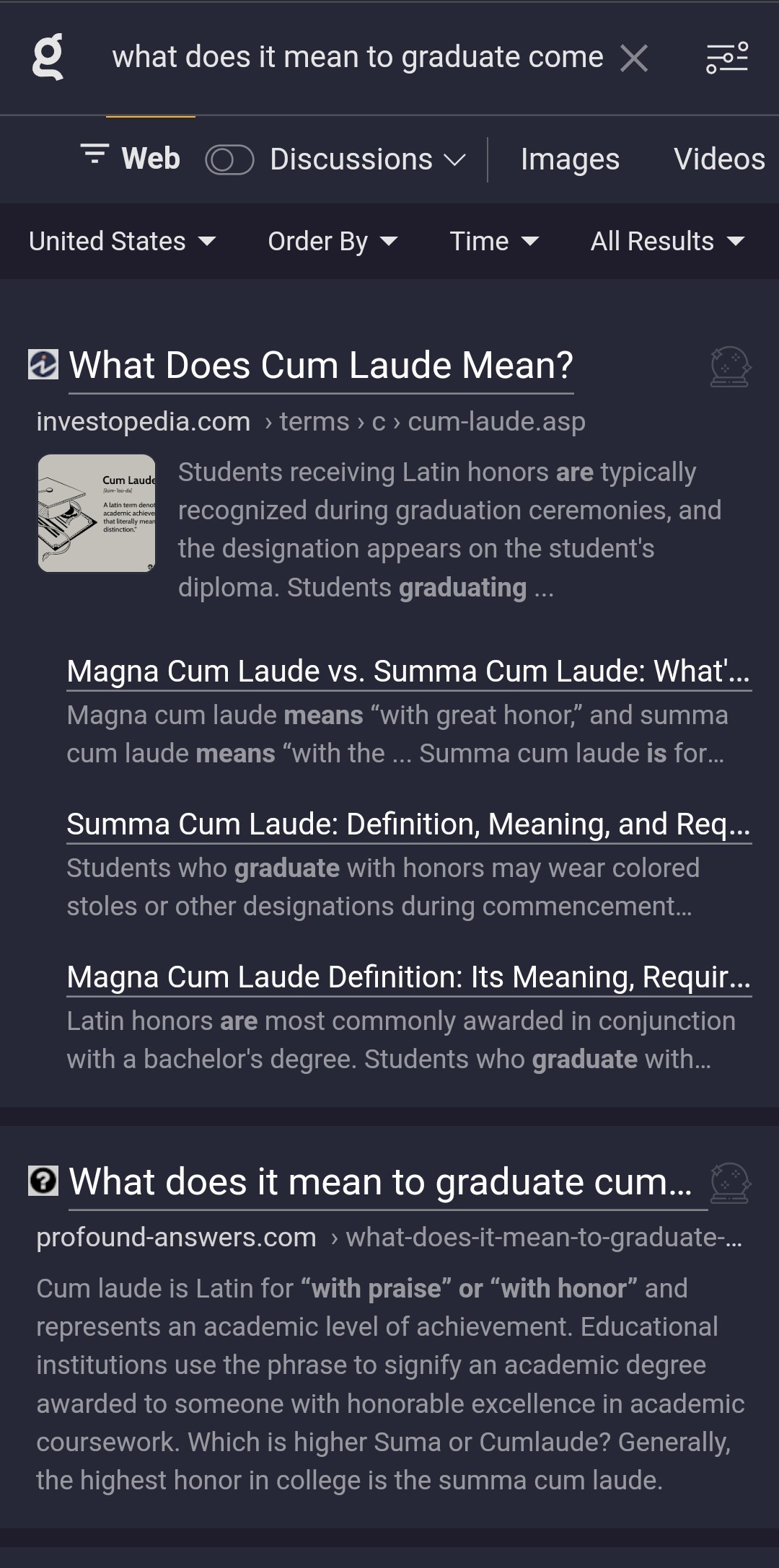

This is even more stark when it comes to typos. We all make typos, don’t we? And sometimes we spell words incorrectly because we don’t know any better. For example, the phrase cum laude is an uncommon one in daily life, and so there will be people who want to know more about it and don’t know the correct spelling. How does ChatGPT handle a spelling of come lad compared to Kagi?

This isn’t a case of ChatGPT not having the answer. It does when you use the correct spelling:

There’s another problem here, which is how ChatGPT shows the incorrect results. Or, really, how it shows the results generally.

User Experience

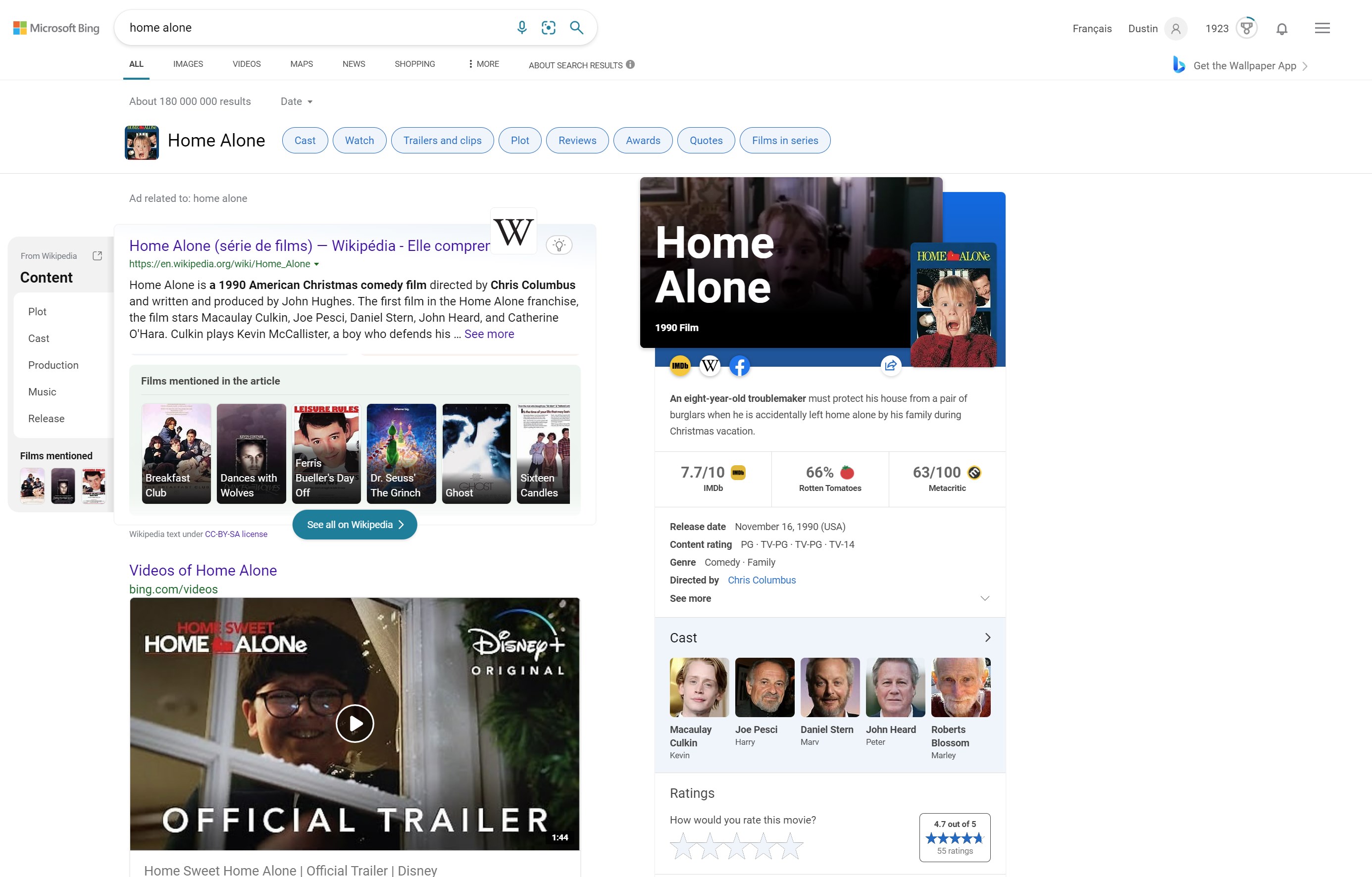

Search layouts have been, typically, the same for decades. Specifically: a set of results in order from most to least relevant (however that is measured). That has changed somewhat in recent years, with search engines bringing in answer boxes, side boxes, suggested searches, multimedia searches, and more. Take a look at a Bing search page:

Of course, Bing is an outlier. This one search results page includes approximately 20 different components: streaming options, video results, image results, and webpage results. Maybe that’s too much. Google, Kagi, and others have less. But the point is that searchers always get options.

It’s important for searchers to get options because the first result isn’t always the best for the search. It may be “objectively” the best overall, but a search is a combination of a query, an index, a user, and a context. All of those combined might make a result beyond number one the most relevant at that time. This blog post claims that the number one result on Google is clicked about 28% of the time. Whether or not that number is precisely right, the general point holds: the majority of clicks do not go to the first result.

What is chat-based searching? Only the first result.

Even more, it’s in a chat-based context. In a conversational interface, users expect to get a response that is relevant, with a minimal amount of “I don’t know” responses.

Chat also robs information of context. Landing on a page and seeing related information is good: it helps frame the information you find and perhaps even show you where the original snippet was incorrect or misleading.

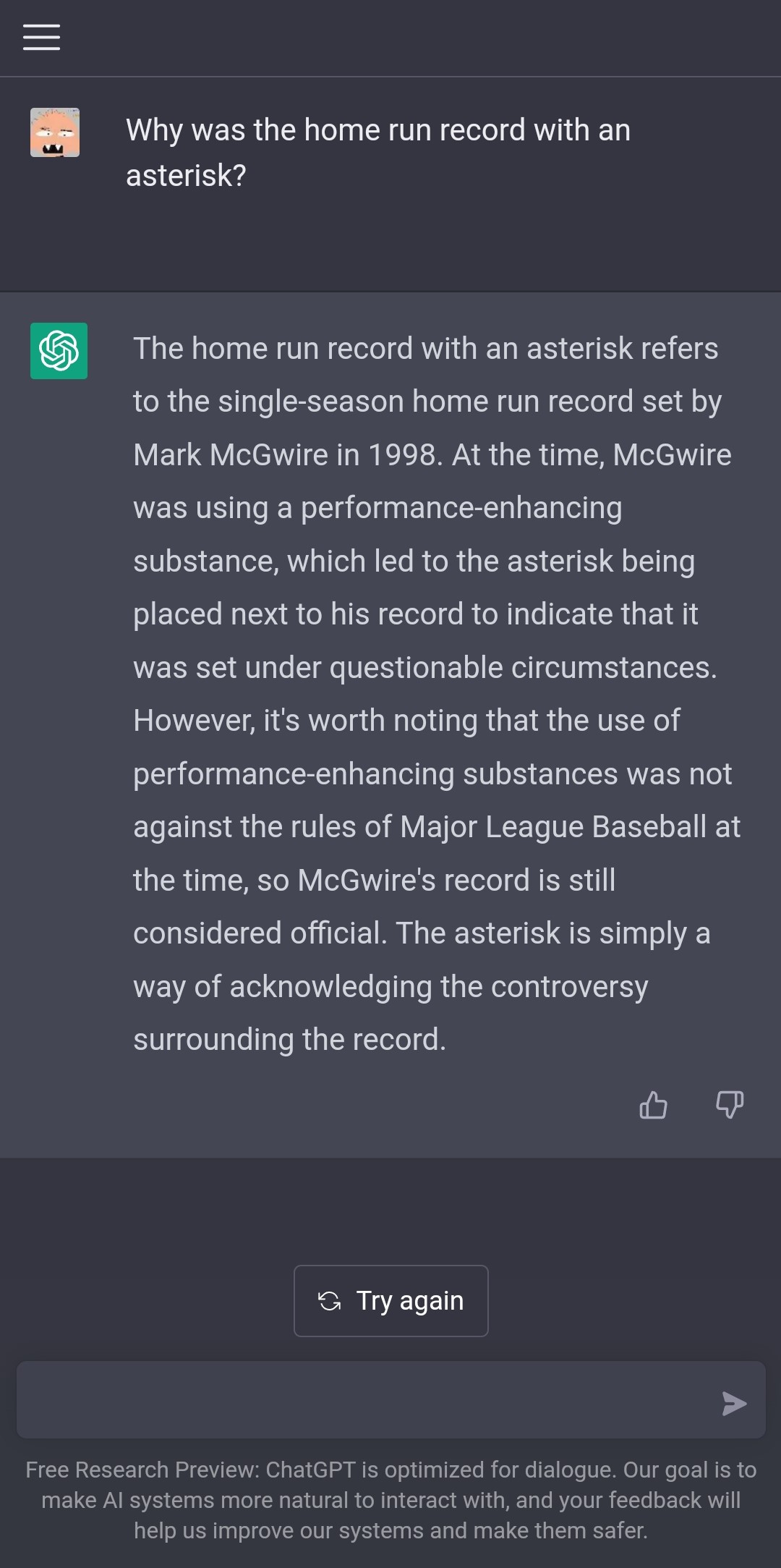

Take someone who wants to know about the baseball home run record. This person has heard that the record used to have an asterisk. But why? What was the record? This famously refers to Roger Maris’ 61 home run season in 1961, but the searcher doesn’t know, and so searches why was the home run record with an asterisk? Compare the answers from ChatGPT, Google, and Kagi:

ChatGPT provides an answer about the home run record from ‘98 with Mark McGwire, which became controversial years later, but isn’t the home run record with an asterisk. Google gives the correct answer in an answer box along with links out to sources, and Kagi provides results. Of these, Kagi might even be the best because while Maris’ is the one that comes to mind when people say “asterisk,” both McGwire and Bonds also have controversy attached to theirs.

To be fair, OpenAI is aware of this:

fun creative inspiration; great! reliance for factual queries; not such a good idea.

we will work hard to improve!— Sam Altman (@sama) December 11, 2022

But I do think that the lack of context and multiple choices is inescapable within a chat context. That’s why chat is great for finding business hours; less great for learning what it’s like to go through boot camp or why people like RomComs.

This isn’t even touching on product search. A large amount of search spend these days is not on SEO for Google, but building search for a site’s own product catalog. In these situations, it is so important for searchers to be able to see options, filter down with a click, and generally get deep into a “discovery phase.” This is not what chat is suited for.

There are other hurdles: legal (Australia has a law requiring payment from Google and Facebook for news; what will they think when the news is automatically summarized without sources?), cost, and speed. These may one day be surmountable. So, too, might the overly confident incorrect results.

But user experience: this one isn’t going away. Okay, yes, you might argue that it’s easy enough to fix. A chat-based system could show multiple results at once and let the user decide which is the best. Maybe even rank them by confidence. Then it could even link out so that a searcher could see the information and decide if it’s accurate. Even better, why not also include suggestions for follow-up queries or multimedia that might be interesting?

Congratulations, you’ve just rebuilt search.

So, in short: LLMs are great. Understanding user intent is fantastic. Automatic summarization is powerful. Search is going nowhere.

So, what do you think? Let me know if I’m way off base. Please, only human-written responses: dustin@dcoates.com.