The Three Levels of Voice Interaction: Voice-First, Voice-Only, and Voice-Added

When I speak with people who focus on “voice-first,” I notice that more of them are coming to see voice not as a platform but as a mode. This categorization seems to downplay voice (no one has a “keyboard” strategy), but I disagree. As a mode, voice is now relevant everywhere. And, despite the snarky comment about keyboard strategies, voice requires a different way of designing user experiences.

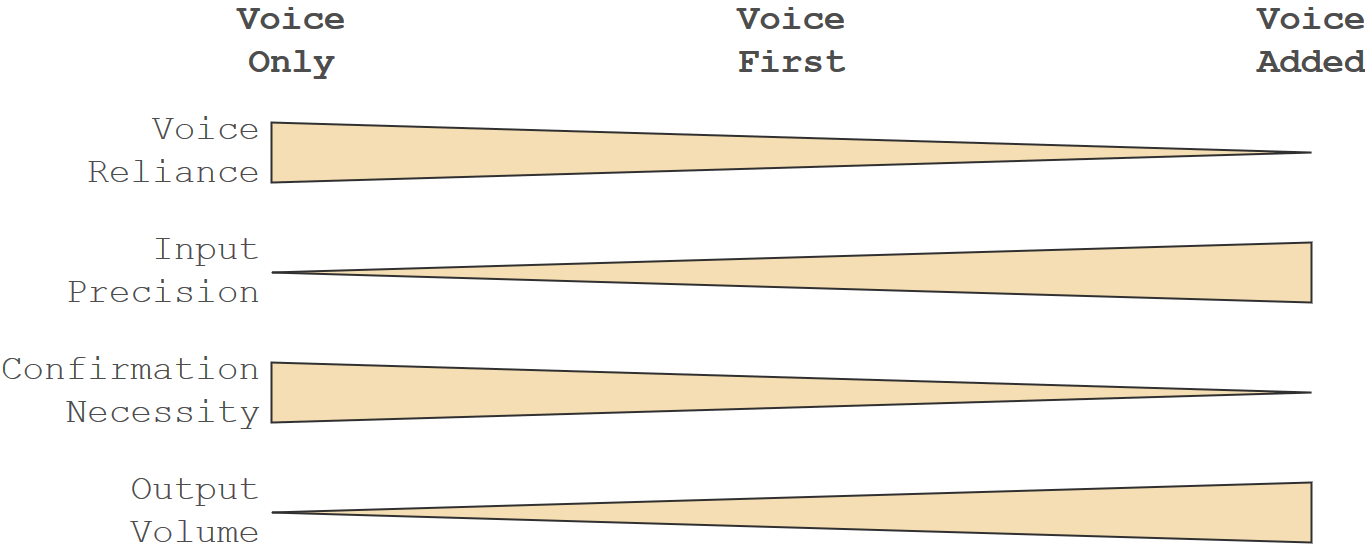

I think of applications that use voice as falling into one of three groups: voice-only, voice-first, and voice-added. These are not rigid categories, but stops on a spectrum. An application can, in fact, move “down” the spectrum by shedding modes, usually because of user behavior, such as when a voice-first device becomes voice-only once it is placed out of view. Use cases matter, and, combined with these groupings, they provide a mental model for how to design and develop voice applications.

Voice-Only

My readers are smart (and, I hope, easily flattered), so I’m sure you have no difficulty guessing what voice-only entails. It’s an application in which voice is the only input and the only output. True voice-only interactions are very rare. Even the devices that place the heaviest emphasis on voice often have another mode. The most obvious examples are the Amazon Echo and Google Home.

Both devices, when used on their own, rely exclusively on voice for user input. Neither relies only on voice for output. You’re protesting; I can hear it from here. What other output is there? Yet that humble light on top of each device communicates information on its own. “I’m listening,” says the pulsing blue light on the Echo. “Something went wrong,” explain the Google Home’s shaking dots. If you’re building voice applications and you’re not working with Alexa Voice Service, the Google Assistant SDK for Devices, Mycroft, or the like, this isn’t something you control.

(Related, but unrelated: I want to write more on the hardware side of voice assistants. It’s a selfish interest, since there’s not a ton of demand compared to the interest in guidance on the software and VUX side, but working with hardware is fun and challenging in ways that software isn’t.)

Even that output isn’t guaranteed. On my desk, I have an Echo hidden behind a monitor, where I can’t see the LED indicator. In his talk at VOICE Summit 2018, Jeremy Wilken described the frustration his daughter felt when she wasn’t tall enough to see the Google Home’s lights spinning. She thought the Home hadn’t heard her, when in truth the device was simply slow to respond. This is your first look at the loss of a mode. Never assume that all of the modes ostensibly available to an application will always be there. For Wilken’s daughter, a spoken confirmation (“Hey, I’m about to do what you asked me to do.”) would have been sufficient.

Both input and output are hampered in a voice-only environment. Input is less precise; we know this well. Speech-to-text systems will sometimes misunderstand people even in a perfect environment with a “standard” accent, and they will misunderstand them even more in a noisy place like a car or a living room with a bunch of small kids who can’t be quiet for just a minute (we’re trying to play the music they asked for!). Speech-to-text errors are a problem at all three levels of voice. They are more of a problem with voice-only because the user cannot see what text came out of the speech.

Voice-Only Input

Because the speech-to-text (STT) output isn’t visible, the user needs to trust that the application is performing the right action. Of course, trust can be a big ask. If I’m asking an application to turn on my lights, how much trust do I need? If I’m asking an application to buy a pizza, how much trust do I need? If an action is destructive or otherwise difficult to reverse, the application must confirm it.

Don’t, though, get skittish and ask for confirmation on every request. As the developer, you need to judge whether a situation needs a confirmation. Insert a “did you really mean that?” too often, and the application becomes quicksand. This is where you draw on your experience building voice applications to decide whether to confirm the user’s request.

Oh, you don’t have experience and you’re just starting out? That’s why you’re reading this blog post? We are all beginners at some point. Draw on your experience using voice applications instead. A writer who doesn’t read is a poor author, a film director who doesn’t watch movies misses new ideas, and a voice application developer who doesn’t use voice applications misses the subtleties of what works and what doesn’t.

What you really need to know is this: the more your application relies on voice alone, the more you need to confirm, and the harder an action is to reverse, the more you need to confirm.
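These rules can be combined with the earlier one that destructive or hard-to-reverse actions must always be confirmed. Together they can be sketched as a small decision function. This is a minimal Python sketch, not a prescription. The names `VoiceLevel`, `Confirmation`, and `choose_confirmation` are hypothetical, and so is the exact mapping from level to confirmation style; they exist only for illustration.

```python
from enum import Enum


class VoiceLevel(Enum):
    # Hypothetical labels for the three levels discussed in this post.
    VOICE_ONLY = "voice-only"
    VOICE_FIRST = "voice-first"
    VOICE_ADDED = "voice-added"


class Confirmation(Enum):
    NONE = "none"          # act immediately
    IMPLICIT = "implicit"  # act, but restate what was understood
    EXPLICIT = "explicit"  # ask "did you really mean that?" before acting


def choose_confirmation(level: VoiceLevel, hard_to_reverse: bool) -> Confirmation:
    """Pick a confirmation style (an illustrative policy, not a standard)."""
    if hard_to_reverse:
        # Destructive or hard-to-undo actions (ordering a pizza) always get a check.
        return Confirmation.EXPLICIT
    if level is VoiceLevel.VOICE_ADDED:
        # The transcript is on screen and a keyboard is available for fixes.
        return Confirmation.NONE
    # Voice carries most or all of the interaction (turning on the lights):
    # restating the request is cheap and avoids an extra prompt.
    return Confirmation.IMPLICIT
```

Here is how the policy behaves in the post's two examples. Turning on the lights from a voice-only speaker yields an implicit confirmation. Ordering a pizza yields an explicit one at every level. Only the voice-added case skips confirmation entirely, and only for easily reversed actions, which keeps constant prompts from turning the application into quicksand.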

Voice-Only Output

For output, remember that audio is the only source of information in a true voice-only application. Remember, as well, that audio is temporal. It moves in a single direction (forward) at a single rate (one second per second). Audio doesn’t come back, and listeners can’t jump directly to the topic they want. And there is no screen to fall back on.

The voice-only level requires the most information-dense responses. The information most relevant to answering the request must come at the beginning of the response, while the information most relevant to moving the conversation forward must come at the end. The information in the middle, meanwhile, needs to be relevant, timely, and measured without tipping into terseness. Make every word in your response fight for its existence.
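One way to reason about this ordering is as a word budget. The direct answer leads, the prompt that moves the conversation forward closes, and middle details are included only while they fit. The Python sketch below assumes the details are already sorted from most to least relevant. The function `compose_response`, the word-count budget, and the restaurant strings are hypothetical. Counting whitespace-separated words is only a rough proxy for spoken length.

```python
from typing import List


def compose_response(answer: str, details: List[str], prompt: str, max_words: int) -> str:
    """Build a spoken response: answer first, prompt last, details in between."""

    def word_count(text: str) -> int:
        return len(text.split())

    # The answer and the forward-moving prompt are always kept.
    budget = max_words - word_count(answer) - word_count(prompt)
    middle: List[str] = []
    for detail in details:  # assumed ordered most relevant first
        cost = word_count(detail)
        if cost > budget:
            break  # stop at the first detail that doesn't earn its place
        middle.append(detail)
        budget -= cost
    return " ".join(part for part in [answer, *middle, prompt] if part)


details = ["It's about a mile away.", "Reviews mention long waits on weekends."]
print(compose_response("Luigi's is open until 10 tonight.", details, "Want me to call them?", 16))
# Luigi's is open until 10 tonight. It's about a mile away. Want me to call them?
```

Stopping at the first detail that doesn't fit, rather than skipping ahead to a shorter one, preserves the relevance order. A less relevant fact never displaces a more relevant one.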

One tactic to reduce word count is elision: removing nouns or items that either the user or the application has already explicitly mentioned. In a baseball application, this can take the form of an exchange about an upcoming game.

“When do the Astros play next?”

“September 3rd against the Pirates.”

There’s no need to repeat “the Astros,” because the listener will assume that the team playing the Pirates is indeed the Astros.

Notice, as well, that there’s no confirmation. There are only thirty major league teams and only one with a name similar to the Astros, which lowers the chance that a misrecognition will map to a different valid team. Other teams might call for a different level of confirmation. “When does New York play next?” might require an explicit confirmation (“Do you want the Yankees or the Mets?”) when the application knows nothing about the speaker. An implicit confirmation (“The Mets play next against the Braves.”) will suffice if the application knows that the speaker is a Mets fan, follows the National League, or lives in Queens.
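The New York case can be sketched as a resolver with three behaviors. It answers directly, using elision, when a request is unambiguous. It confirms implicitly when what it knows about the speaker narrows the choice to one team. It asks explicitly otherwise. This is a minimal Python sketch. The lookup tables, the schedule data (including the dates and opponents), `UserProfile`, and `answer_next_game` are all hypothetical; a real application would draw on its own data and user store. Location (living in Queens) is left out for brevity.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical lookup from what the user says to the teams it could mean.
TEAMS_BY_PHRASE = {
    "the astros": ["Astros"],
    "new york": ["Yankees", "Mets"],
}

# League membership, used to narrow an ambiguous request.
LEAGUE = {"Astros": "AL", "Yankees": "AL", "Mets": "NL"}

# Hypothetical schedule data: team -> (date, opponent).
NEXT_GAME = {
    "Astros": ("September 3rd", "Pirates"),
    "Yankees": ("September 4th", "Red Sox"),
    "Mets": ("September 4th", "Braves"),
}


@dataclass
class UserProfile:
    favorite_team: Optional[str] = None
    league: Optional[str] = None  # "AL" or "NL", if known


def answer_next_game(phrase: str, user: UserProfile) -> str:
    candidates = TEAMS_BY_PHRASE.get(phrase.lower().strip(), [])
    if not candidates:
        return "Sorry, which team did you mean?"
    if len(candidates) == 1:
        # Unambiguous: answer with elision, without repeating the team name.
        date, opponent = NEXT_GAME[candidates[0]]
        return f"{date} against the {opponent}."
    # Ambiguous: narrow the choice using what we know about the speaker.
    if user.favorite_team in candidates:
        candidates = [user.favorite_team]
    else:
        in_league = [team for team in candidates if LEAGUE[team] == user.league]
        candidates = in_league or candidates
    if len(candidates) > 1:
        # Still ambiguous: explicit confirmation.
        return f"Do you want the {' or the '.join(candidates)}?"
    # Narrowed to one team: implicit confirmation by naming it in the answer.
    team = candidates[0]
    date, opponent = NEXT_GAME[team]
    return f"The {team} play next on {date} against the {opponent}."
```

For a speaker the application knows nothing about, "New York" produces "Do you want the Yankees or the Mets?" For a known Mets fan or National League follower, it answers about the Mets directly and names the team so the user can catch a wrong guess.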

Voice-First

Voice-first describes an interaction that relies primarily, but not exclusively, on voice for input and output. Voice-first is generally marked by a screen serving as a nearly equal output, which can sometimes be used for input as well. Common voice-first devices are the Echo Show and Echo Spot, plus televisions and set-top boxes with voice input.

A device can move from voice-only to voice-first, or from voice-added to voice-first. Voice-only becomes voice-first with the addition of new modes, such as connecting Echo Buttons to an original Amazon Echo. Voice-added becomes voice-first with the loss of modes, such as a Pixel phone placed on a dashboard.

Special Considerations

Voice-first is often used as a broad umbrella covering both voice-first and voice-only applications. This does not stretch the definition much, because the interaction considerations for the two can be very similar. In many current cases, developers should assume that a user is interacting with a voice-first device in a voice-only manner. This is especially true for devices with smaller screens, where there is no assurance that the user is close to or facing the screen. Meanwhile, applications on devices where the screen is a more prominent part of the system, such as televisions, can more often assume that users can see the output on the screen. This illustrates voice-first’s place between voice-only and voice-added, and the extent to which voice levels exist on a spectrum.

Developers should never eschew the screen, even in situations closer to voice-only. Applications can use the screen to provide complementary information that is adjacent to the user’s request but does not directly answer it. In my mind, the canonical example is the weather. If a user asks for the weather, the current temperature and chance of precipitation are most pressing, but a screen provides space to add this evening’s weather as well. This information supplements the direct answer and should not be spoken aloud.
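The weather example can be sketched as a response object with separate spoken and displayed parts. In this minimal Python sketch, `Weather`, `Response`, and `weather_response` are hypothetical names. The `has_screen` flag stands in for however an application learns that a display is present. The spoken answer is identical with or without a screen, so the response still works when the screen is out of view. The screen only adds information that is never read aloud.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Weather:
    # Hypothetical forecast fields.
    temp_f: int
    precip_chance: int  # percent
    evening_summary: str


@dataclass
class Response:
    speech: str
    display: List[str] = field(default_factory=list)


def weather_response(weather: Weather, has_screen: bool) -> Response:
    # The direct answer is always spoken, so it survives the loss of the screen.
    speech = (
        f"It's {weather.temp_f} degrees with a "
        f"{weather.precip_chance} percent chance of precipitation."
    )
    if not has_screen:
        return Response(speech=speech)
    # Complementary information goes on the screen only and is not spoken.
    display = [
        f"Now: {weather.temp_f}°F, {weather.precip_chance}% chance of precipitation",
        f"This evening: {weather.evening_summary}",
    ]
    return Response(speech=speech, display=display)
```

Keeping the speech independent of `has_screen` is deliberate. If the device is voice-first on paper but used in a voice-only manner, the user still hears the complete direct answer.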

(The screen is most often additive, displaying information, though the newly released Google Assistant devices with screens add interesting input controls as well.)

Voice-first experiences that more closely resemble voice-added ones are often those with a larger screen, such as televisions. These are hybrids, because they combine voice-only input with voice-added output. Developers should treat this experience as equivalent to voice-only with regard to input, with one significant change: the display provides a place to show the speech-derived text. Users can see what the device understood, so these experiences require less explicit confirmation than their voice-only counterparts.

Voice-Added

The final level of voice interaction is voice-added, where voice is no longer the primary tool for input or output. Voice-added is most commonly seen on mobile, where voice is offered as an additional input mechanism to alleviate the pain of typing on an on-screen keyboard. Given the strength of today’s speech-to-text technology, voice-added rarely describes environments where voice is an output but another mode serves as the primary input. (Two examples of non-vocal input paired with a primarily voice output come from long ago: the Speak and Spell and the See ’n Say.)

The voice-added model continues the pattern of experiences needing less confirmation as voice becomes less important. Users should be able to clearly see the text representation of their speech. They can generally fall back to a keyboard when speech-to-text is having too much difficulty, which makes correction easier. Another common UI pattern that aids correction is displaying an interim transcript from the speech-to-text service. Some services, such as the speech recognition built into Chrome, revise their transcripts as people keep speaking and more context becomes available. Users can also stop early if they see that the service isn’t going to correct the mistake on its own.

Developers also need to think about the practical aspects of voice input, especially on mobile. Always-on listening is a significant battery drain on phones, and most applications will not have subtle wake-word detection. Instead, a “car mode” or “voice mode” can split the difference, adding large buttons that trigger common actions, including turning on the app’s listening mode. Developers can also treat a user’s switch into such a mode as a signal of a move toward eyes-free usage. They can respond with a voice-first interface that relies more heavily on voice output.

Whether voice output is desirable in a voice-added application depends on both the usage state and the use case. A shopping website that uses speech as a better search input will likely call for no vocal response at all, instead displaying results in the traditional way. Meanwhile, an app whose interactions naturally produce voice or audio (such as a phone dialer or music app) can use voice as an output, opening a conversational interface with the user. This is the last lesson of voice levels: as applications move away from voice, interactions tend to become less conversational, as data flows through channels other than natural language.

In all, the different levels of voice are not true levels at all, but a slide along which an application can move up and down as voice takes on more or less primacy. When voice reigns, applications need to take on much of the burden of proactively confirming requests and paring responses down to the absolute essentials, building a more conversational interaction style. As voice becomes a more additive part of the experience, applications can add secondary and tertiary information and rely more on user input as it becomes more precise. Ultimately, the most important thing for developers to remember is that an application’s level is not static, and they need to prepare for the loss of modes. In short, anticipate users’ behaviors and users’ needs.