Voice Search for Mobile, Voice-First, and Other Platforms

Recently, I had the opportunity to present at VOICE Summit 2018 in Newark, New Jersey. I spoke about how we at Algolia have been thinking about and building voice-powered search for our customers. This is that presentation.

Before we start: if you like this post, will you consider sharing it on Twitter or posting it to LinkedIn? There are too many apps out there with poor voice search experiences, and I’d like to remedy that.

Quickly: who am I, and why should you listen to me? I am Dustin A. Coates, a software engineer from Texas who is now based in France (2018 World Cup champions!). My official title at Algolia is “Voice Search Go-To-Market Lead,” which is, I admit, a mouthful. It means that I make sure Algolia builds voice search that is as smooth and powerful as the search we support on mobile and on the web, and that we’re talking about it with the right people. I’m also the author of a book on building Alexa Skills and Actions on Google. It’s in early release right now, meaning that you can follow along as I write it and get the finished version when it comes out in late 2018. Finally, I co-host a podcast, VUX World.

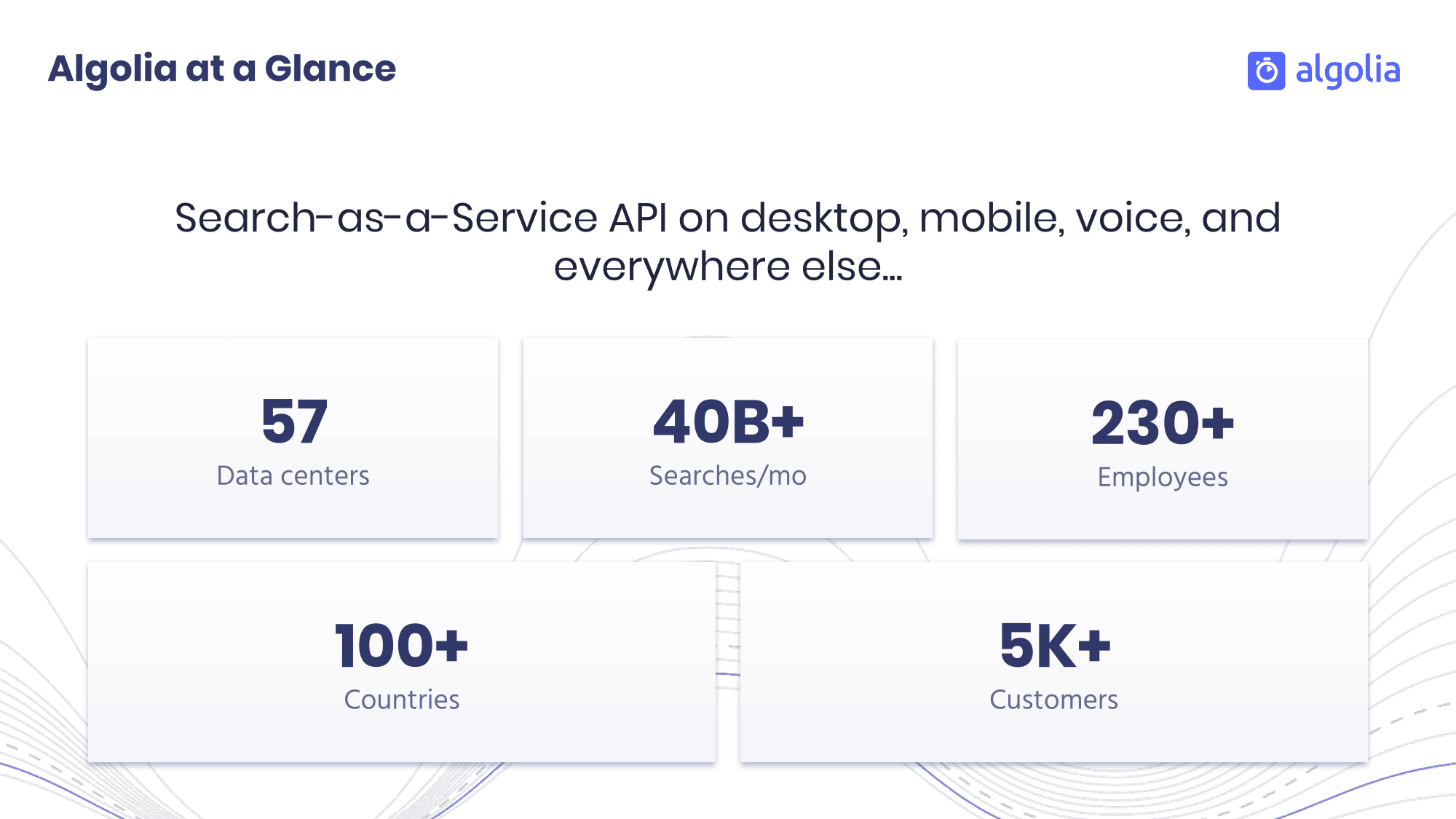

Algolia, meanwhile, is a search-as-a-service API. If you’ve used Twitch, Lacoste, or thousands of other websites and apps, you’ve used our search. While I come from Algolia, and I think highly of our search, I won’t include anything here that is specific to Algolia.

This talk is not about search engine optimization. Lately, I’ve often heard people use “voice search” to mean “how to rank your site higher in Google or Bing when users search through voice.” I have no interest in SEO. Moreover, I believe that if too many people rush into voice-first focused on SEO, it will be to the detriment of users, as businesses will focus on ranking rather than on retention or on building experiences no one has considered before.

Instead, this talk is about optimizing search engines. Once your users or customers are in your app or on your website, how can they use voice to search for what they want?

The goal when building any search, but especially voice search, is to provide the right result for the right user at the right time, presented in the right way.

Whom does voice search affect?

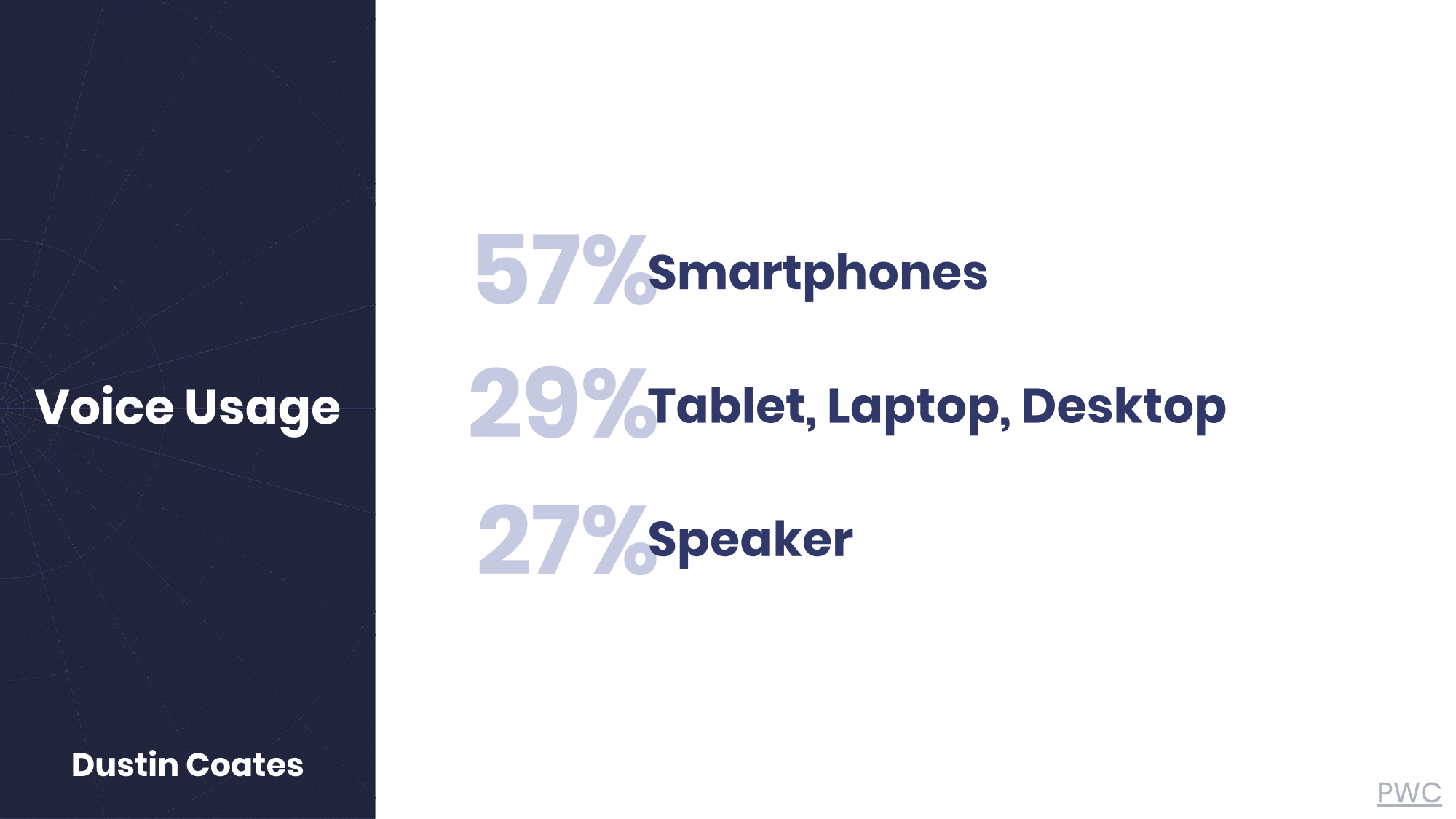

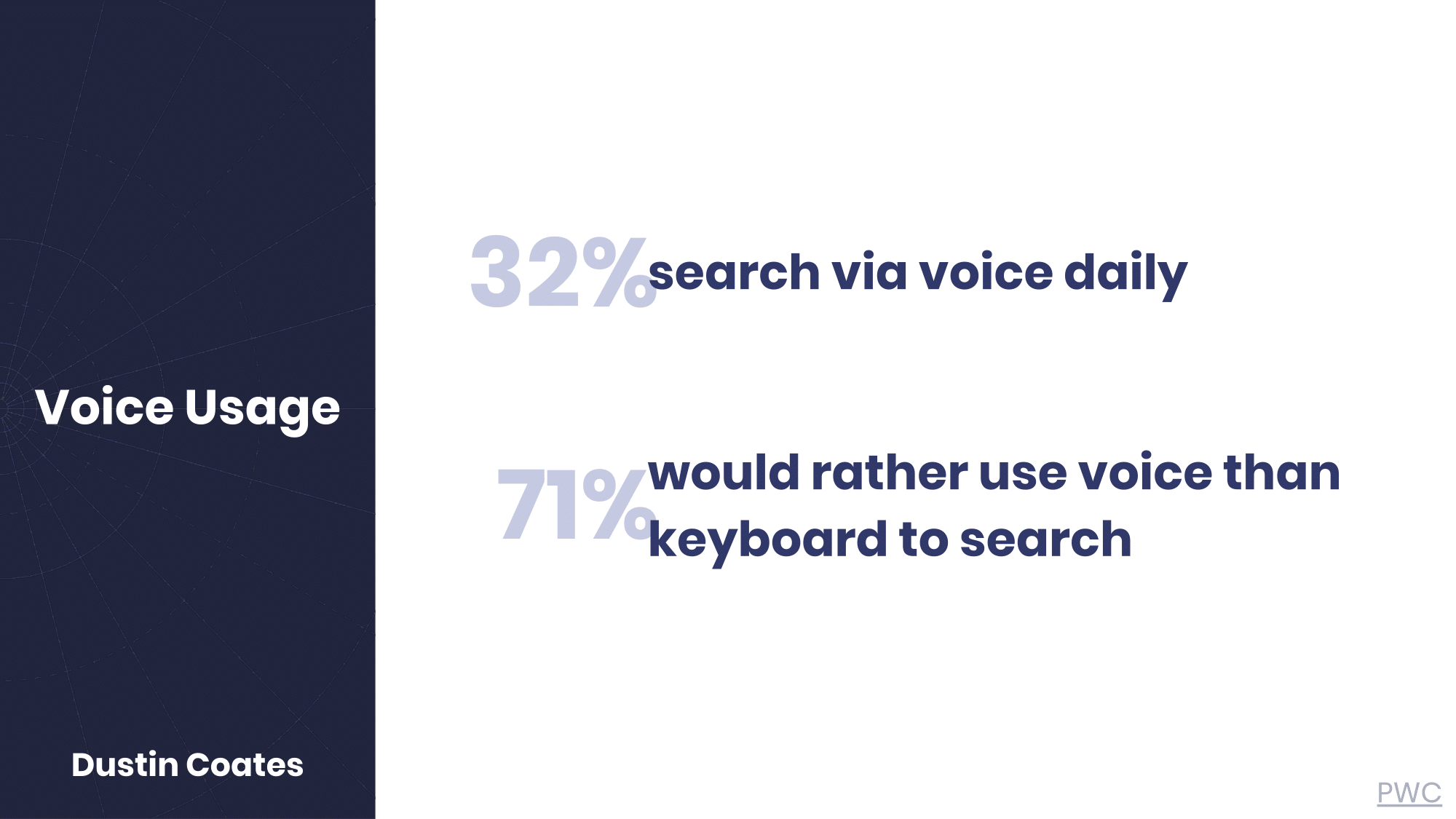

Every presentation on voice includes the “50% of searches will be via voice by 2020” figure, so I won’t. What I will point to are some figures from PricewaterhouseCoopers (PwC): 57% of people are using voice on smartphones; 29% on tablets, laptops, and desktops; and 27% on a speaker. These numbers aren’t mutually exclusive, because people use voice across multiple devices. Further, 32% search through voice daily, and 71% say they would rather use voice input than a keyboard.

Seventy-one percent!

How often do we hear that it’s still “early days” for voice? And yet, already, nearly three-quarters of people would rather use their voice as a search input.

Voice search affects everyone who has a website or a mobile or voice application. With numbers like these, users will walk away less satisfied if they can’t interact with your search through voice.

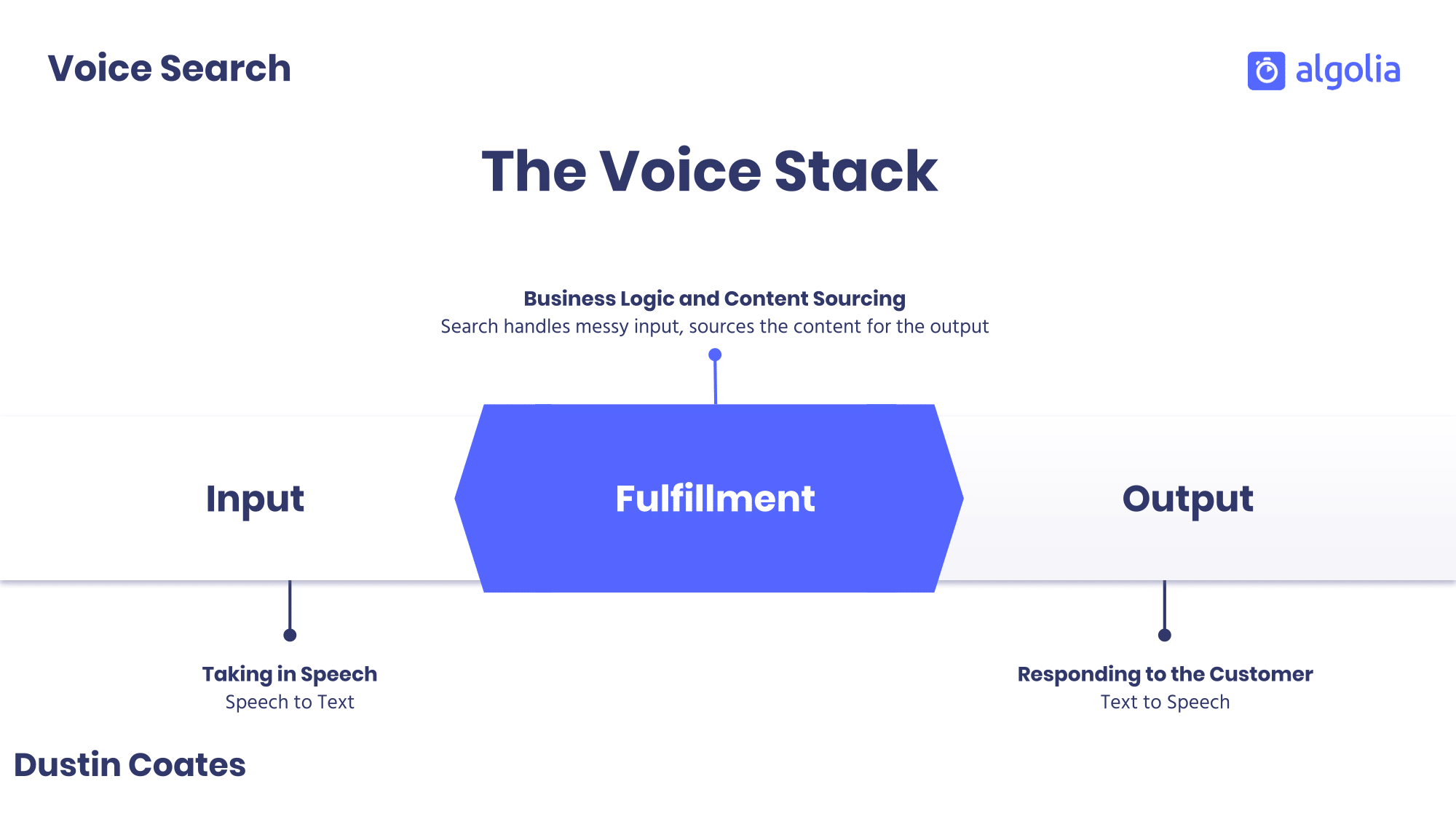

I talk often about the voice stack: the input, the output, and the fulfillment in between. The input represents the incoming request (often speech-to-text), while the output is the application response (often text-to-speech). The fulfillment consists of the intent detection, code, and business logic that takes the messy request and turns it into a natural response. The fulfillment is where the search lives.

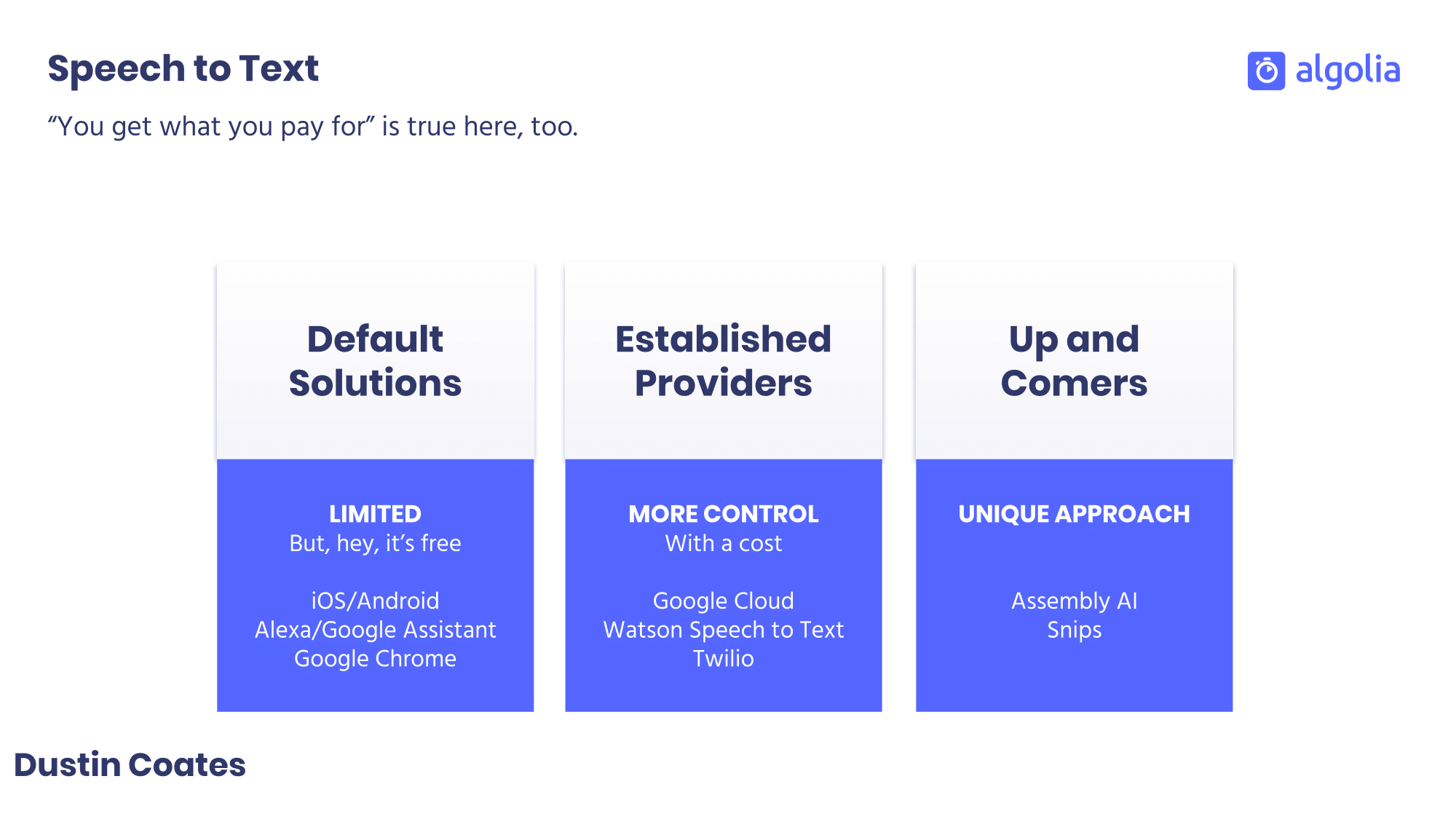

The whole “garbage-in, garbage-out” saying might not be entirely true, but certainly the better the text represents the user’s utterance, the better the search results will be. There are essentially three classes of speech-to-text: the defaults, the established providers, and the up-and-comers.

The default providers are free, but each comes at a cost. Primarily, you have little control over customization. The speech to text on Google Assistant and Alexa is in fact pretty good, but you can’t switch it out if you have a specialized case. We’ve found at Algolia that the built-in iOS speech to text can be good on sentences and longer phrases, but suffers on keyword-based voice search. For in-browser speech to text, you have no choice but to pull in a third-party API, unless you only want to support Chrome. As a Firefox user, I ask that you not do this.

The third-party APIs are numerous, and you have many established providers, like Google Cloud, Watson Speech to Text, Nuance, and even Twilio. Finally, you have some newcomers with unique approaches, such as Assembly AI and Snips. I want to call out Snips because of what they call “privacy by design” speech to text. Their service lives on-device, either on mobile or IoT. This lets developers configure each use case differently, and the speech to text even learns from user behavior. If I’m always searching for Ke$ha, a Snips-powered application will prioritize that over “Cash,” even if I mumble.

Once the speech to text engine has provided text (that is, a query), the search engine needs to do something with it.

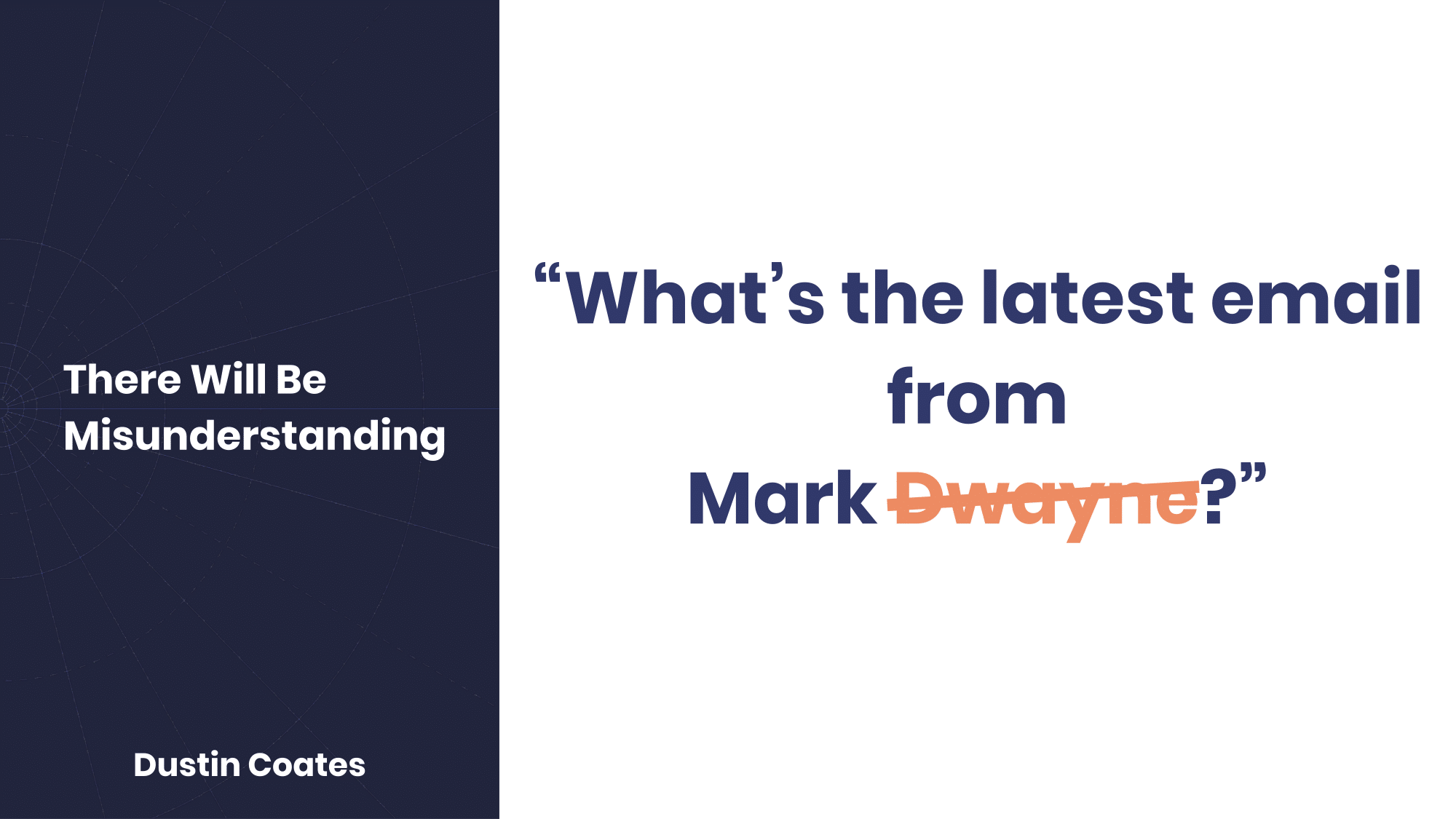

We need to accept that there will be misunderstandings. Speech to text is good and getting better. Most engines hover at about 95% accuracy, which is actually better than human listening! But it’s still not as reliable as a typed query.

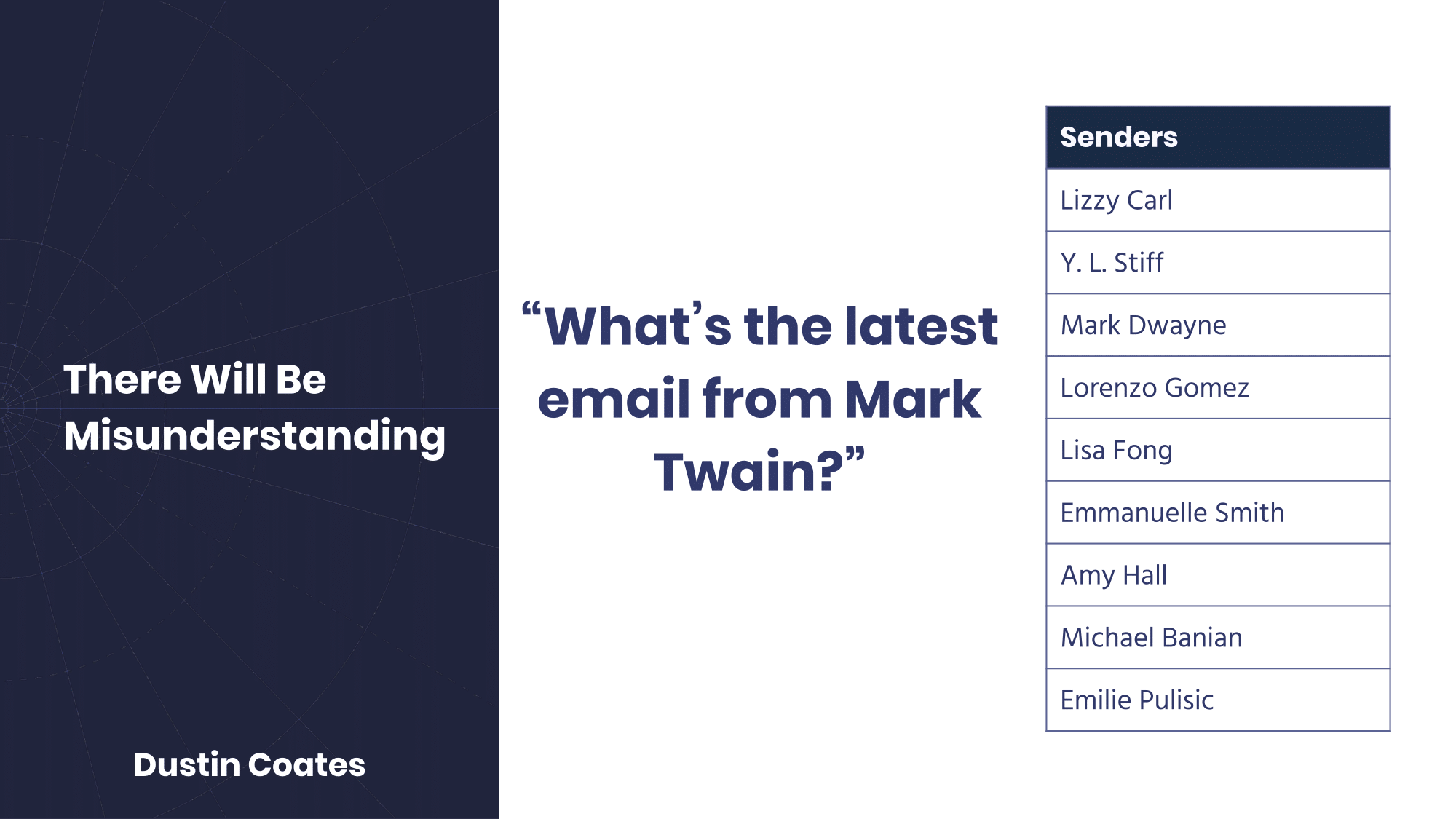

Maybe our searcher is asking for an email from Mark Dwayne, but the text that comes back is for the renowned American author Mark Twain.

Another compounding factor is that searchable items are enums. That is to say that a user is always searching through a constrained set of records. Taken to the extreme, searching through an index of only The Beatles is a constrained set of four. Problems with relevancy come about when users search for, or it seems like they’re searching for, a value outside of that constrained set. Mark Twain is nowhere to be found in this list of senders.

And, yet, a match must be found. Let’s look at some common approaches.

If you’re on any voice development message board or chat group, you’ll hear someone say, at some point, “Dude, you’ve got to use fuzzy matching.” But what even is fuzzy matching? One approach is the Levenshtein Distance, also known as the minimum edit distance. It represents the minimum number of single-character changes (insertions, deletions, or substitutions) needed to turn one string (e.g., a word) into another. Developers use it for a number of applications in computer science; two of the most common are spell checkers and “Did you mean?” functionality.

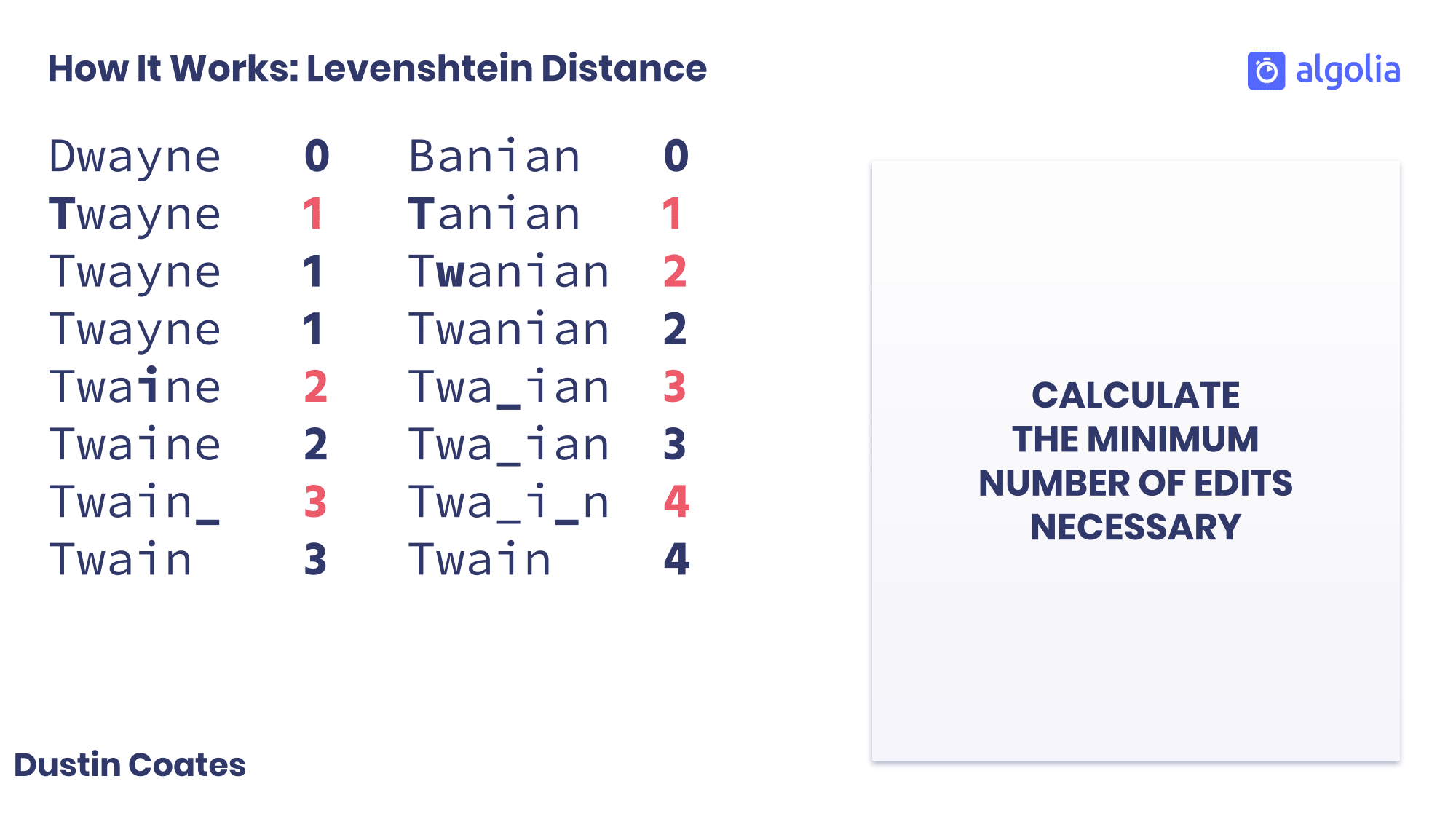

The Levenshtein Distance works by taking two different strings and going character by character to count the number of necessary changes. To change Dwayne to Twain, we start with the D and change it to a T. That’s one change. The letters w and a require no change, but y changes to i. That’s two changes. The n is fine, but the final e is axed. Three changes.

Changing Banian to Twain requires four changes: B to T, addition of a w, removal of an n, and removal of an a. Because Dwayne has a smaller minimum edit distance than Banian, we can say that Twain is most similar to Dwayne. If we had to rank these two names in a search, Dwayne would appear first.
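To make the walk-through concrete, here is a minimal JavaScript sketch of the Levenshtein Distance using the classic dynamic-programming table, plus a hypothetical `closestMatch` helper that picks the nearest value from a constrained set, such as the senders in an inbox. Both strings are lowercased first, a design choice so that capitalization doesn’t count as an edit.

```javascript
// Levenshtein (minimum edit) distance: the fewest single-character
// insertions, deletions, or substitutions that turn `a` into `b`.
// Keeps only two rows of the dynamic-programming table.
function levenshtein(a, b) {
  a = a.toLowerCase();
  b = b.toLowerCase();
  let previous = Array.from({ length: b.length + 1 }, (_, j) => j);
  for (let i = 1; i <= a.length; i++) {
    const current = [i];
    for (let j = 1; j <= b.length; j++) {
      const substitution = previous[j - 1] + (a[i - 1] === b[j - 1] ? 0 : 1);
      const deletion = previous[j] + 1;
      const insertion = current[j - 1] + 1;
      current.push(Math.min(substitution, deletion, insertion));
    }
    previous = current;
  }
  return previous[b.length];
}

// Hypothetical helper: pick the candidate from a constrained set
// with the smallest distance to what speech to text heard.
function closestMatch(heard, candidates) {
  let best = null;
  let bestDistance = Infinity;
  for (const candidate of candidates) {
    const distance = levenshtein(heard, candidate);
    if (distance < bestDistance) {
      best = candidate;
      bestDistance = distance;
    }
  }
  return best;
}

console.log(levenshtein("Dwayne", "Twain")); // 3
console.log(levenshtein("Banian", "Twain")); // 4
console.log(closestMatch("Twain", ["Banian", "Dwayne"])); // Dwayne
```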

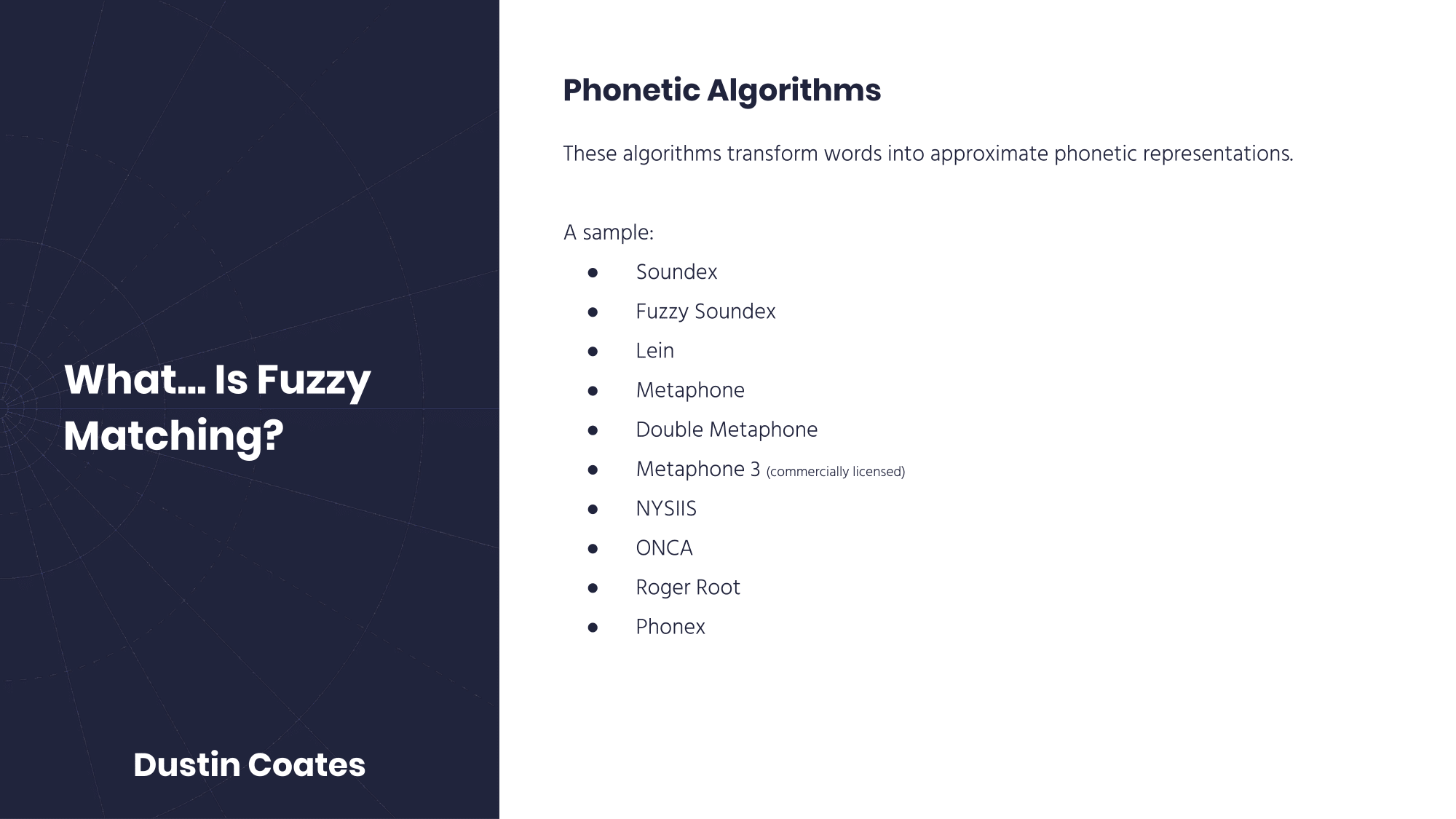

Another form of fuzzy matching that developers commonly recommend is the use of phonetic algorithms. These algorithms take a word and create an approximate phonetic representation of it. Some algorithms serve a specialized purpose (Caverphone, for instance, is geared toward New Zealand names and pronunciations), and others exist because developers felt they could improve on earlier algorithms. That’s how we end up with Metaphone, Double Metaphone, and Metaphone 3.

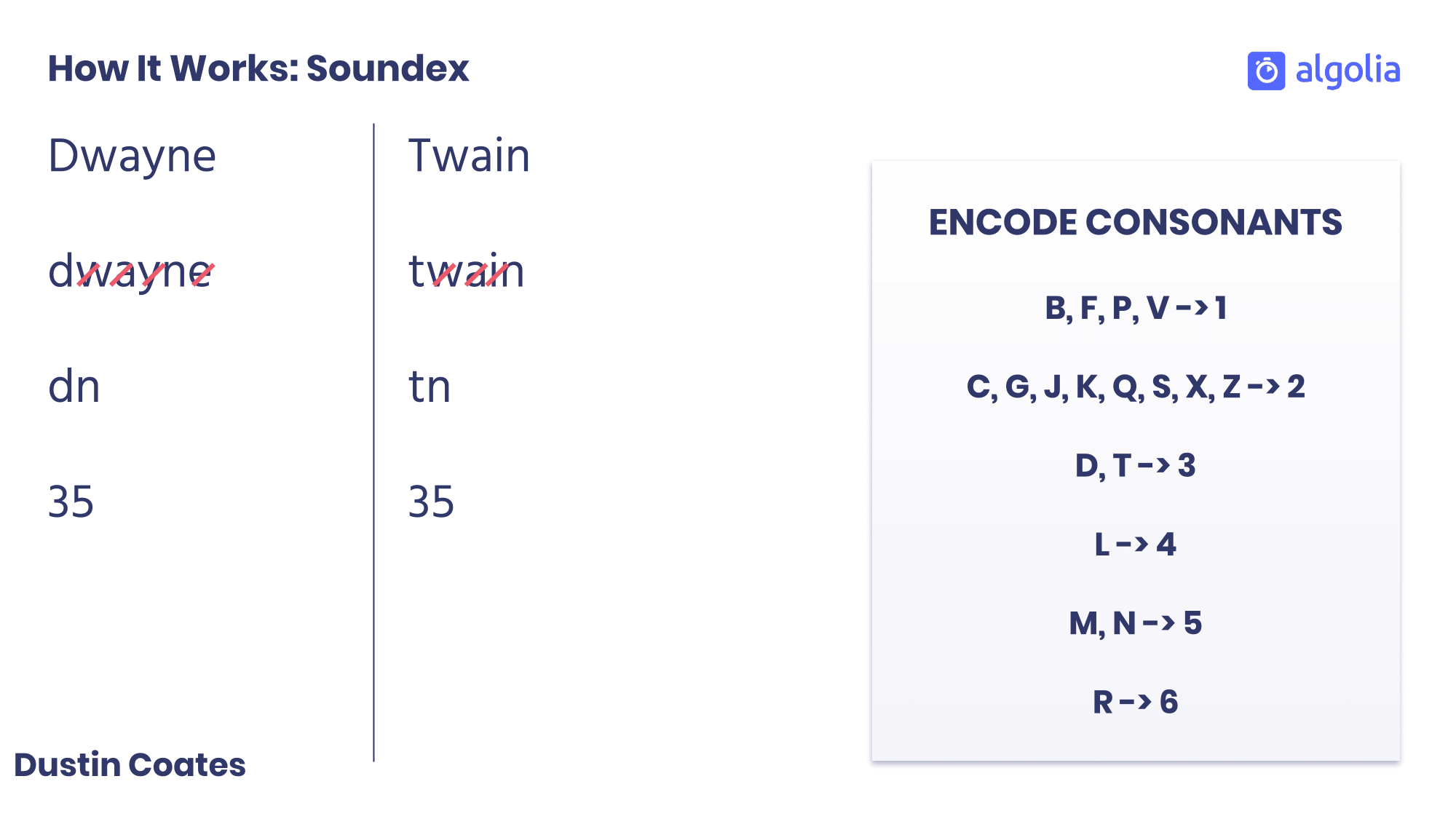

One of the most well-known phonetic algorithms is Soundex. We’ll examine how it works by looking at each step.

First, we keep the first letter and drop every subsequent vowel (y included), h, and w. After this step, we have dn for Dwayne and tn for Twain.

Then we encode the consonants into numbers. The encodings will group letters that sound roughly the same. The number five represents the near-twins of m and n, and three stands in for d and t. After encoding, Dwayne and Twain are both represented by three-five.

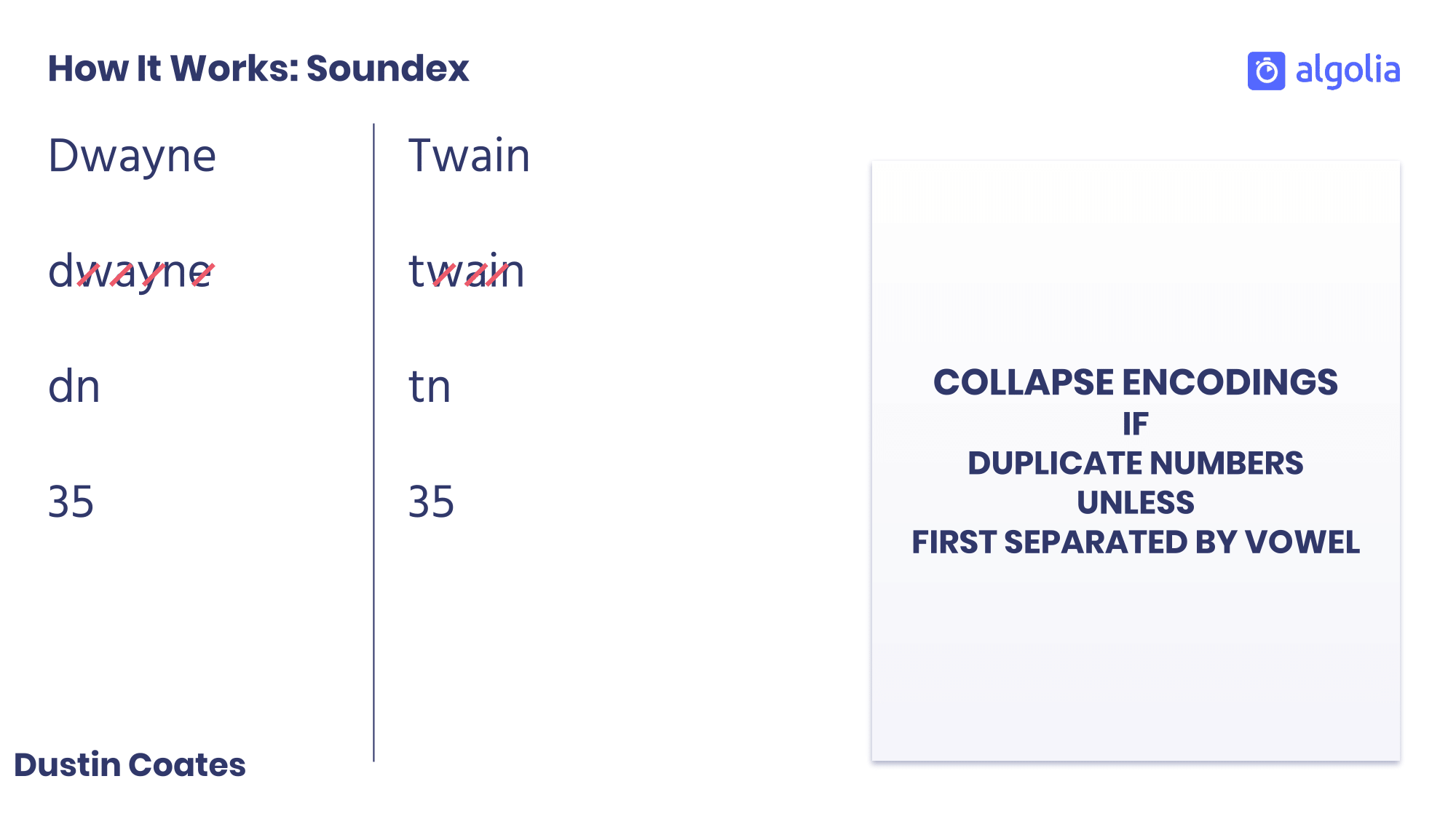

The next step is to collapse two identical adjacent numbers into one, unless the letters they came from were separated by a vowel. This doesn’t apply to Dwayne and Twain, so we’ll skip it.

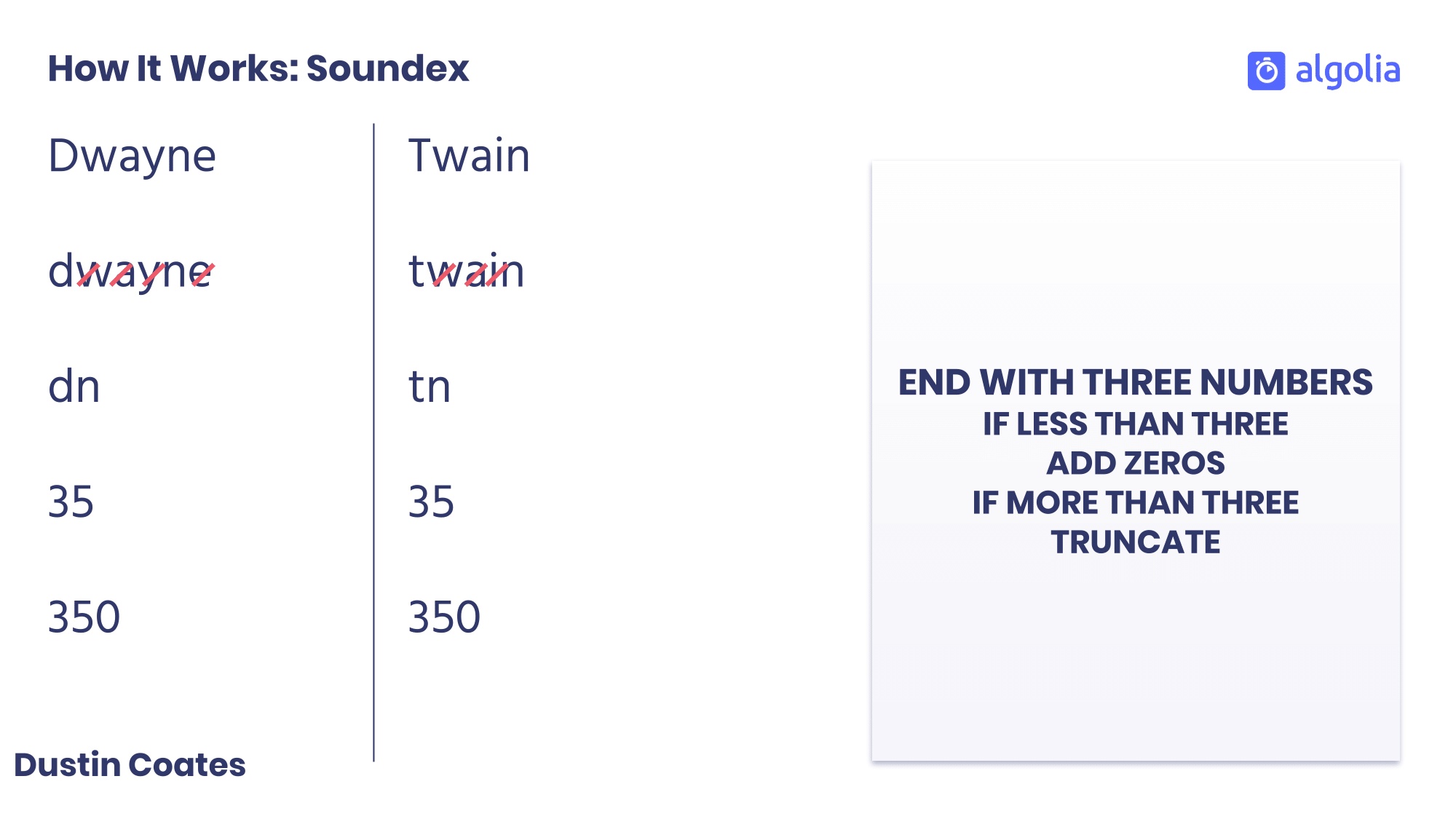

The final step requires us to end with just three numbers. If there are more than three, we chop off the end. If there are fewer, we add zeroes.

At the end, both Twain and Dwayne end up as three-five-zero. They are approximately, phonetically the same. Dwayne, Twain. Dwayne, Twain. Twain, Dwayne.

Checks out.
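The steps above can be sketched in JavaScript. `soundexVariant` follows the walk-through, encoding the first letter as a digit too. For comparison, `americanSoundex` implements standard American Soundex, which keeps the first letter as a letter. Under that standard, Dwayne and Twain become D500 and T500 and no longer match, which is worth knowing before relying on Soundex to bridge a misheard first consonant.

```javascript
// Soundex digit groups: letters that sound alike share a digit.
// Vowels, y, h, and w have no code.
const CODES = {
  b: "1", f: "1", p: "1", v: "1",
  c: "2", g: "2", j: "2", k: "2", q: "2", s: "2", x: "2", z: "2",
  d: "3", t: "3",
  l: "4",
  m: "5", n: "5",
  r: "6",
};

const normalize = word => word.toLowerCase().replace(/[^a-z]/g, "");

// Encode every coded letter, including the first, and collapse adjacent
// duplicates. A vowel (or y) between two letters keeps both digits;
// h and w do not separate them.
function encodeDigits(letters) {
  const digits = [];
  let previous = null;
  for (const ch of letters) {
    const code = CODES[ch];
    if (code === undefined) {
      if (ch !== "h" && ch !== "w") previous = null;
      continue;
    }
    if (code !== previous) digits.push(code);
    previous = code;
  }
  return digits.join("");
}

// The variant walked through above: exactly three digits, first letter included.
function soundexVariant(word) {
  return encodeDigits(normalize(word)).slice(0, 3).padEnd(3, "0");
}

// Standard American Soundex: the first letter stays a letter, followed by three digits.
function americanSoundex(word) {
  const letters = normalize(word);
  if (letters.length === 0) return "";
  const digits = encodeDigits(letters);
  // Drop the first letter's own digit, if it has one, since the letter replaces it.
  const rest = CODES[letters[0]] !== undefined ? digits.slice(1) : digits;
  return letters[0].toUpperCase() + rest.slice(0, 3).padEnd(3, "0");
}

console.log(soundexVariant("Dwayne"), soundexVariant("Twain")); // 350 350
console.log(americanSoundex("Dwayne"), americanSoundex("Twain")); // D500 T500
```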

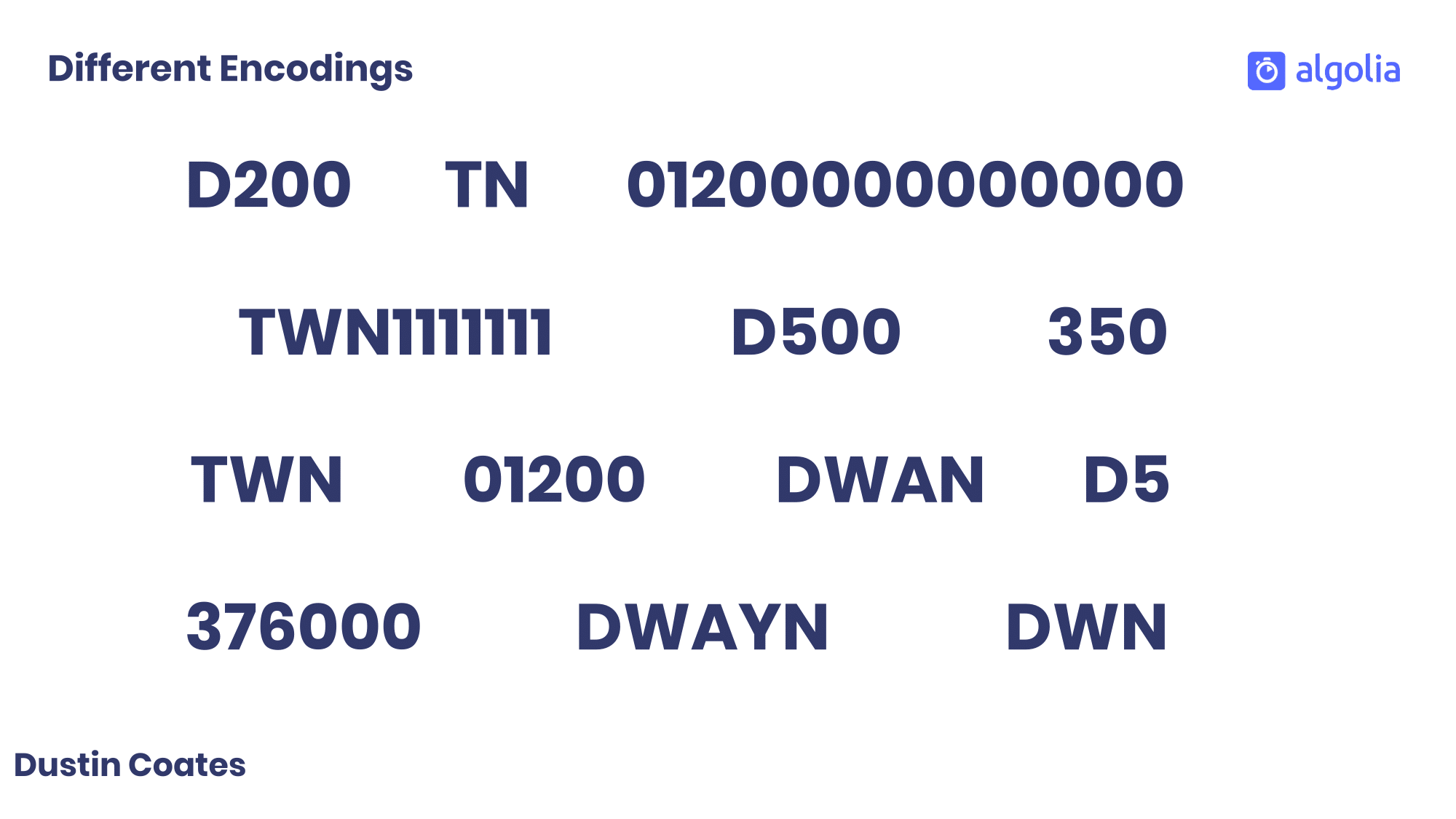

Here we see different phonetic encodings for the name Dwayne. Some of them are just numbers, some just letters, and some are a mixture of both. There’s a wide range of encodings.

I don’t like assuming that something’s going to work. I’d prefer to see some data. I put together an experiment that would test whether phonetic algorithms improve relevancy and cover for speech to text errors.

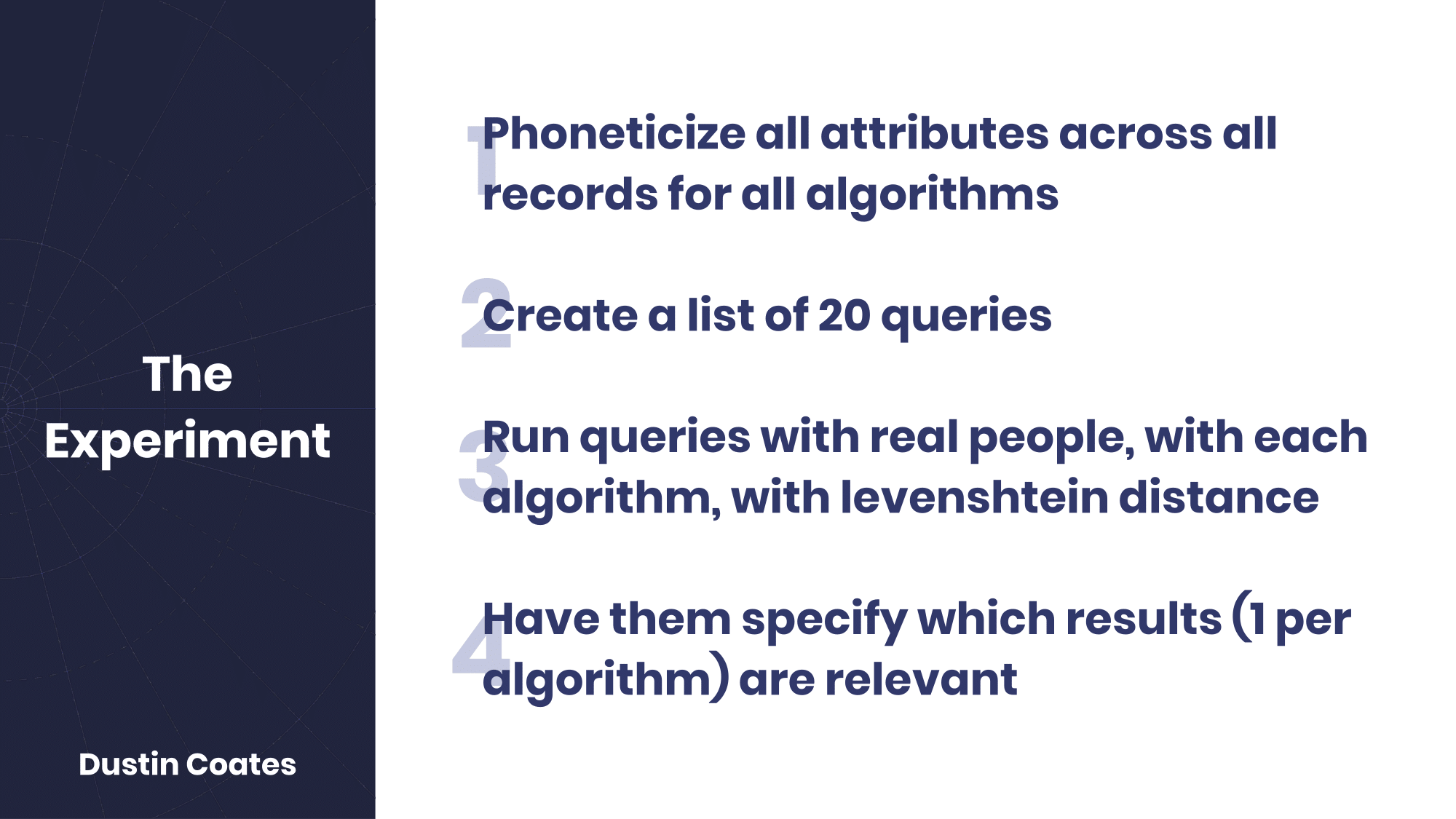

- I started with a search index of tens of thousands of books and phoneticized each searchable attribute: title, name, category, and description.

- Then I created a list of 20 queries that I knew matched products inside the index.

- I then had real people speak the queries into Google Chrome’s default speech to text and, for each phonetic algorithm, performed a search by encoding the query words as the speech to text returned them.

- Finally, after sorting results by the number of matching phoneticized query words and then by the Levenshtein Distance, the web page showed one result for each algorithm (plus one for a query with the raw text) in a randomized order, without labels.

- Users selected all of the results that were relevant.
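The ranking step from that experiment can be sketched as follows. This assumes each record is a single string of text and that the Levenshtein Distance is measured between the whole query and the whole record; the post doesn’t specify which strings were compared. The `encode` parameter is a stand-in for whichever phonetic algorithm is under test, not a specific library.

```javascript
// Levenshtein distance with a two-row dynamic-programming table.
function levenshtein(a, b) {
  let previous = Array.from({ length: b.length + 1 }, (_, j) => j);
  for (let i = 1; i <= a.length; i++) {
    const current = [i];
    for (let j = 1; j <= b.length; j++) {
      current.push(Math.min(
        previous[j - 1] + (a[i - 1] === b[j - 1] ? 0 : 1), // substitute
        previous[j] + 1,                                    // delete
        current[j - 1] + 1                                  // insert
      ));
    }
    previous = current;
  }
  return previous[b.length];
}

const words = text => text.toLowerCase().split(/\s+/).filter(Boolean);

// Sort records by how many encoded query words they contain (descending),
// breaking ties by edit distance to the raw query (ascending).
function rankRecords(query, records, encode) {
  const queryCodes = words(query).map(encode);
  return records
    .map(record => {
      const recordCodes = new Set(words(record).map(encode));
      return {
        record,
        matches: queryCodes.filter(code => recordCodes.has(code)).length,
        distance: levenshtein(query.toLowerCase(), record.toLowerCase()),
      };
    })
    .sort((x, y) => y.matches - x.matches || x.distance - y.distance);
}

// Usage with an identity encoder, which ranks on the raw text.
const ranked = rankRecords("tale of two cities", ["Great Expectations", "A Tale of Two Cities"], w => w);
console.log(ranked[0].record); // A Tale of Two Cities
```

Showing one result per algorithm then amounts to taking the first element of each ranking.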

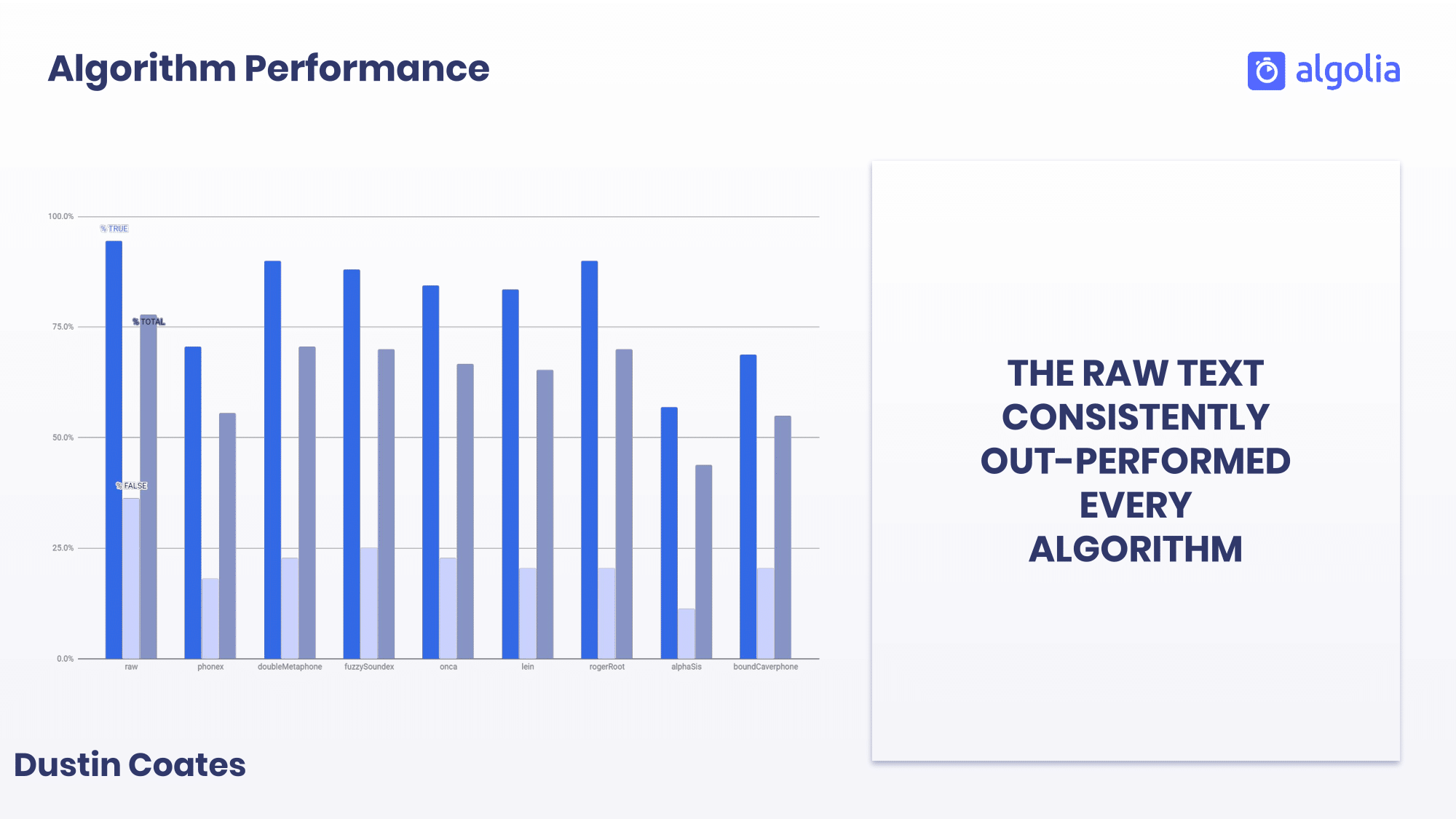

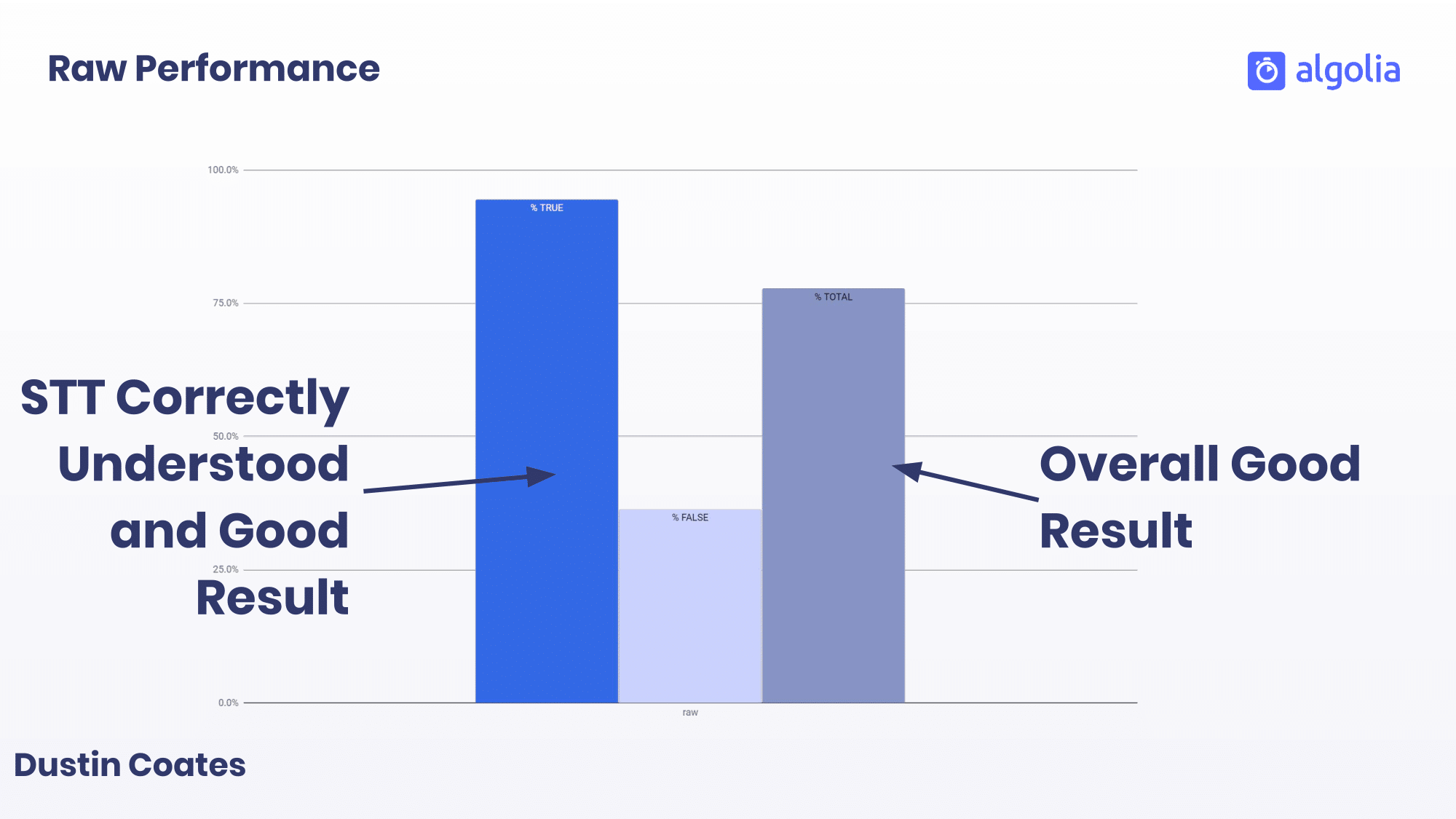

What I found was that, even when there were speech to text errors, the raw text outperformed every phonetic algorithm, with a success rate of over 75% without any relevance optimization. The next closest performer was Double Metaphone, with a success rate of around 70%.

Remember, there was no relevance tweaking; with five minutes of work, you could expect an effectively 100% success rate from the raw text.

What this shows is that phonetic algorithms are not a solution for correcting speech to text errors, at least with a data set the size of a bookstore. They could be a solution for a small data set, such as the teachers in an elementary school.

I also wondered whether phonetic algorithms could be a useful backup when the raw text fails. I haven’t included that data here, but it showed situations where the speech to text engine made an error and a phonetic algorithm returned a successful result when the raw text didn’t. Here, too, Double Metaphone performed best, but the uplift was minimal, and this approach raises many questions. Namely, how do you determine whether the raw text isn’t providing the right results, so that your code should fall back to an algorithm, or whether you should tell the user that you found no result? We’ll come back to that in a moment.
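One way to wire up that fallback is sketched below, under a loud assumption: it treats “the raw text returned nothing” as the signal to try a phonetic search, which is only one possible answer to the question above. The `encode` function is a placeholder for a real phonetic algorithm such as Double Metaphone; the hypothetical `toyEncode` in the usage lines simply strips vowels and exists only to make the example run.

```javascript
const words = text => text.toLowerCase().split(/\s+/).filter(Boolean);

// Return the titles that contain every query word after normalizing both sides.
function searchWords(queryWords, titles, normalize) {
  const wanted = queryWords.map(normalize);
  return titles.filter(title => {
    const titleWords = words(title).map(normalize);
    return wanted.every(word => titleWords.includes(word));
  });
}

// Try the raw text first; fall back to phonetic matching only when it finds nothing.
function searchWithFallback(query, titles, encode) {
  const queryWords = words(query);
  const raw = searchWords(queryWords, titles, word => word);
  if (raw.length > 0) return { source: "raw", results: raw };
  return { source: "phonetic", results: searchWords(queryWords, titles, encode) };
}

// Toy encoder for illustration only: drop vowels, y, and w.
const toyEncode = word => word.replace(/[aeiouyw]/g, "");

const titles = ["The Adventures of Tom Sawyer", "Treasure Island"];
const result = searchWithFallback("tom soyer", titles, toyEncode);
console.log(result.source); // phonetic
```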

We now know that the phonetic algorithms aren’t going to magically make our relevance better. What do we do, then, with the query?

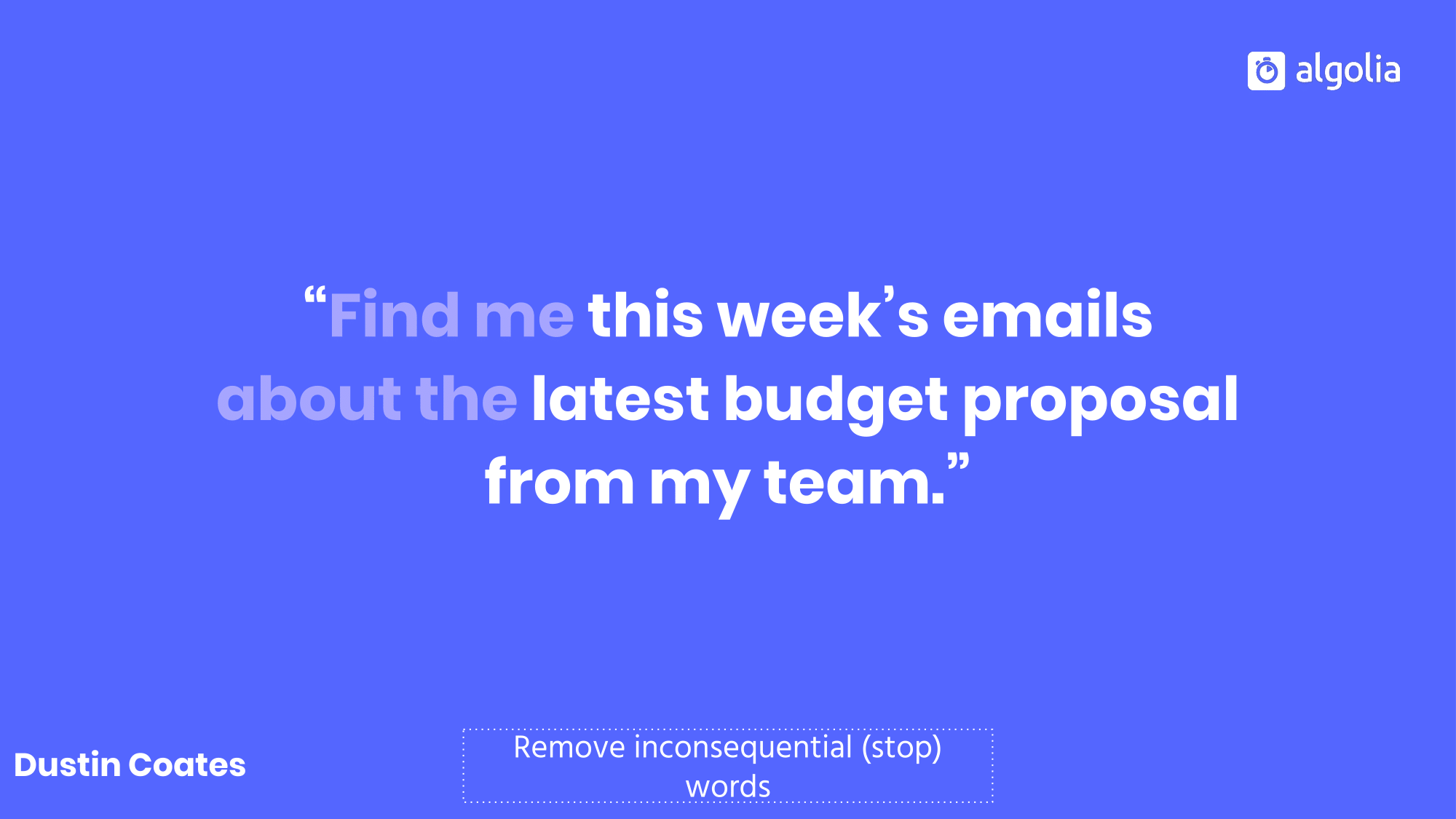

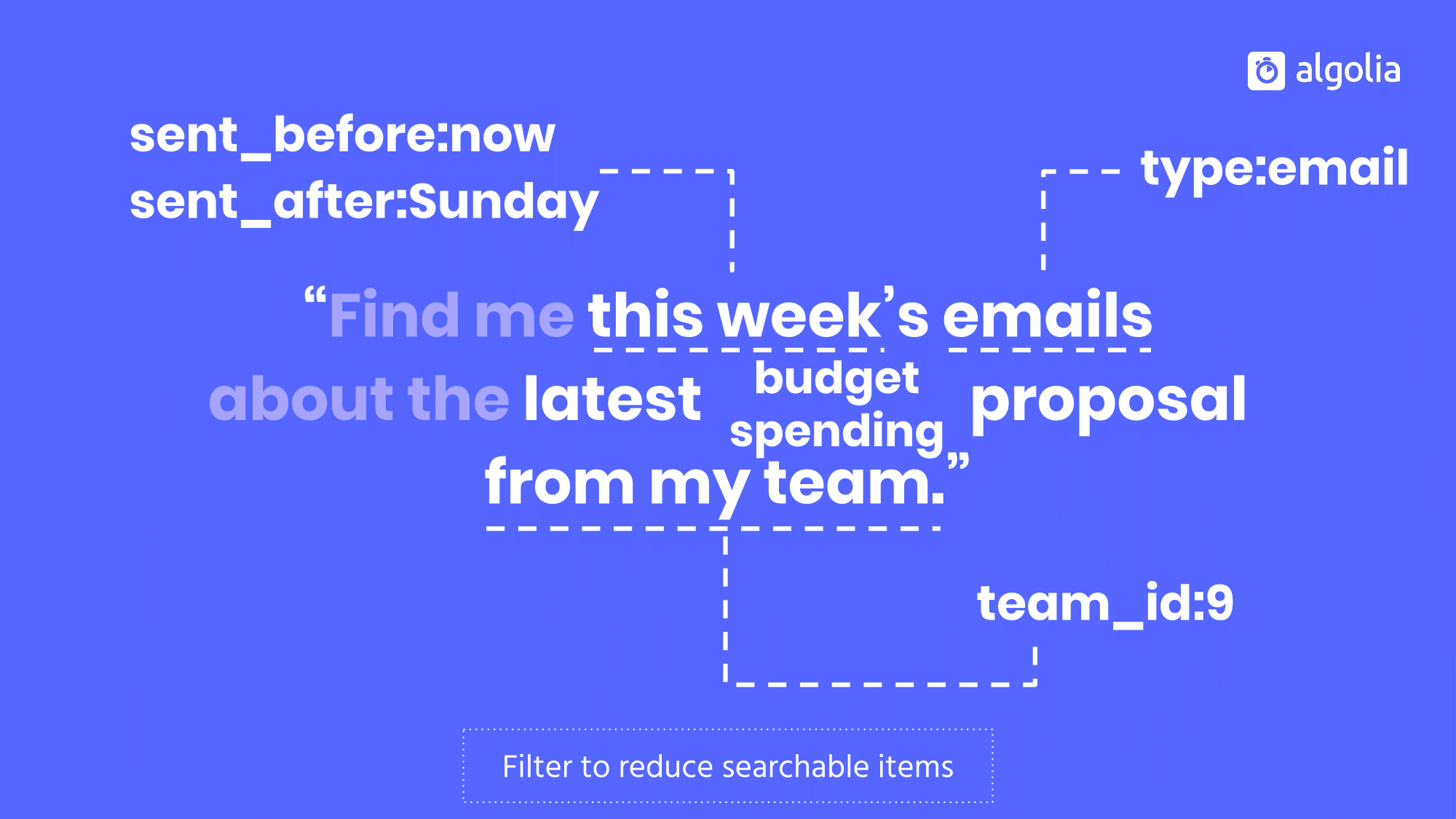

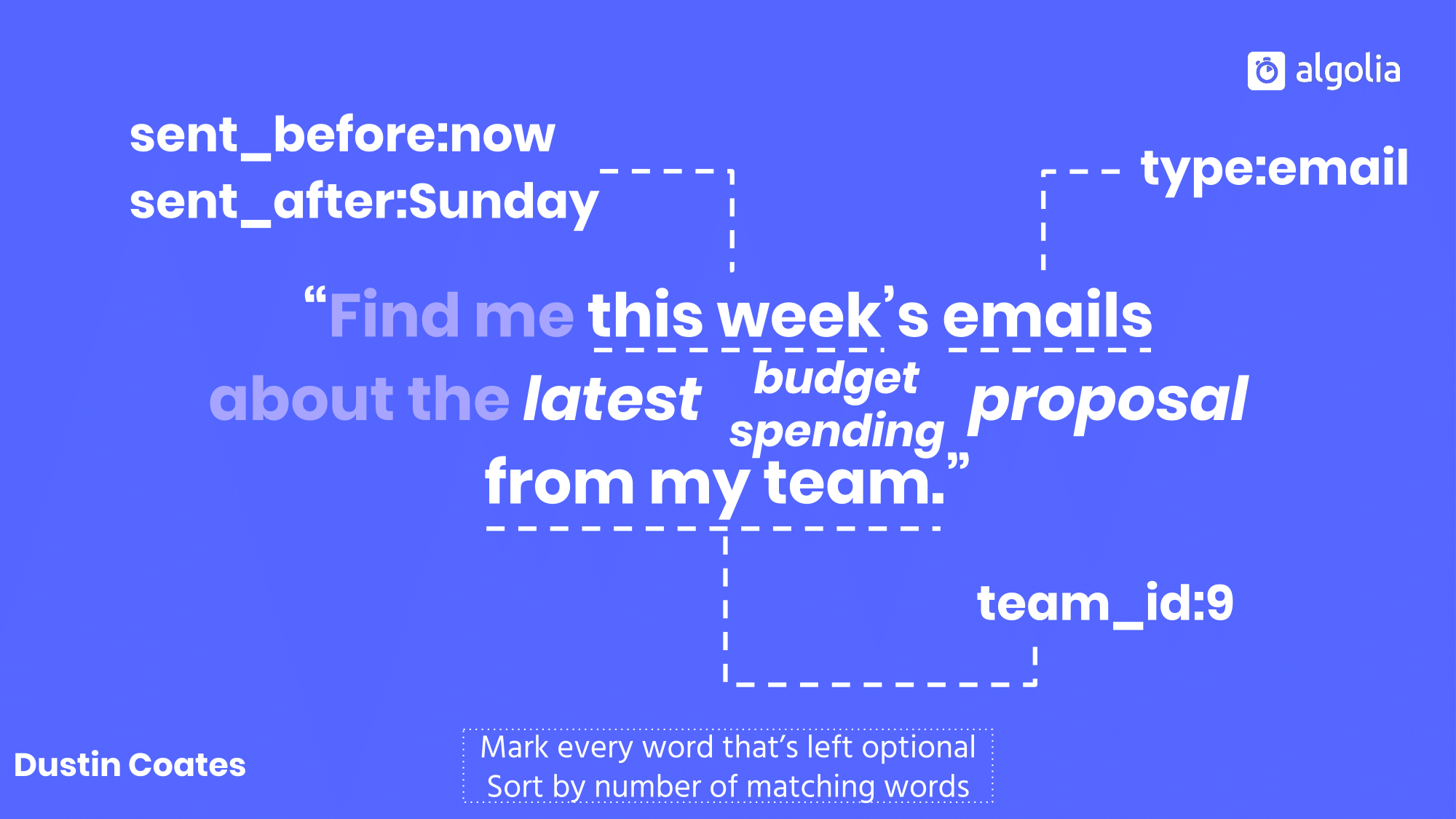

Let’s use the example of searching for emails about the latest budget proposal.

We first want to remove words that don’t add much meaning to the query. In the search biz, we call these stop words. The hallmark of conversational search is the move from keyword-based search to a wordier environment where people throw in more than just keywords. Now, you’ve probably heard people say that conversational search is difficult because these stop words have meaning and are difficult to parse. Show me “an email” versus show me “the emails.” Sure, these two constructions are asking for different things. But the complexity of parsing that difference outweighs the benefit. A good UX will wave away most of the differences between these two.

So just ignore them, and act like they aren’t there.
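Stop-word removal can be as simple as a set lookup. This sketch uses a short, hypothetical stop-word list and a made-up phrasing of the example query; real lists are longer and language-specific, and you would tune yours against your own queries.

```javascript
// Hypothetical stop-word list for illustration.
const STOP_WORDS = new Set(["show", "me", "the", "a", "an", "about", "for", "of", "to", "please"]);

// Lowercase, split on whitespace, and keep only the meaningful words.
function removeStopWords(query) {
  return query
    .toLowerCase()
    .split(/\s+/)
    .filter(word => word.length > 0 && !STOP_WORDS.has(word));
}

console.log(removeStopWords("Show me the emails about the latest budget proposal").join(" "));
// emails latest budget proposal
```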

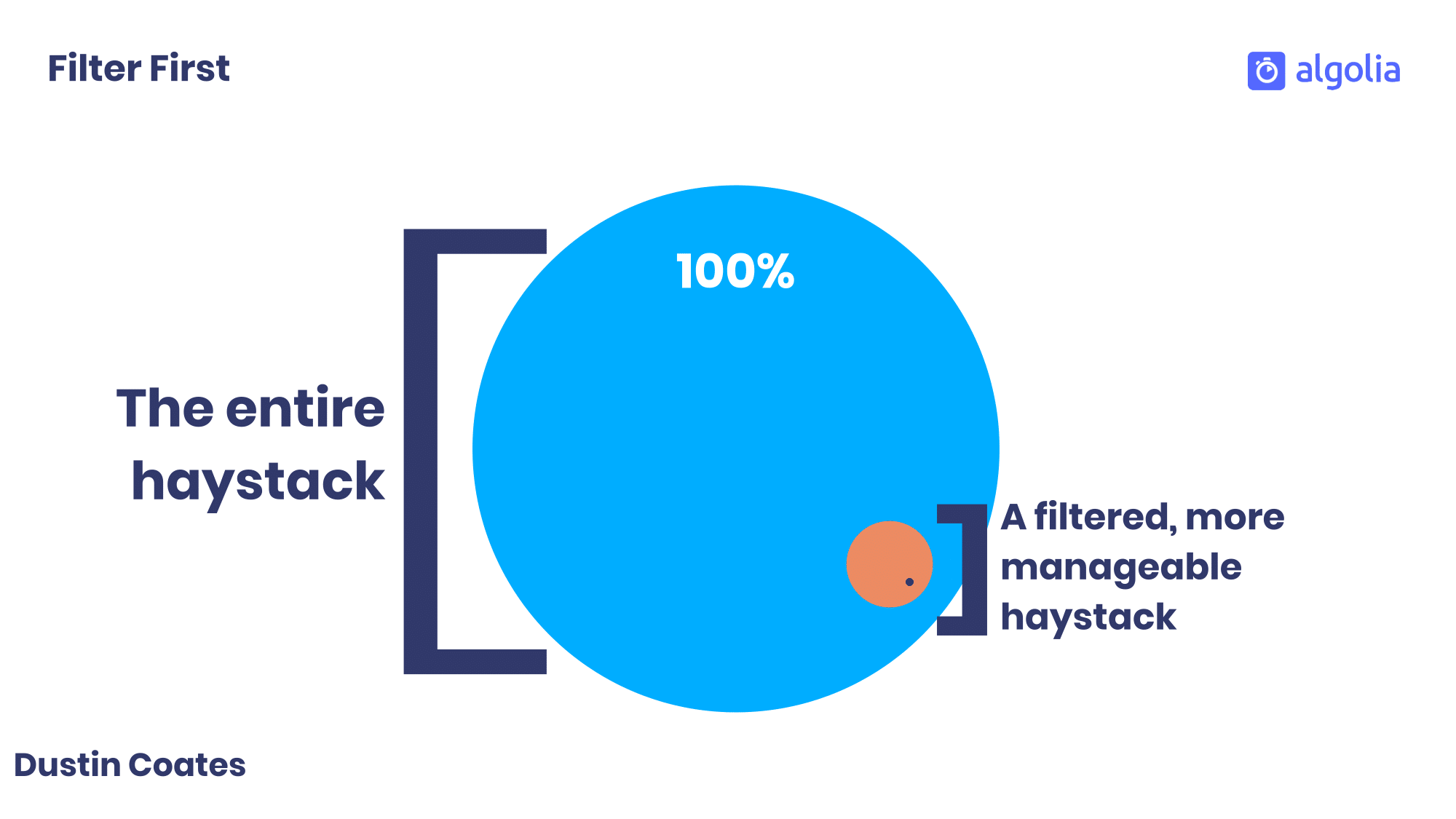

We’re probably searching through thousands of emails. That’s a huge corpus and we’re searching for what’s most relevant. There’s a term for this… where you’re trying to find a small thing in a huge pile of other small things that are very similar in construction. A needle in a haystack, I think…

Finding a needle in a haystack is impossible. Finding a needle among two hundred haystalks is difficult but surmountable. And plucking a needle from just five haystalks is trivial. As it is with search. If we can reduce the haystack through filtering, then the full-text searching becomes much easier and more precise.

Three ways to reduce the haystack through filtering are to look for filterable values within the query, to apply personalization, and to use context.

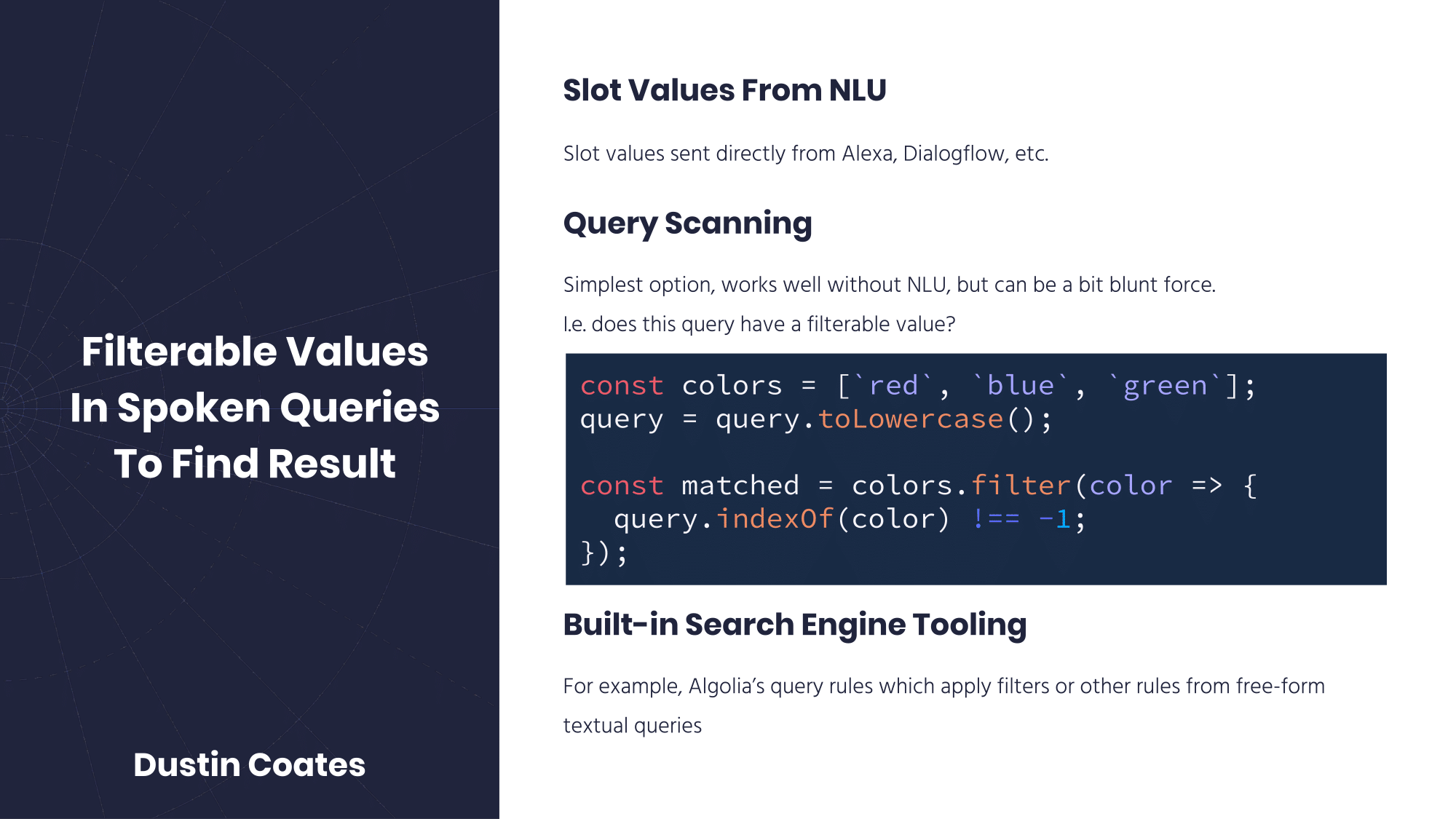

There are a number of ways to find filterable values in spoken queries. If you’re using an NLU engine such as Alexa, Dialogflow, or others, you can capture them as slot values: anticipate where in an utterance users might say the values, and train the NLU with the values from the search index.

If you’re not using an NLU and the filterable values are a small, constrained set, query scanning can work well, even if it’s a brute-force method. This approach checks every query word individually against a defined list of filterable values, collecting the ones that match.

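```javascript
const colors = [`red`, `blue`, `green`];

// Hypothetical spoken query, as returned by speech to text
let query = `Show me the red shoes`;
query = query.toLowerCase();

// Check each query word against the list. Matching whole words
// (rather than substrings) keeps "red" from matching "reduce".
const queryWords = query.split(/\s+/);
const matched = colors.filter(color => queryWords.includes(color));
// matched is ["red"]
```

Finally, there’s built-in search engine tooling. One example is Algolia’s query rules, which can apply filters or other rules based on textual queries and facet values.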

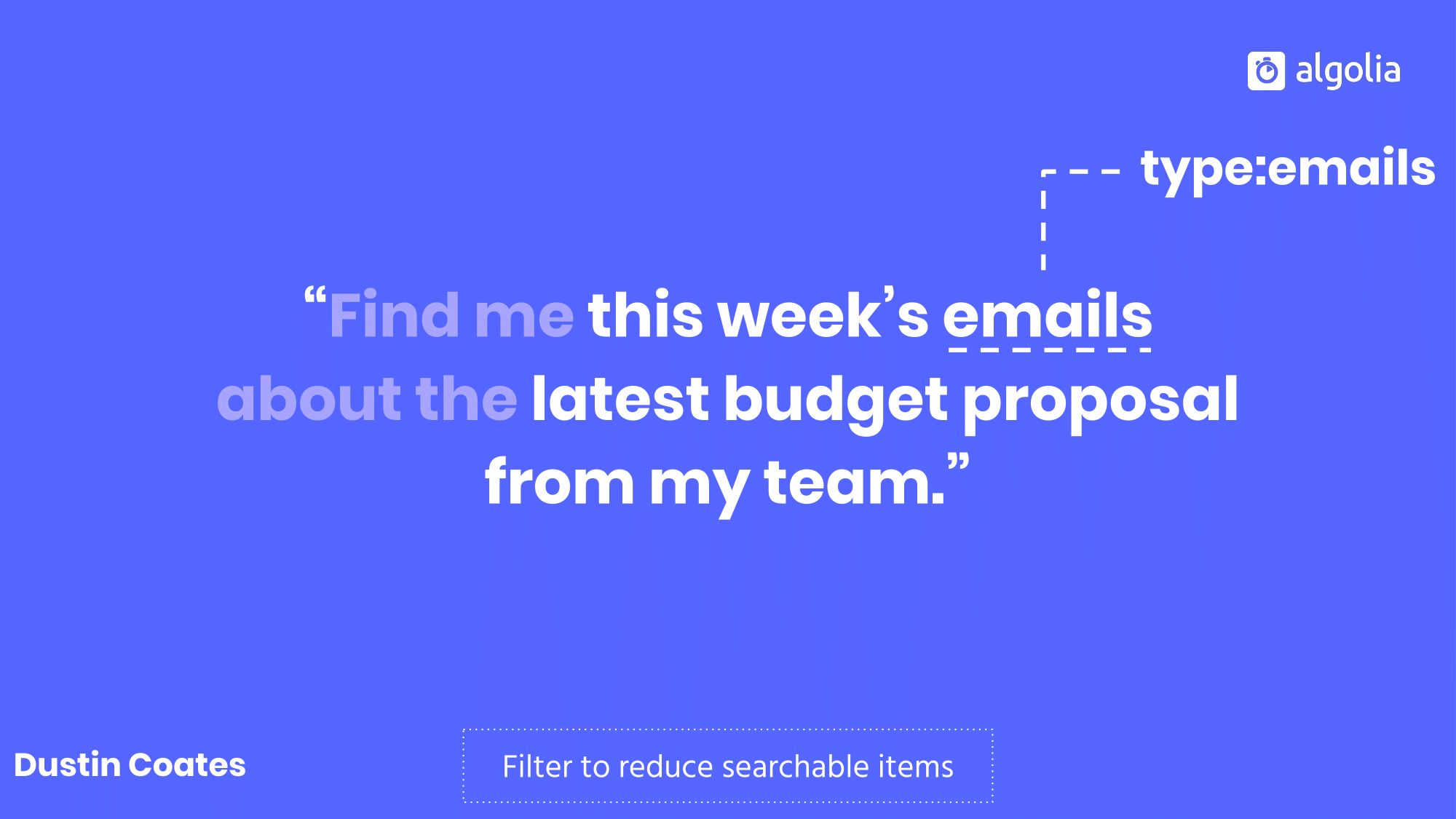

In the example query, we might find a filterable value in the word “emails,” which corresponds to a type. Now we’re no longer searching for the latest budget among documents or chat messages, but only among emails. The haystack is getting smaller.
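Here is a sketch of that idea: pull a type facet out of the query words, filter the records by it, and only then run the full-text match on what’s left. The word-to-type mapping, the record shape (`type`, `subject`), and the sample records are hypothetical.

```javascript
// Hypothetical mapping from spoken words to values of a `type` facet.
const TYPE_WORDS = new Map([
  ["email", "email"], ["emails", "email"],
  ["chat", "chat"], ["chats", "chat"],
  ["document", "document"], ["documents", "document"],
]);

// Separate the first facet word from the words left for full-text search.
function extractTypeFilter(queryWords) {
  let type = null;
  const textWords = [];
  for (const word of queryWords) {
    if (type === null && TYPE_WORDS.has(word)) type = TYPE_WORDS.get(word);
    else textWords.push(word);
  }
  return { type, textWords };
}

// Shrink the haystack with the filter first, then match the remaining words.
function search(queryWords, records) {
  const { type, textWords } = extractTypeFilter(queryWords);
  const haystack = type === null ? records : records.filter(r => r.type === type);
  return haystack.filter(r => {
    const subjectWords = r.subject.toLowerCase().split(/\s+/);
    return textWords.every(word => subjectWords.includes(word));
  });
}

const records = [
  { type: "email", subject: "Latest budget proposal" },
  { type: "chat", subject: "Latest budget proposal" },
  { type: "document", subject: "Budget proposal draft" },
];
console.log(search(["emails", "latest", "budget", "proposal"], records).length); // 1
```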

Personalization and context are two more ways to filter down the haystack.

Remember that our goal is to get the right result for the right user, for which we can use affinities to either filter or boost results. Boosting is useful in situations where personalization signals aren’t as clear, because it reorders results instead of removing them. If I continually search for men’s pants, a clothing store can assume I’m interested in men’s clothing and show me men’s pants next time I search for the generic “pants.” But if I need to buy a gift for my fiancee and search for dresses, I shouldn’t hear that there are no dresses because my affinity for men’s clothing has taken an overbearing precedence.
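A minimal sketch of boosting rather than filtering, assuming each result already carries a text-relevance score (`textScore`) and a `department`. The field names and the boost value are hypothetical. Because the boost only reorders results, a search for dresses still returns dresses even when the shopper’s affinity is for men’s clothing.

```javascript
// Add a boost to results that match one of the user's affinities, then sort.
// Nothing is removed, so results outside the affinity still come back.
function rankWithAffinity(results, affinities, boost = 0.5) {
  return results
    .map(result => ({
      ...result,
      score: result.textScore + (affinities.has(result.department) ? boost : 0),
    }))
    .sort((a, b) => b.score - a.score);
}

const affinities = new Set(["men"]);

const pants = rankWithAffinity(
  [
    { name: "Women's pants", department: "women", textScore: 1 },
    { name: "Men's pants", department: "men", textScore: 1 },
  ],
  affinities
);
console.log(pants[0].name); // Men's pants

const dresses = rankWithAffinity(
  [{ name: "Summer dress", department: "women", textScore: 1 }],
  affinities
);
console.log(dresses.length); // 1
```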

Right result for the right user at the right time. If personalization is about the searcher, context is about everything else surrounding the search. This can be information such as the requests the user has recently made, the time of day or current date, or whether a user is new or returning. In my baseball apps, I use the user’s previous requests when a request isn’t explicit. If a user asks about the Houston Astros a lot and then says “How have they been doing?” I can reasonably infer that “they” means the Astros.
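One way to sketch that kind of context: keep the user’s recent requests, count which team they mention most, and use it when a request only says “they.” The team list and the plain substring matching are simplifying assumptions, not how a production NLU would resolve references.

```javascript
// Hypothetical set of team names the app recognizes.
const TEAMS = ["Astros", "Yankees", "Cubs"];

const mentionedTeam = request =>
  TEAMS.find(team => request.toLowerCase().includes(team.toLowerCase())) || null;

// The team the user has asked about most often in previous requests.
function mostRequestedTeam(history) {
  const counts = new Map();
  for (const request of history) {
    const team = mentionedTeam(request);
    if (team) counts.set(team, (counts.get(team) || 0) + 1);
  }
  let best = null;
  for (const [team, count] of counts) {
    if (best === null || count > counts.get(best)) best = team;
  }
  return best;
}

// Use an explicit team if there is one; otherwise resolve "they"/"them"/"their" from history.
function resolveTeam(request, history) {
  const explicit = mentionedTeam(request);
  if (explicit) return explicit;
  return /\b(they|them|their)\b/i.test(request) ? mostRequestedTeam(history) : null;
}

const history = ["Did the Astros win?", "Astros schedule", "Cubs score"];
console.log(resolveTeam("How have they been doing?", history)); // Astros
```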

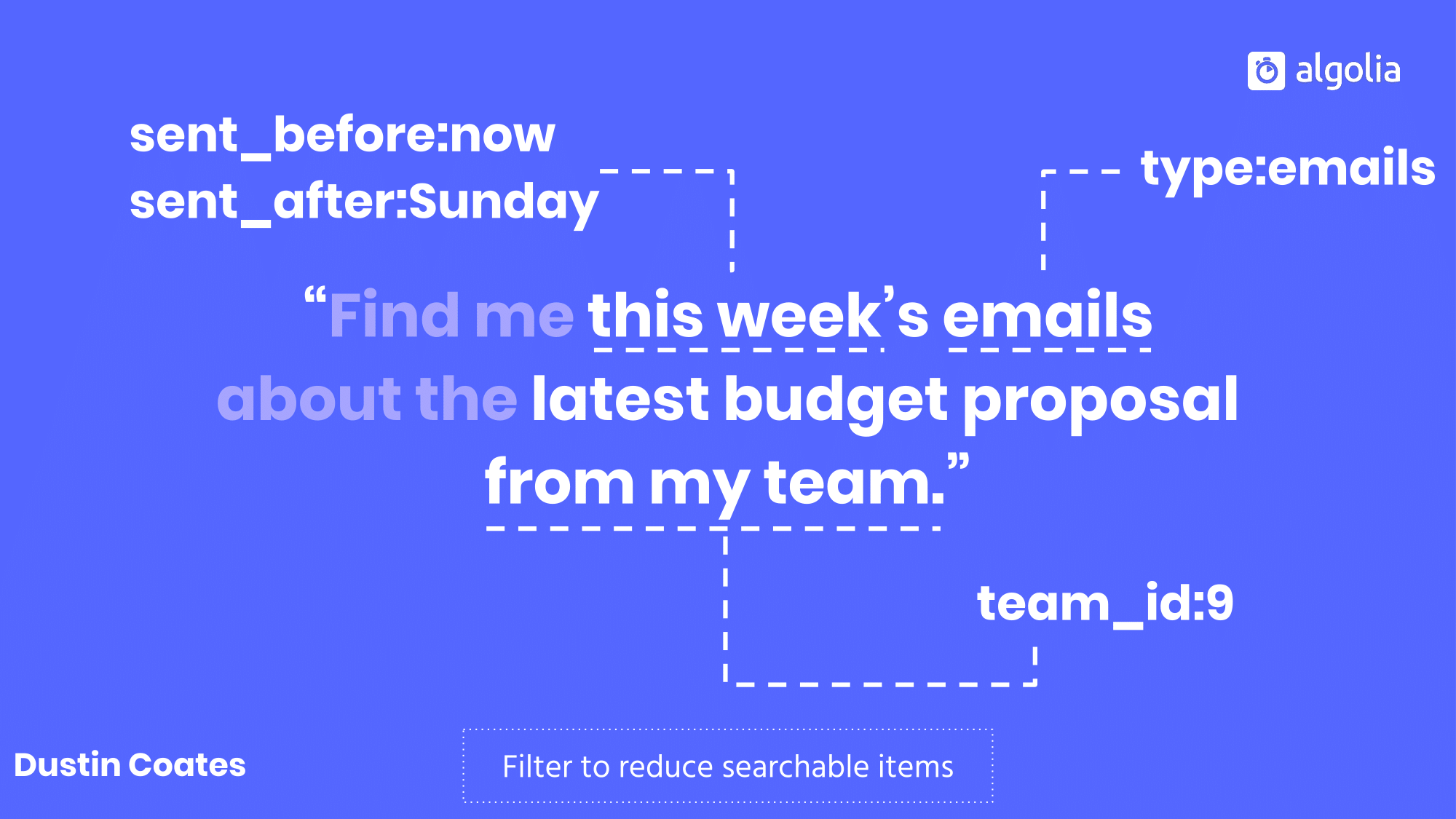

For the example query, that means pulling in the current date to reason about what “this week” means, and using “my team” as a stand-in for the current searcher’s team ID.
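A sketch of turning those two contextual phrases into filters. It assumes weeks start on Monday, that the current date is passed in as `now`, and that the user object has a hypothetical `teamId` field; your app’s notion of “this week” and of teams may differ.

```javascript
// Resolve "this week" to a [start, end) range around `now`, assuming weeks start on Monday.
function thisWeekRange(now) {
  const start = new Date(now);
  start.setHours(0, 0, 0, 0);
  const daysSinceMonday = (start.getDay() + 6) % 7; // getDay(): 0 = Sunday
  start.setDate(start.getDate() - daysSinceMonday);
  const end = new Date(start);
  end.setDate(start.getDate() + 7);
  return { start, end };
}

// Turn contextual phrases into filters. `user.teamId` is a hypothetical field.
function contextFilters(queryWords, user, now) {
  const text = queryWords.join(" ");
  const filters = {};
  if (text.includes("this week")) filters.sentAt = thisWeekRange(now);
  if (text.includes("my team")) filters.teamId = user.teamId;
  return filters;
}

const filters = contextFilters(
  ["emails", "my", "team", "this", "week"],
  { teamId: "team-42" },
  new Date(2018, 6, 25) // Wednesday, July 25, 2018
);
console.log(filters.teamId); // team-42
console.log(filters.sentAt.start.toDateString()); // Mon Jul 23 2018
```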

We know that people are going to ask for the same thing in different ways, with different vocabulary. Preparation and testing ahead of time will go a long way toward anticipating how searchers will interact with the application, but nothing’s better than real usage data. Once you have that data, you can feed what you learn into the search index as synonyms.

Synonyms can be used both for words that mean the same thing (like “pop,” “soda,” and “Coke”) and for speech to text misunderstandings. Because remember…

Our search relevance is often hampered by speech to text performance. By equating “kiss the sky” and “kiss this guy,” we can always route people to the right Hendrix song.

For our example query, “spending” and “budget” are probably equivalent, so we’ll match whether the searcher uses one term or the other.
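Most search engines let you configure synonyms directly, but the idea can be sketched in a few lines: correct known speech-to-text mishearings first, then let each query word match any word in its synonym group. The synonym groups and the correction table are hypothetical; in practice they would come from your analytics.

```javascript
// Hypothetical synonym groups: any word in a group matches the others.
const SYNONYM_GROUPS = [
  ["pop", "soda", "coke"],
  ["budget", "spending"],
];

// Hypothetical speech-to-text corrections learned from analytics.
const PHRASE_CORRECTIONS = new Map([["kiss this guy", "kiss the sky"]]);

function correctPhrases(query) {
  let text = query.toLowerCase();
  for (const [heard, meant] of PHRASE_CORRECTIONS) {
    text = text.split(heard).join(meant);
  }
  return text;
}

// Expand a word into the set of words it should match.
function expandWord(word) {
  const group = SYNONYM_GROUPS.find(g => g.includes(word));
  return new Set(group || [word]);
}

// A record matches when every query word (or one of its synonyms) appears in it.
function matches(query, recordText) {
  const recordWords = new Set(recordText.toLowerCase().split(/\s+/));
  return correctPhrases(query)
    .split(/\s+/)
    .filter(Boolean)
    .every(word => [...expandWord(word)].some(alt => recordWords.has(alt)));
}

console.log(matches("latest spending proposal", "The latest budget proposal")); // true
console.log(correctPhrases("Play kiss this guy")); // play kiss the sky
```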

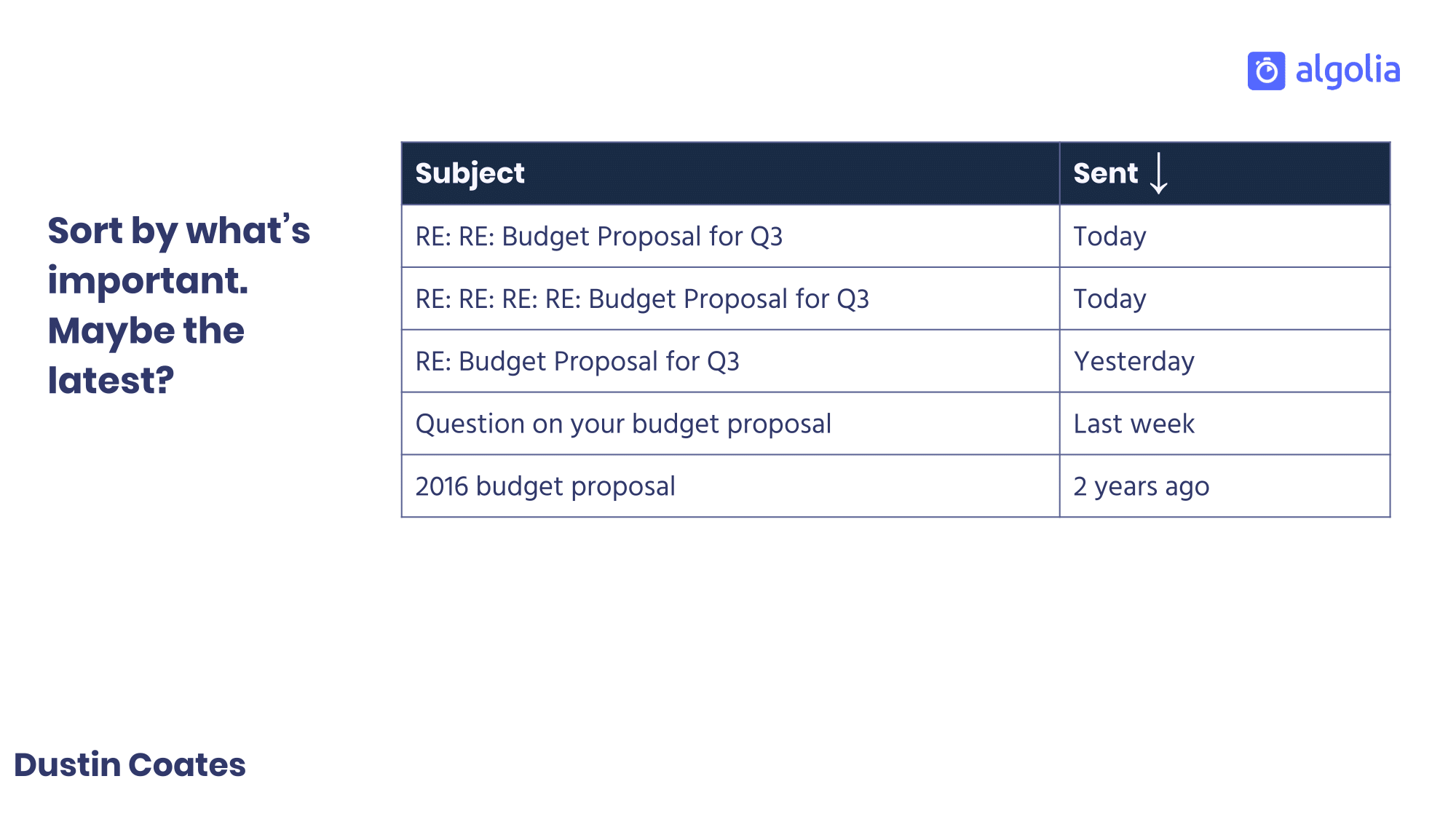

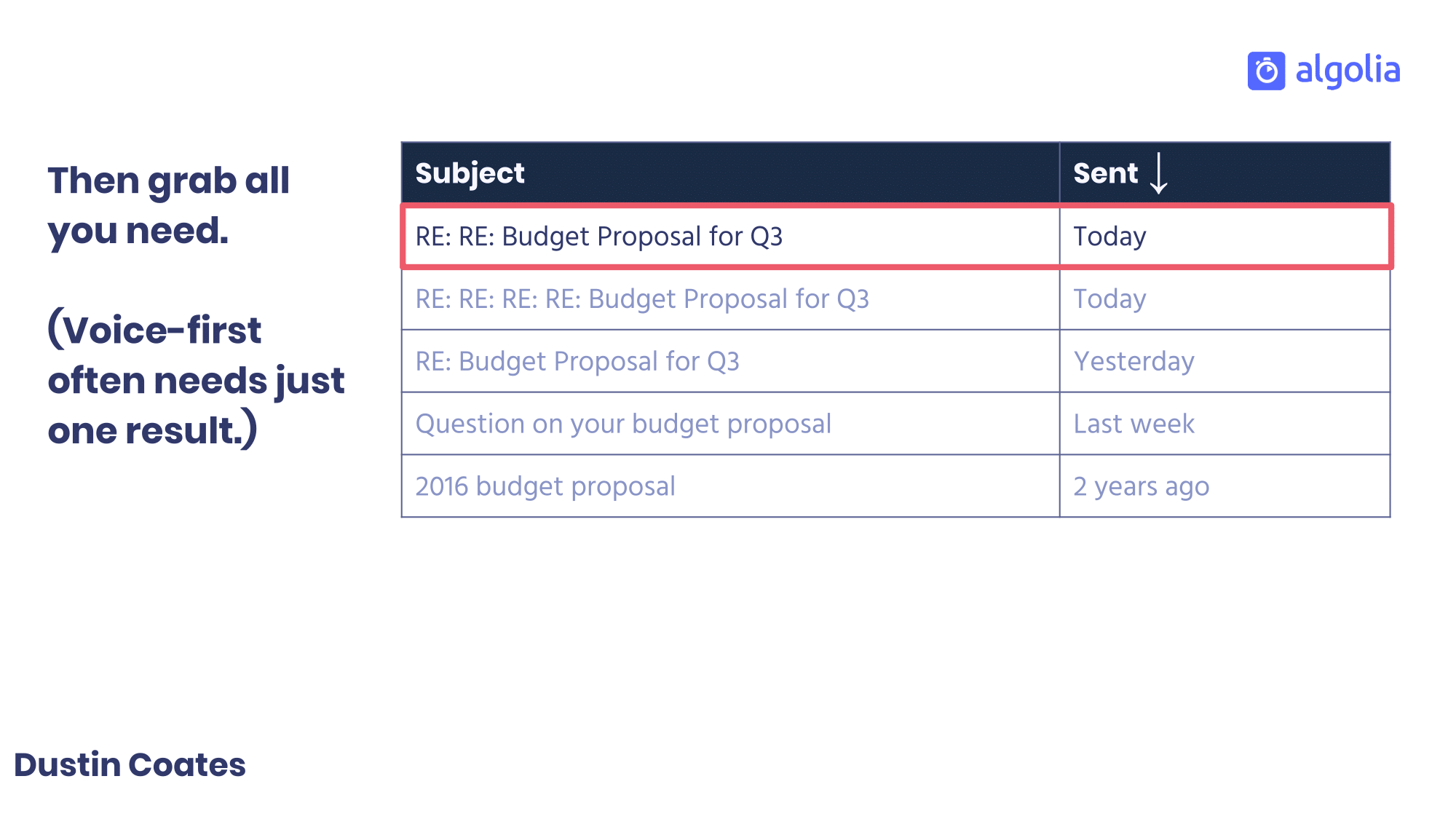

Through all of that, we’ll probably end up—especially if we’ve got a large index in which we’re searching—with more than one result. Yet we need to present just a subset. Especially in a voice application, that’s usually just one. The solution is to sort them from most relevant to least, and take what you need.

In the case of emails, often the newest ones are most relevant, so we’ll sort by send date and grab the most recent email to present to the searcher.
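Sorting by send date and keeping the top result takes only a few lines. This sketch assumes each email has a `sentAt` `Date` field (a hypothetical shape) and copies the array so the original order is left alone.

```javascript
// Newest first, then keep only as many results as the medium can present.
function mostRecent(emails, count = 1) {
  return [...emails].sort((a, b) => b.sentAt - a.sentAt).slice(0, count);
}

const emails = [
  { subject: "Budget proposal v1", sentAt: new Date(2018, 6, 2) },
  { subject: "Budget proposal v3", sentAt: new Date(2018, 6, 20) },
  { subject: "Budget proposal v2", sentAt: new Date(2018, 6, 10) },
];
console.log(mostRecent(emails)[0].subject); // Budget proposal v3
```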

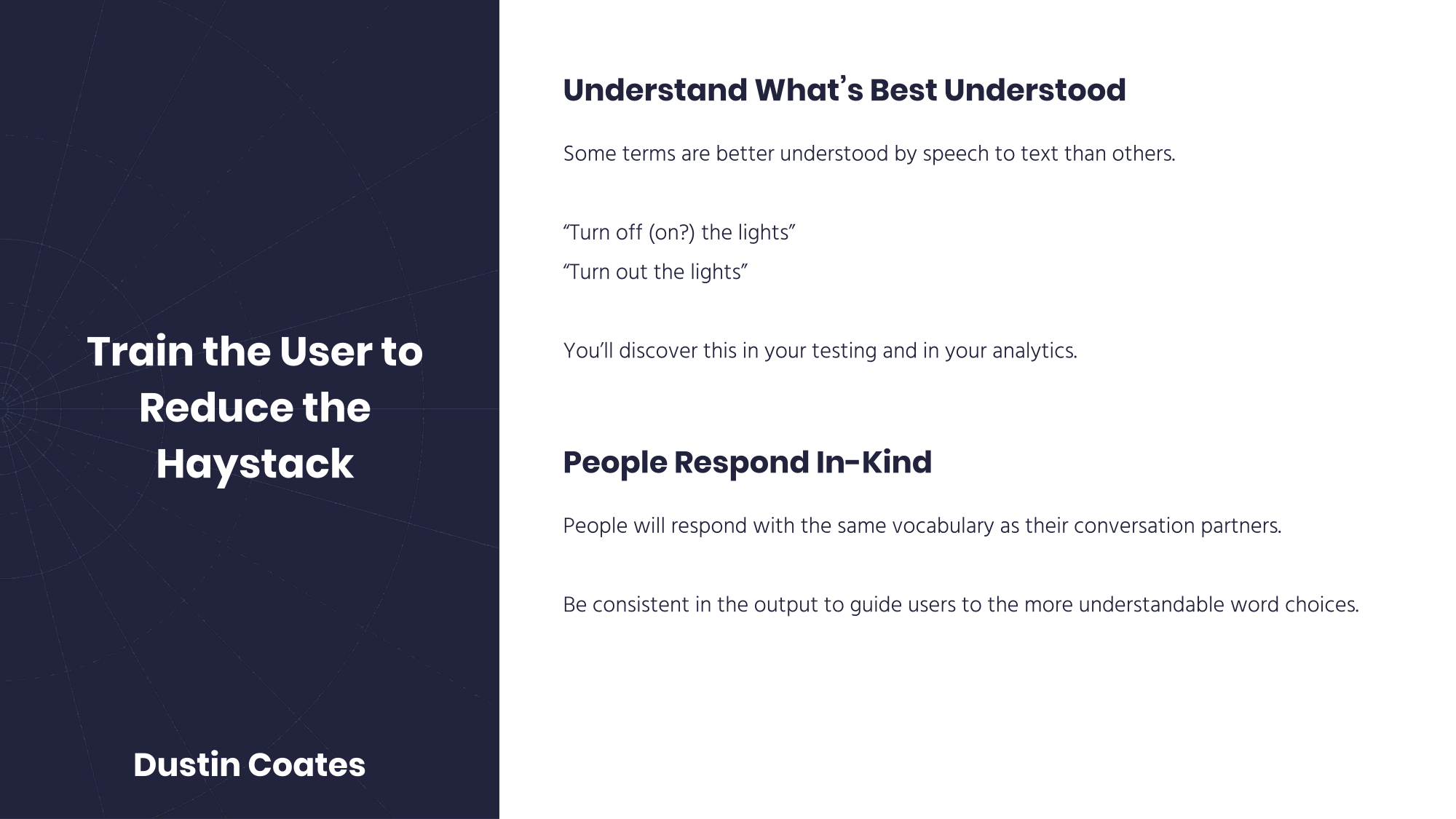

A corollary of speech to text’s imperfection is that certain phrases are understood correctly more often than others. The analytics will reveal which ones, and you can use that information to train users in how to speak. This doesn’t need to be heavy-handed, as in “If you want reservations, say reservations.” Instead, rely on the knowledge that people tend to respond to others using the same language they hear.

I am routinely frustrated when my smart home devices blind me after I ask them to turn off the lights. Turn on the lights? Why not? What if, instead, I said to turn out the lights? That phrase greatly reduces the ambiguity. Smart devices don’t need to proactively train me to say it. Instead, they can subtly guide me through their responses.

“Turn off the lights.”

“Alright, turning out the lights.”

“Turn off the lights.”

“Turning out four lights.”

“Turn out the lights.”

“Now turning out the lights.”

We’ve trained our users to use the best-understood language, we’re using synonyms, and we’re filtering down the haystack. Yet what if there are no perfect results?

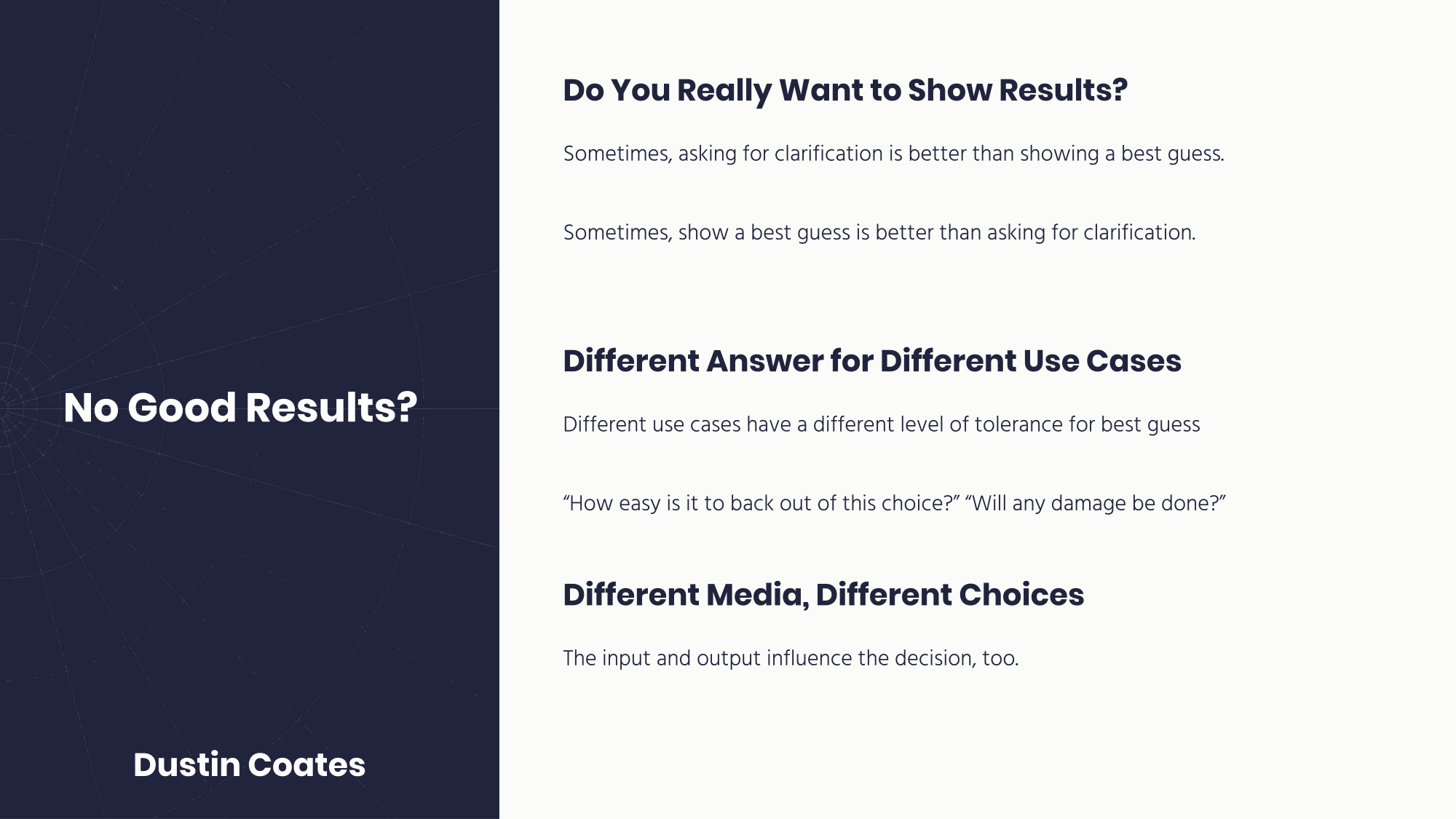

First, ask yourself whether you really want to show results. Sometimes asking for clarification is better than showing a best guess; other times a best guess is better than asking for clarification. Which applies depends largely on the use case and the medium.

Use cases differ in their tolerance for showing an imperfect result. The questions to ask are, “How easy is it to back out of this choice?” and “Will any damage be done?” When you’re transferring money to someone else, there’s a very low tolerance for imperfection. Searching for emails has a high tolerance, unless the action is to delete whatever’s returned.

Different media also affect the decision. This comes down to the difference between voice-only, voice-first, and voice-added devices. Voice-only is what it sounds like; voice-first relies primarily on voice but may have a screen or minor inputs such as buttons; and voice-added uses voice as an addition, often with a keyboard as the primary input mechanism. As you go from voice-only to voice-added, you can show the user more information, and getting the “one true result” becomes less important.
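Those two questions and the medium can be folded into a small decision helper. Everything here is an assumption for illustration: the `confidence` score (which many search engines don’t expose directly), the surface names, and especially the thresholds, which are placeholders to tune rather than recommendations.

```javascript
// Placeholder thresholds: the more a surface can show, the lower the bar for a best guess.
const BEST_GUESS_THRESHOLDS = { "voice-only": 0.7, "voice-first": 0.5, "voice-added": 0.3 };

// Decide whether to present a best guess or ask the user to clarify.
// `reversible`: can the user easily back out of the action?
function answerOrClarify({ confidence, reversible, surface }) {
  // Irreversible or damaging actions (e.g. moving money) need near-certainty.
  const threshold = reversible ? BEST_GUESS_THRESHOLDS[surface] : 0.95;
  return confidence >= threshold ? "answer" : "clarify";
}

console.log(answerOrClarify({ confidence: 0.6, reversible: true, surface: "voice-first" })); // answer
console.log(answerOrClarify({ confidence: 0.6, reversible: false, surface: "voice-added" })); // clarify
```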

If you decide that you really want or need to show a result, the final step is to mark all words as optional, then sort results by the number of matching query words. In that case, “latest,” “budget” (or “spending”), and “proposal” are all optional, and results that contain “latest budget proposal” will rank higher than “latest re-org proposal” or “marriage proposal.”
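A sketch of that last-resort ranking, assuming records are plain strings and using a hypothetical synonym table so that “budget” and “spending” count as the same word. Records that match none of the words are dropped; among the rest, more matching words means a higher rank.

```javascript
// Hypothetical synonym table; "budget" and "spending" are interchangeable.
const SYNONYMS = { budget: ["budget", "spending"], spending: ["budget", "spending"] };

function rankWithOptionalWords(queryWords, records) {
  return records
    .map(text => {
      const words = new Set(text.toLowerCase().split(/\s+/));
      // Count query words (or one of their synonyms) found in the record.
      const matched = queryWords.filter(word =>
        (SYNONYMS[word] || [word]).some(alt => words.has(alt))
      ).length;
      return { text, matched };
    })
    .filter(result => result.matched > 0) // every word is optional, but one must match
    .sort((a, b) => b.matched - a.matched); // more matching words rank higher
}

const ranked = rankWithOptionalWords(
  ["latest", "spending", "proposal"],
  ["Marriage proposal", "Lunch menu", "Latest re-org proposal", "Latest budget proposal"]
);
console.log(ranked.map(r => r.text).join(" | "));
// Latest budget proposal | Latest re-org proposal | Marriage proposal
```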

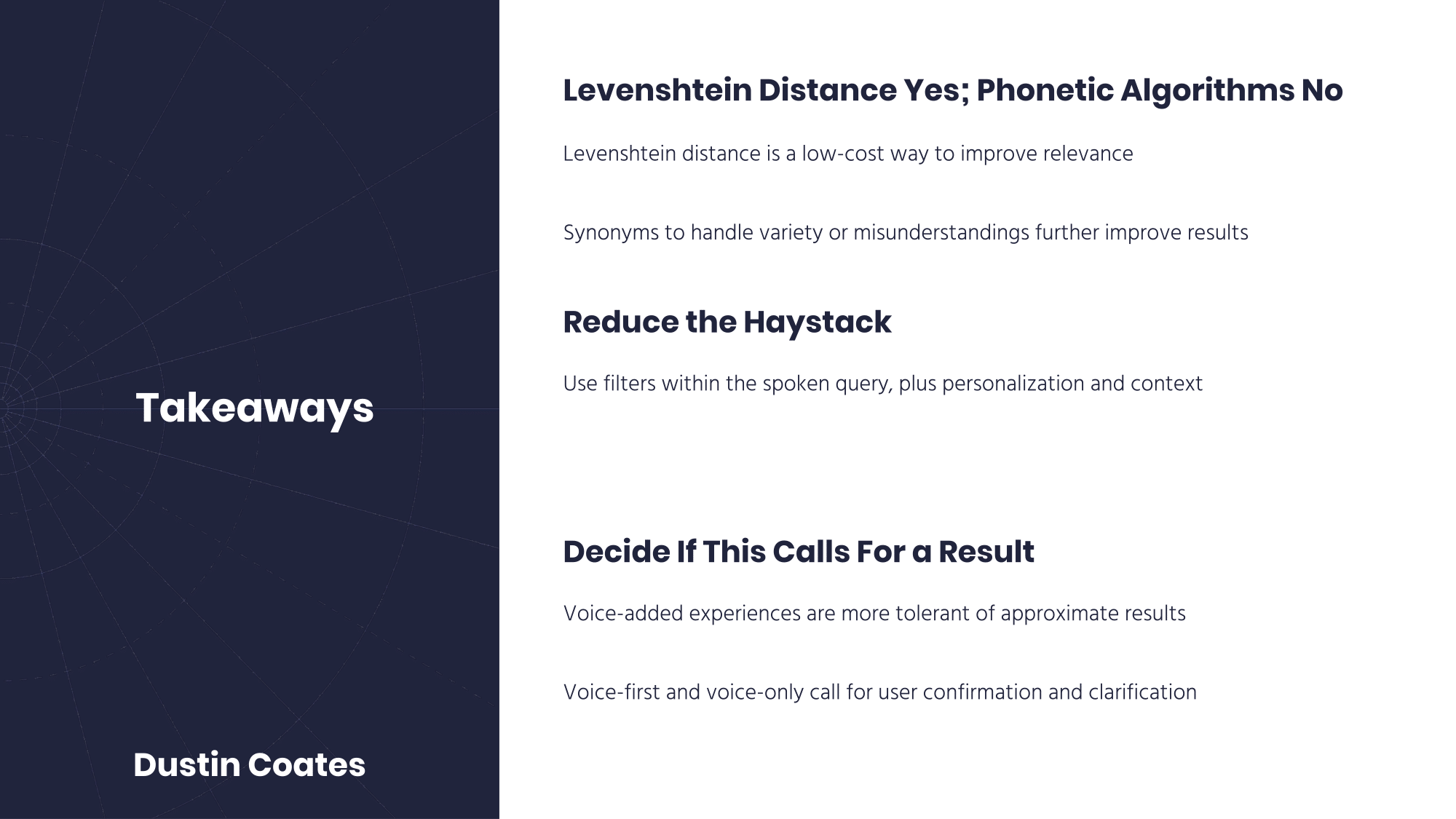

Developers can build good voice search, though the approaches differ from those for a normal text search. Speech to text is going to be a problem. Training the user, synonyms, and the Levenshtein Distance are useful, but phonetic algorithms can safely be avoided. Reducing the haystack through filters should be a priority when trying to get relevant results. And, finally, not every situation calls for a result. Start with these three guideposts and you’ll already be ahead of your competitors.

Thanks for reading! Will you please consider sharing on Twitter or LinkedIn?